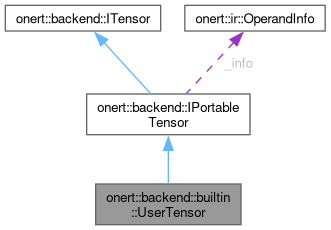

Tensor object for the input and output tensors provided by the user.

#include <UserTensor.h>

Public Member Functions

| Type | Member | Description |
| --- | --- | --- |
| | UserTensor (const ir::OperandInfo &info, uint8_t *buffer, size_t size) | |
| uint8_t * | buffer () const override | |
| void | set_dynamic () override | Set this tensor dynamic. |
| void | setShape (const ir::Shape &new_shape) override | Set the shape of the tensor to new_shape. |
| bool | applyShape (const ir::Shape &) override | Set the shape and possibly re-allocate the buffer. |

Public Member Functions inherited from onert::backend::IPortableTensor

| Type | Member | Description |
| --- | --- | --- |
| | IPortableTensor (const ir::OperandInfo &info) | |
| virtual | ~IPortableTensor () | |
| const ir::OperandInfo & | get_info () const | |
| const ir::Sparsity * | sparsity () const | |
| size_t | total_size () const override final | |
| size_t | calcOffset (const ir::Coordinates &coords) const override final | |
| ir::DataType | data_type () const override final | |
| float | data_scale () const override final | |
| int32_t | data_zero_point () const override final | |
| const std::vector< float > & | data_scales () const override final | |
| const std::vector< int32_t > & | data_zero_points () const override | |
| bool | is_constant () const override final | Return true if the tensor is constant. |
| bool | is_dynamic () const override final | Return true if the tensor needs dynamic allocation, meaning that its output shape cannot be known at compile time and is calculated during kernel execution. |
| ir::Shape | getShape () const override final | Get the ir::Shape of the tensor. |
| bool | has_padding () const final | |
| void | access (const std::function< void(ITensor &tensor)> &fn) final | |

Public Member Functions inherited from onert::backend::ITensor

| Type | Member | Description |
| --- | --- | --- |
| virtual | ~ITensor () | |
| virtual void | deallocBuffer () | Deallocate the buffer (only for dynamic tensors). |
| virtual bool | is_subtensor () const | |
| virtual bool | needMemoryMap () const | |
| virtual void | enqueueWriteBuffer (const void *, bool) | |
| virtual void | enqueueReadBuffer (void *, bool) | |

Additional Inherited Members

Protected Attributes inherited from onert::backend::IPortableTensor

| Type | Member |
| --- | --- |
| ir::OperandInfo | _info |

Detailed Description

Tensor object for the input and output tensors provided by the user.

This class wraps a buffer that is allocated by the user, so it is responsible for neither allocating nor deallocating that buffer. All model input and output tensors are wrapped with this class for execution.
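To make the ownership rule concrete, here is a minimal, hypothetical C++ model of a tensor that borrows a user buffer. BorrowedBufferTensor is an illustrative name and is not part of onert; it keeps only a pointer and a byte count, and its destructor deliberately leaves the memory alone.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical, simplified stand-in for a tensor that wraps a user-owned
// buffer. It is NOT the onert class; it only illustrates the ownership rule.
class BorrowedBufferTensor
{
public:
  BorrowedBufferTensor(uint8_t *buffer, size_t size) : _buffer{buffer}, _size{size} {}

  // Defaulted destructor: the wrapper never frees the buffer, because the
  // caller still owns it.
  ~BorrowedBufferTensor() = default;

  uint8_t *buffer() const { return _buffer; }
  size_t total_size() const { return _size; }

private:
  uint8_t *_buffer; // borrowed, not owned
  size_t _size;     // capacity in bytes, as supplied by the user
};
```

In this model the caller allocates the memory, passes its address and size to the wrapper, and must keep that memory alive for as long as the wrapper is in use; writes through buffer() land directly in the caller's memory.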

Definition at line 34 of file UserTensor.h.

Constructor & Destructor Documentation

◆ UserTensor()

UserTensor (const ir::OperandInfo &info, uint8_t *buffer, size_t size) [inline]

Definition at line 37 of file UserTensor.h.

Member Function Documentation

◆ applyShape()

bool applyShape (const ir::Shape &) [override, virtual]

Set the tensor's shape to shape and possibly re-allocate the buffer.

If the tensor is dynamic and previously allocated memory exists, that memory is deallocated. If the tensor is static (its memory previously allocated by StaticTensorManager), buffer() will be overwritten.

- Parameters

  - shape: the tensor's new shape. While memory is allocated for this new shape, the tensor's shape is set to new_shape.

- Returns

  - true if applying the shape is successful

  - false if applying the shape is not supported (other errors throw an exception)

Reimplemented from onert::backend::ITensor.

Definition at line 25 of file UserTensor.cc.

References onert::backend::IPortableTensor::data_type(), setShape(), and onert::ir::sizeOfDataType().
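applyShape() is documented as referencing data_type(), setShape() and ir::sizeOfDataType(), which suggests it computes the byte size of the requested shape from the element type. Because the runtime cannot re-allocate a user buffer, one plausible policy is to accept a new shape only when it fits in that buffer. The sketch below models this policy in standalone C++; FixedBufferTensor, numElements, and the choice to throw when the buffer is too small are assumptions of this sketch, not a copy of UserTensor.cc.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical, simplified shape: a list of dimensions, outermost first.
using Shape = std::vector<int32_t>;

// Number of elements described by a shape (1 for a rank-0 shape).
// Dimensions are assumed to be non-negative.
inline size_t numElements(const Shape &shape)
{
  size_t n = 1;
  for (int32_t d : shape)
    n *= static_cast<size_t>(d);
  return n;
}

// Hypothetical tensor over a fixed user buffer. applyShape() cannot grow the
// buffer, so it only accepts shapes whose byte size fits in the capacity.
class FixedBufferTensor
{
public:
  FixedBufferTensor(size_t capacity_bytes, size_t elem_size)
    : _capacity{capacity_bytes}, _elem_size{elem_size}
  {
  }

  void setShape(const Shape &new_shape) { _shape = new_shape; }
  const Shape &getShape() const { return _shape; }

  // Follows the documented contract: returns true on success and throws for
  // other errors. Treating "buffer too small" as an exception is an
  // assumption of this sketch.
  bool applyShape(const Shape &new_shape)
  {
    const size_t required = numElements(new_shape) * _elem_size;
    if (required > _capacity)
      throw std::runtime_error("user buffer is too small for the new shape");
    setShape(new_shape); // only reached when the new shape fits
    return true;
  }

private:
  size_t _capacity;  // bytes provided by the user
  size_t _elem_size; // bytes per element, e.g. 4 for float32
  Shape _shape;
};
```

With a 24-byte buffer of 4-byte elements, applyShape({2, 3}) succeeds, whereas applyShape({4, 4}) would need 64 bytes and throws, leaving the previous shape in place.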

◆ buffer()

uint8_t * buffer () const [inline, override, virtual]

Implements onert::backend::ITensor.

Definition at line 43 of file UserTensor.h.

◆ set_dynamic()

void set_dynamic () [inline, override, virtual]

Set this tensor dynamic.

Reimplemented from onert::backend::ITensor.

Definition at line 44 of file UserTensor.h.

References onert::backend::IPortableTensor::_info, and onert::ir::OperandInfo::setDynamic().

◆ setShape()

void setShape (const ir::Shape &new_shape) [inline, override, virtual]

Set the shape of the tensor to new_shape.

- Note

  - The higher dimension is placed first.
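Reading the note as "shapes list dimensions from outermost to innermost" and assuming a dense row-major layout with no padding, the byte offset of an element can be computed as in the hypothetical helper below. rowMajorByteOffset is an illustrative name; it is not necessarily how IPortableTensor::calcOffset() is implemented.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical helper: byte offset of an element in a dense, unpadded tensor
// whose shape lists the outermost (highest) dimension first.
inline size_t rowMajorByteOffset(const std::vector<int32_t> &shape,
                                 const std::vector<int32_t> &coords, size_t elem_size)
{
  if (shape.size() != coords.size())
    throw std::invalid_argument("rank mismatch");

  size_t offset = 0;
  // Horner-style accumulation: walking from the outermost dimension inward,
  // multiply the running index by the next dimension and add the coordinate.
  for (size_t i = 0; i < shape.size(); ++i)
  {
    if (coords[i] < 0 || coords[i] >= shape[i])
      throw std::out_of_range("coordinate out of range");
    offset = offset * static_cast<size_t>(shape[i]) + static_cast<size_t>(coords[i]);
  }
  return offset * elem_size;
}
```

For a shape of {2, 3, 4} with 4-byte elements, the coordinate {1, 2, 3} maps to element index 1 × 12 + 2 × 4 + 3 = 23, which is byte offset 92.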

Reimplemented from onert::backend::ITensor.

Definition at line 45 of file UserTensor.h.

References onert::backend::IPortableTensor::_info, and onert::ir::OperandInfo::shape().

Referenced by applyShape().
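set_dynamic() and setShape() are documented as referencing the inherited _info member together with OperandInfo::setDynamic() and OperandInfo::shape(); that is, the tensor keeps its shape and dynamic flag in the operand info rather than in fields of its own. The hypothetical sketch below models that delegation. OperandInfoModel and InfoBackedTensor are illustrative stand-ins, not the onert types, and their method names are assumptions.

```cpp
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical, reduced model of operand metadata (not onert's ir::OperandInfo).
class OperandInfoModel
{
public:
  void setDynamic() { _dynamic = true; }
  bool isDynamic() const { return _dynamic; }
  void shape(std::vector<int32_t> new_shape) { _shape = std::move(new_shape); }
  const std::vector<int32_t> &shape() const { return _shape; }

private:
  std::vector<int32_t> _shape;
  bool _dynamic = false;
};

// The tensor stores no shape or dynamic flag of its own: the mutators forward
// to the stored info object, and the accessors read it back.
class InfoBackedTensor
{
public:
  void set_dynamic() { _info.setDynamic(); }
  void setShape(const std::vector<int32_t> &new_shape) { _info.shape(new_shape); }
  bool is_dynamic() const { return _info.isDynamic(); }
  const std::vector<int32_t> &getShape() const { return _info.shape(); }

private:
  OperandInfoModel _info; // plays the role of the protected _info member
};
```

In this model, keeping a single copy of the metadata means getShape() and is_dynamic() always reflect what the setters last wrote.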

The documentation for this class was generated from the following files:

- runtime/onert/core/src/backend/builtin/UserTensor.h

- runtime/onert/core/src/backend/builtin/UserTensor.cc