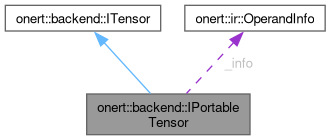

A tensor class that is portable for other backends.

#include <IPortableTensor.h>

Public Member Functions | |

| IPortableTensor (const ir::OperandInfo &info) | |

| virtual | ~IPortableTensor () |

| const ir::OperandInfo & | get_info () const |

| const ir::Sparsity * | sparsity () const |

| size_t | total_size () const override final |

| size_t | calcOffset (const ir::Coordinates &coords) const override final |

| ir::DataType | data_type () const override final |

| float | data_scale () const override final |

| int32_t | data_zero_point () const override final |

| const std::vector< float > & | data_scales () const override final |

| const std::vector< int32_t > & | data_zero_points () const override |

| bool | is_constant () const override final |

| Return true if the tensor is constant. | |

| bool | is_dynamic () const override final |

| Return true if the tensor needs dynamic allocation, meaning that the output shape cannot be known at compile time and is instead calculated during kernel execution. | |

| ir::Shape | getShape () const override final |

| Get ir::Shape of tensor. | |

| bool | has_padding () const final |

| void | access (const std::function< void(ITensor &tensor)> &fn) final |

Public Member Functions inherited from onert::backend::ITensor | |

| virtual | ~ITensor () |

| virtual uint8_t * | buffer () const =0 |

| virtual bool | applyShape (const ir::Shape &) |

| Set the tensor's shape to the given shape and possibly re-allocate the buffer. | |

| virtual void | set_dynamic () |

| Mark this tensor as dynamic. | |

| virtual void | deallocBuffer () |

| Deallocate the buffer (only for dynamic tensors). | |

| virtual void | setShape (const ir::Shape &) |

| Set the shape of the tensor to new_shape. | |

| virtual bool | is_subtensor () const |

| virtual bool | needMemoryMap () const |

| virtual void | enqueueWriteBuffer (const void *, bool) |

| virtual void | enqueueReadBuffer (void *, bool) |

Protected Attributes | |

| ir::OperandInfo | _info |

Detailed Description

A tensor class that is portable for other backends.

Backends that use derivatives of this interface can reuse each other's tensors without copying. The criteria for a portable tensor are:

- It must not have any padding.

- Its access method must not require any special operations. For example, CL memory must be mapped and unmapped before the CPU can use it, so a tensor backed by such memory cannot be portable.
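The two criteria can be illustrated with a minimal, self-contained sketch. It does not use the real onert headers: `ITensorSketch`, `PortableTensorSketch`, and `HostTensorSketch` are hypothetical stand-ins, and the bodies of `has_padding()` and `access()` are assumptions about how a portable tensor would plausibly satisfy the criteria, not a copy of IPortableTensor.h.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <memory>

// Hypothetical stand-in for onert::backend::ITensor (only what the sketch needs).
struct ITensorSketch
{
  virtual ~ITensorSketch() = default;
  virtual uint8_t *buffer() const = 0;
  virtual bool has_padding() const = 0;
  virtual void access(const std::function<void(ITensorSketch &)> &fn) = 0;
};

// Hypothetical portable base: both criteria are marked final so that
// derived tensors cannot violate them.
struct PortableTensorSketch : ITensorSketch
{
  // Criterion 1: the layout is dense, so there is never any padding.
  bool has_padding() const final { return false; }

  // Criterion 2: no map/unmap step; the callback runs directly on this tensor.
  void access(const std::function<void(ITensorSketch &)> &fn) final
  {
    assert(fn && "access() needs a callable");
    fn(*this);
  }
};

// Hypothetical host-memory tensor that a CPU-style backend might provide.
class HostTensorSketch final : public PortableTensorSketch
{
public:
  // make_unique<T[]> value-initializes, so the buffer starts zeroed.
  explicit HostTensorSketch(std::size_t bytes) : _data(std::make_unique<uint8_t[]>(bytes)) {}

  uint8_t *buffer() const override { return _data.get(); }

private:
  std::unique_ptr<uint8_t[]> _data;
};
```

Because `access()` simply forwards to the callback, any backend that receives a `PortableTensorSketch` can read `buffer()` directly. A tensor backed by device memory would need extra work around the callback and therefore would not fit this base class.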

Definition at line 36 of file IPortableTensor.h.

Constructor & Destructor Documentation

◆ IPortableTensor()

|

inline |

Definition at line 39 of file IPortableTensor.h.

◆ ~IPortableTensor()

|

virtual |

Definition at line 24 of file IPortableTensor.cc.

Member Function Documentation

◆ access()

|

inline final virtual |

Implements onert::backend::ITensor.

Definition at line 68 of file IPortableTensor.h.

◆ calcOffset()

|

final override virtual |

Implements onert::backend::ITensor.

Definition at line 26 of file IPortableTensor.cc.

References _info, coords, data_type(), offset(), and onert::ir::OperandInfo::shape().
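Since a portable tensor has no padding, a byte offset can be derived from the shape, the coordinates, and the element size alone. The sketch below shows one common way to do this for a dense layout with the outermost dimension first. `calcOffsetSketch` and its parameters are hypothetical names, and the body is an assumption about the general technique rather than the code in IPortableTensor.cc.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: byte offset of an element in a dense, unpadded tensor
// whose dimensions are listed outermost first.
std::size_t calcOffsetSketch(const std::vector<std::size_t> &dims,
                             const std::vector<std::size_t> &coords,
                             std::size_t element_size)
{
  assert(dims.size() == coords.size());

  std::size_t offset = 0;
  for (std::size_t i = 0; i < dims.size(); ++i)
  {
    assert(coords[i] < dims[i]);
    // Horner-style accumulation: later (inner) dimensions vary fastest.
    offset = offset * dims[i] + coords[i];
  }

  // Convert the element index into a byte offset.
  return offset * element_size;
}
```

For a shape of {2, 3, 4} with 4-byte elements, the coordinate {1, 2, 3} maps to element index (1 * 3 + 2) * 4 + 3 = 23, which is byte offset 92.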

◆ data_scale()

|

inline final override virtual |

Implements onert::backend::ITensor.

Definition at line 55 of file IPortableTensor.h.

References _info, onert::ir::TypeInfo::scale(), and onert::ir::OperandInfo::typeInfo().

Referenced by onert::backend::cpu::ops::ConcatLayer::concatenationQuant8(), onert::backend::cpu::ops::SoftMaxLayer::configure(), onert::backend::cpu::ops::FullyConnectedLayer::fullyConnectedHybrid(), onert::backend::cpu::ops::LogSoftMaxLayer::logsoftmaxQuant8(), onert::backend::cpu::ops::MeanLayer::MeanQuant8(), onert::backend::cpu::ops::ElementwiseActivationLayer::PopulateLookupTable(), onert::backend::cpu::ops::LogSoftMaxLayer::PopulateLookupTable(), onert::backend::cpu::ops::ConvolutionLayer::prepare(), onert::backend::cpu::ops::SoftMaxLayer::softmaxQuant8(), and onert::backend::cpu::ops::TransposeLayer::transposeQuant8().

◆ data_scales()

|

inline final override virtual |

Implements onert::backend::ITensor.

Definition at line 57 of file IPortableTensor.h.

References _info, onert::ir::TypeInfo::scales(), and onert::ir::OperandInfo::typeInfo().

Referenced by onert::backend::cpu::ops::DepthwiseConvolutionLayer::configure(), onert::backend::cpu::ops::DepthwiseConvolutionLayer::convQ8iHybridPerChannel(), onert::backend::cpu::ops::ConvolutionLayer::prepare(), onert::backend::cpu::ops::ConvolutionLayer::run(), and onert::backend::cpu::ops::DepthwiseConvolutionLayer::run().

◆ data_type()

|

inline final override virtual |

Implements onert::backend::ITensor.

Definition at line 54 of file IPortableTensor.h.

References _info, onert::ir::TypeInfo::type(), and onert::ir::OperandInfo::typeInfo().

Referenced by onert::backend::builtin::UserTensor::applyShape(), onert::exec::EdgeTensor::applyShape(), onert::backend::train::ops::BinaryArithmeticLayer::backward(), onert::backend::train::ops::ConvolutionLayer::backward(), onert::backend::train::ops::DepthwiseConvolutionLayer::backward(), onert::backend::train::ops::FullyConnectedLayer::backward(), onert::backend::train::ops::LossCategoricalCrossentropyLayer::backward(), onert::backend::train::ops::LossMeanSquaredErrorLayer::backward(), onert::backend::train::ops::MeanLayer::backward(), onert::backend::train::ops::PadLayer::backward(), onert::backend::train::ops::SoftMaxLayer::backward(), calcOffset(), onert::backend::cpu::ops::LogSoftMaxLayer::configure(), onert::backend::cpu::ops::SoftMaxLayer::configure(), onert::backend::cpu::ops::MeanLayer::configure(), onert::backend::cpu::ops::ReduceLayer::configure(), onert::backend::cpu::ops::DepthwiseConvolutionLayer::configure(), onert::backend::cpu::ops::ConvolutionLayer::configure(), onert::backend::cpu::ops::FullyConnectedLayer::configure(), onert::backend::cpu::ops::PoolLayer::configure(), onert::backend::cpu::ops::QuantizeLayer::configure(), onert::backend::cpu::ops::ElementwiseActivationLayer::configure(), onert::backend::cpu::ops::ElementwiseBinaryLayer::configure(), onert::backend::cpu::ops::BinaryArithmeticLayer::configure(), onert::backend::train::ops::DepthwiseConvolutionLayer::configureBackward(), onert::backend::train::ops::PadLayer::depad(), onert::backend::train::ops::LossCategoricalCrossentropyLayer::forward(), onert::backend::train::ops::LossMeanSquaredErrorLayer::forward(), onert::backend::cpu::ops::getReducerAxes(), onert::backend::cpu::ops::PadLayer::padImpl(), onert::backend::cpu::ops::ConvolutionLayer::prepare(), onert::backend::cpu::ops::FullyConnectedLayer::prepare(), onert::backend::ruy::ops::ConvolutionLayer::prepare(), onert::backend::cpu::ops::LogSoftMaxLayer::run(), onert::backend::cpu::ops::AddNLayer::run(), 
onert::backend::cpu::ops::ArgMinMaxLayer::run(), onert::backend::cpu::ops::AttentionLayer::run(), onert::backend::cpu::ops::BatchMatMulLayer::run(), onert::backend::cpu::ops::BatchToSpaceNDLayer::run(), onert::backend::cpu::ops::BroadcastToLayer::run(), onert::backend::cpu::ops::CompareLayer::run(), onert::backend::cpu::ops::ConcatLayer::run(), onert::backend::cpu::ops::ConvolutionLayer::run(), onert::backend::cpu::ops::DepthToSpaceLayer::run(), onert::backend::cpu::ops::DepthwiseConvolutionLayer::run(), onert::backend::cpu::ops::DynamicUpdateSliceLayer::run(), onert::backend::cpu::ops::QuantizeLayer::run(), onert::backend::cpu::ops::FillLayer::run(), onert::backend::cpu::ops::FullyConnectedLayer::run(), onert::backend::cpu::ops::FusedBatchNormLayer::run(), onert::backend::cpu::ops::GatherLayer::run(), onert::backend::cpu::ops::L2NormLayer::run(), onert::backend::cpu::ops::LSTMLayer::run(), onert::backend::cpu::ops::OneHotLayer::run(), onert::backend::cpu::ops::PackLayer::run(), onert::backend::cpu::ops::PadLayer::run(), onert::backend::cpu::ops::PowLayer::run(), onert::backend::cpu::ops::RangeLayer::run(), onert::backend::cpu::ops::ReduceLayer::run(), onert::backend::cpu::ops::MeanLayer::run(), onert::backend::cpu::ops::ResizeBilinearLayer::run(), onert::backend::cpu::ops::ReverseLayer::run(), onert::backend::cpu::ops::RmsNormLayer::run(), onert::backend::cpu::ops::RoPELayer::run(), onert::backend::cpu::ops::SelectLayer::run(), onert::backend::cpu::ops::ShapeLayer::run(), onert::backend::cpu::ops::SliceLayer::run(), onert::backend::cpu::ops::SoftMaxLayer::run(), onert::backend::cpu::ops::SpaceToBatchNDLayer::run(), onert::backend::cpu::ops::SpaceToDepthLayer::run(), onert::backend::cpu::ops::SplitLayer::run(), onert::backend::cpu::ops::SplitVLayer::run(), onert::backend::cpu::ops::SqDiffLayer::run(), onert::backend::cpu::ops::StatelessRandomUniformLayer::run(), onert::backend::cpu::ops::StridedSliceLayer::run(), onert::backend::cpu::ops::TileLayer::run(), 
onert::backend::cpu::ops::TopKV2Layer::run(), onert::backend::cpu::ops::TransposeLayer::run(), onert::backend::cpu::ops::UnpackLayer::run(), onert::backend::ggml::ops::FullyConnectedLayer::run(), onert::backend::ggml::ops::GatherLayer::run(), onert::backend::ruy::ops::ConvolutionLayer::run(), onert::backend::ruy::ops::FullyConnectedLayer::run(), onert::backend::cpu::ops::SplitLayer::split(), and onert::backend::cpu::ops::SplitVLayer::splitV().

◆ data_zero_point()

|

inline final override virtual |

Implements onert::backend::ITensor.

Definition at line 56 of file IPortableTensor.h.

References _info, onert::ir::OperandInfo::typeInfo(), and onert::ir::TypeInfo::zero_point().

Referenced by onert::backend::cpu::ops::ConcatLayer::concatenationQuant8(), onert::backend::cpu::ops::DepthwiseConvolutionLayer::convQ8i(), onert::backend::cpu::ops::DepthwiseConvolutionLayer::convQ8uPerChannel(), onert::backend::cpu::ops::DepthwiseConvolutionLayer::convQ8uPerTensor(), onert::backend::cpu::ops::FullyConnectedLayer::fullyConnectedQuant8(), onert::backend::cpu::ops::LogSoftMaxLayer::logsoftmaxQuant8(), onert::backend::cpu::ops::MeanLayer::MeanQuant8(), onert::backend::cpu::ops::ElementwiseActivationLayer::PopulateLookupTable(), onert::backend::cpu::ops::QuantizeLayer::run(), onert::backend::cpu::ops::L2NormLayer::run(), onert::backend::cpu::ops::PadLayer::run(), onert::backend::cpu::ops::SoftMaxLayer::softmaxQuant8(), and onert::backend::cpu::ops::TransposeLayer::transposeQuant8().
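The quantized kernels listed above consume data_scale() and data_zero_point() together. This page does not state the formula, so the sketch below assumes the conventional affine scheme for 8-bit unsigned data, real = scale * (q - zero_point). `dequantizeSketch` and `quantizeSketch` are hypothetical helpers, not onert functions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: map a quantized uint8 value back to a real value
// under the assumed affine scheme real = scale * (q - zero_point).
float dequantizeSketch(uint8_t q, float scale, int32_t zero_point)
{
  assert(scale > 0.0f);
  return scale * static_cast<float>(static_cast<int32_t>(q) - zero_point);
}

// Hypothetical helper: inverse mapping, rounded to nearest and saturated
// to the uint8 range [0, 255].
uint8_t quantizeSketch(float real, float scale, int32_t zero_point)
{
  assert(scale > 0.0f);
  const long q = std::lround(real / scale) + zero_point;
  return static_cast<uint8_t>(std::max(0L, std::min(255L, q)));
}
```

With a scale of 0.5 and a zero point of 128, the stored value 130 corresponds to the real value 1.0, and values outside the representable range saturate at 0 or 255.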

◆ data_zero_points()

|

inline override virtual |

Implements onert::backend::ITensor.

Definition at line 58 of file IPortableTensor.h.

References _info, onert::ir::OperandInfo::typeInfo(), and onert::ir::TypeInfo::zero_points().

Referenced by onert::backend::cpu::ops::DepthwiseConvolutionLayer::convQ8uPerChannel().

◆ get_info()

|

inline |

Definition at line 48 of file IPortableTensor.h.

References _info.

Referenced by onert::exec::EdgeTensor::applyShape(), onert::backend::train::ops::FullyConnectedLayer::configureBackward(), onert::backend::train::ops::ConvolutionLayer::configureBackward(), onert::backend::train::ops::DepthwiseConvolutionLayer::configureBackward(), onert::backend::train::ops::BinaryArithmeticLayer::configureBackward(), and onert::backend::builtin::IOTensor::syncInfoFromBackendTensor().

◆ getShape()

|

inline final override virtual |

Get ir::Shape of tensor.

- Note

- Higher dimensions are placed at the front.

Implements onert::backend::ITensor.

Definition at line 64 of file IPortableTensor.h.

References _info, and onert::ir::OperandInfo::shape().

Referenced by onert::backend::train::ops::BackPropAccumulator::BackPropAccumulator(), onert::backend::train::ops::PadLayer::depad(), onert::backend::cpu::ops::getReducerAxes(), onert::backend::cpu::ops::HaveSameShapes(), onert::backend::cpu::ops::LSTMLayer::LSTMFloat(), onert::backend::cpu::ops::PadLayer::padImpl(), onert::backend::cpu::ops::ArgMinMaxLayer::run(), onert::backend::cpu::ops::ConvolutionLayer::run(), onert::backend::cpu::ops::DynamicUpdateSliceLayer::run(), onert::backend::cpu::ops::RankLayer::run(), onert::backend::cpu::ops::ReduceLayer::run(), onert::backend::cpu::ops::ReverseLayer::run(), onert::backend::cpu::ops::SelectLayer::run(), onert::backend::ruy::ops::ConvolutionLayer::run(), onert::backend::cpu::ops::SplitLayer::split(), onert::backend::cpu::ops::SplitVLayer::splitV(), and onert::backend::cpu::ops::TransposeLayer::transpose().

◆ has_padding()

|

inline final virtual |

Implements onert::backend::ITensor.

Definition at line 67 of file IPortableTensor.h.

◆ is_constant()

|

inline final override virtual |

Return true if the tensor is constant.

Reimplemented from onert::backend::ITensor.

Definition at line 62 of file IPortableTensor.h.

References _info, and onert::ir::OperandInfo::isConstant().

Referenced by onert::backend::cpu::ops::BatchMatMulLayer::batchMatMulFloat32(), onert::backend::cpu::ops::DepthwiseConvolutionLayer::configure(), onert::backend::cpu::ops::FullyConnectedLayer::fullyConnectedFloat32(), onert::backend::ruy::ops::FullyConnectedLayer::fullyConnectedFloat32(), onert::backend::cpu::ops::FullyConnectedLayer::prepare(), onert::backend::ruy::ops::ConvolutionLayer::prepare(), and onert::backend::ruy::ops::FullyConnectedLayer::prepare().

◆ is_dynamic()

|

inline final override virtual |

Return true if the tensor needs dynamic allocation, meaning that the output shape cannot be known at compile time and is instead calculated during kernel execution.

Implements onert::backend::ITensor.

Definition at line 63 of file IPortableTensor.h.

References _info, and onert::ir::OperandInfo::isDynamic().

Referenced by onert::exec::EdgeTensor::applyShape(), onert::backend::cpu::ops::DepthwiseConvolutionLayer::configure(), onert::backend::basic::Tensor::increase_ref(), onert::backend::cpu::ops::ConvolutionLayer::prepare(), onert::backend::cpu::ops::FullyConnectedLayer::prepare(), onert::backend::cpu::ops::ConvolutionLayer::run(), onert::backend::ruy::ops::ConvolutionLayer::run(), and onert::backend::builtin::IOTensor::syncInfoFromBackendTensor().

◆ sparsity()

|

inline |

Definition at line 49 of file IPortableTensor.h.

References _info, onert::ir::TypeInfo::sparsity(), and onert::ir::OperandInfo::typeInfo().

Referenced by onert::backend::cpu::ops::FullyConnectedLayer::fullyConnectedSparseWeight(), and onert::backend::cpu::ops::FullyConnectedLayer::run().

◆ total_size()

|

inline final override virtual |

Implements onert::backend::ITensor.

Definition at line 52 of file IPortableTensor.h.

References _info, and onert::ir::OperandInfo::total_size().

Referenced by onert::exec::EdgeTensor::allocate_buffer(), onert::exec::EdgeTensor::applyShape(), onert::backend::train::ops::FullyConnectedLayer::configureBackward(), onert::backend::train::ops::BinaryArithmeticLayer::configureBackward(), onert::backend::basic::train::TrainableTensor::fillBuffer(), onert::backend::cpu::ops::LSTMLayer::LSTMFloat(), onert::backend::cpu::ops::ReshapeLayer::reshapeGeneric(), onert::backend::cpu::ops::ArgMinMaxLayer::run(), onert::backend::cpu::ops::ExpandDimsLayer::run(), onert::backend::cpu::ops::ReverseLayer::run(), onert::backend::cpu::ops::SplitLayer::split(), and onert::backend::cpu::ops::SplitVLayer::splitV().

Field Documentation

◆ _info

|

protected |

Definition at line 71 of file IPortableTensor.h.

Referenced by onert::exec::EdgeTensor::allocate_buffer(), onert::backend::basic::Tensor::applyShape(), onert::backend::builtin::IOTensor::applyShape(), calcOffset(), data_scale(), data_scales(), data_type(), data_zero_point(), data_zero_points(), onert::backend::basic::ExternalTensor::ExternalTensor(), get_info(), getShape(), is_constant(), is_dynamic(), onert::backend::basic::Tensor::set_dynamic(), onert::backend::builtin::IOTensor::set_dynamic(), onert::backend::builtin::UserTensor::set_dynamic(), onert::exec::EdgeTensor::set_dynamic(), onert::backend::basic::Tensor::setShape(), onert::backend::builtin::UserTensor::setShape(), onert::exec::EdgeTensor::setShape(), onert::backend::builtin::IOTensor::setShape(), onert::backend::builtin::IOTensor::setTensor(), sparsity(), onert::backend::builtin::IOTensor::syncInfoFromBackendTensor(), and total_size().

The documentation for this class was generated from the following files:

- runtime/onert/core/include/backend/IPortableTensor.h

- runtime/onert/core/src/backend/IPortableTensor.cc