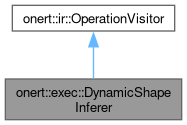

Class to infer the shape of an output tensor at execution time and allocate memory for the output tensor if needed.

#include <DynamicShapeInferer.h>

Detailed Description

Class to infer the shape of an output tensor at execution time and allocate memory for the output tensor if needed.
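The description above can be illustrated with a minimal sketch. It assumes a simplified model in which a tensor carries a shape, a "dynamic" flag, and a buffer; `Shape`, `Tensor`, `applyShape`, and `inferUnaryOutput` are hypothetical stand-ins for illustration and are not the onert types or functions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for illustration; these are NOT the onert types.
using Shape = std::vector<int>;

struct Tensor
{
  Shape shape;
  bool dynamic = false;      // true when the shape is only known at execution time
  std::vector<float> buffer; // storage, resized when the shape changes

  // Set a new shape and (re)allocate storage to fit it.
  void applyShape(const Shape &new_shape)
  {
    std::size_t count = 1;
    for (int d : new_shape)
    {
      assert(d >= 0); // dimensions are assumed non-negative
      count *= static_cast<std::size_t>(d);
    }
    shape = new_shape;
    dynamic = true;
    buffer.resize(count);
  }
};

// Shape-preserving operation (for example an elementwise activation):
// the output takes the input's shape. Returns true when the output was re-shaped.
bool inferUnaryOutput(const Tensor &input, Tensor &output)
{
  if (!input.dynamic)
    return false; // shape was fixed before execution; nothing to do
  output.applyShape(input.shape);
  return true;
}
```

In this sketch the early return keeps statically shaped tensors untouched, so memory is only (re)allocated when an input shape actually changed at execution time.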

Definition at line 34 of file DynamicShapeInferer.h.

Constructor & Destructor Documentation

◆ DynamicShapeInferer()

inline

Definition at line 37 of file DynamicShapeInferer.h.

Member Function Documentation

◆ visit() [1/46]

override

Definition at line 93 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::ArgMinMax::AXIS, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferArgMinMaxShape(), and onert::ir::operation::ArgMinMax::INPUT.

◆ visit() [2/46]

override

Definition at line 118 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferBatchMatMulShape(), onert::ir::operation::BatchMatMul::LHS, onert::ir::operation::BatchMatMul::param(), and onert::ir::operation::BatchMatMul::RHS.

◆ visit() [3/46]

override

Definition at line 139 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferBCQFullyConnectedShape(), onert::ir::operation::BCQFullyConnected::INPUT, and onert::ir::operation::BCQFullyConnected::WEIGHTS_CLUSTERS.

◆ visit() [4/46]

override

Definition at line 168 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::BCQGather::INDICES, onert::shape_inference::inferBCQGatherShape(), onert::ir::operation::BCQGather::INPUT_BINARY, onert::ir::operation::BCQGather::INPUT_CLUSTERS, and onert::ir::operation::BCQGather::param().

◆ visit() [5/46]

override

Definition at line 200 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferBCQUnembeddingShape(), onert::ir::operation::BCQUnembedding::INPUT, and onert::ir::operation::BCQUnembedding::WEIGHTS_CLUSTERS.

◆ visit() [6/46]

override

Definition at line 224 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::operation::BinaryArithmetic::LHS, and onert::ir::operation::BinaryArithmetic::RHS.
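This overload reads two operands, LHS and RHS. A common way to derive the output shape of such a binary operation is NumPy-style broadcasting; the sketch below shows that rule under the assumption that it applies here, which this page does not confirm. `Shape` and `broadcastShapes` are hypothetical names, not onert API.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

using Shape = std::vector<int>; // hypothetical stand-in, not the onert shape type

// Broadcast two shapes by aligning them at the trailing dimension.
// A dimension of 1 (or a missing leading dimension) stretches to match the other side.
Shape broadcastShapes(const Shape &lhs, const Shape &rhs)
{
  const std::size_t rank = std::max(lhs.size(), rhs.size());
  Shape out(rank, 1);
  for (std::size_t i = 0; i < rank; ++i)
  {
    // Read dimensions from the back; missing leading dimensions count as 1.
    const int l = i < lhs.size() ? lhs[lhs.size() - 1 - i] : 1;
    const int r = i < rhs.size() ? rhs[rhs.size() - 1 - i] : 1;
    assert(l >= 0 && r >= 0); // dimensions are assumed non-negative
    if (l != r && l != 1 && r != 1)
      throw std::invalid_argument("shapes are not broadcastable");
    out[rank - 1 - i] = (l == 1) ? r : l;
  }
  return out;
}
```

For example, shapes `{2, 1, 3}` and `{4, 3}` broadcast to `{2, 4, 3}`, while `{2, 3}` and `{4, 3}` are rejected.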

◆ visit() [7/46]

override

Definition at line 230 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferBroadcastToShape(), onert::ir::operation::BroadcastTo::INPUT, onert::ir::operation::Tile::MULTIPLES, and output_shape.

◆ visit() [8/46]

override

Definition at line 254 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::operation::Comparison::INPUT0, and onert::ir::operation::Comparison::INPUT1.

◆ visit() [9/46]

override

Definition at line 260 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::Concat::Param::axis, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::backend::ITensor::getShape(), onert::shape_inference::inferConcatShape(), output_shape, and onert::ir::operation::Concat::param().
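The references above involve the shapes of all inputs plus an `axis` parameter. As a rough illustration of what concatenation shape inference usually computes, the sketch below sums the sizes along the axis and requires every other dimension to match. `Shape` and `concatShape` are hypothetical names; the actual `inferConcatShape()` signature and behavior are not shown on this page.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

using Shape = std::vector<int>; // hypothetical stand-in, not the onert shape type

// Output shape of concatenating `inputs` along `axis` (negative axis counts from the back).
Shape concatShape(const std::vector<Shape> &inputs, int axis)
{
  if (inputs.empty())
    throw std::invalid_argument("concat needs at least one input");

  const std::size_t rank = inputs[0].size();
  if (axis < 0)
    axis += static_cast<int>(rank);
  if (axis < 0 || static_cast<std::size_t>(axis) >= rank)
    throw std::out_of_range("axis out of range");
  const std::size_t ax = static_cast<std::size_t>(axis);

  Shape out = inputs[0];
  for (std::size_t n = 1; n < inputs.size(); ++n)
  {
    const Shape &in = inputs[n];
    if (in.size() != rank)
      throw std::invalid_argument("all inputs must have the same rank");
    for (std::size_t d = 0; d < rank; ++d)
    {
      if (d == ax)
        out[d] += in[d]; // sizes add up along the concat axis
      else if (in[d] != out[d])
        throw std::invalid_argument("non-axis dimensions must match");
    }
  }
  return out;
}
```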

◆ visit() [10/46]

override

Definition at line 340 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferConv2DShape(), onert::ir::operation::Conv2D::INPUT, onert::ir::operation::Conv2D::KERNEL, output_shape, and onert::ir::operation::Conv2D::param().

◆ visit() [11/46]

override

Definition at line 364 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferDepthwiseConv2DShape(), onert::ir::operation::DepthwiseConv2D::INPUT, onert::ir::operation::DepthwiseConv2D::KERNEL, output_shape, and onert::ir::operation::DepthwiseConv2D::param().

◆ visit() [12/46]

override

Definition at line 653 of file DynamicShapeInferer.cc.

◆ visit() [13/46]

override

Definition at line 389 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), and onert::ir::operation::DynamicUpdateSlice::OPERAND.

◆ visit() [14/46]

override

Definition at line 395 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), and onert::ir::operation::ElementwiseActivation::INPUT.

◆ visit() [15/46]

override

Definition at line 400 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::operation::ElementwiseBinary::LHS, and onert::ir::operation::ElementwiseBinary::RHS.

◆ visit() [16/46]

override

Definition at line 406 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), and onert::ir::operation::ElementwiseUnary::INPUT.

◆ visit() [17/46]

override

Definition at line 411 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::ExpandDims::AXIS, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferExpandDimsShape(), onert::ir::operation::ExpandDims::INPUT, and output_shape.

◆ visit() [18/46]

override

Definition at line 461 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), output_shape, and onert::ir::operation::Fill::SHAPE.

◆ visit() [19/46]

override

Definition at line 489 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferFullyConnectedShape(), onert::ir::operation::FullyConnected::INPUT, onert::ir::operation::FullyConnected::Param::keep_num_dims, onert::ir::operation::FullyConnected::param(), and onert::ir::operation::FullyConnected::WEIGHT.

◆ visit() [20/46]

override

Definition at line 513 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), and onert::ir::operation::FusedBatchNorm::INPUT.

◆ visit() [21/46]

override

Definition at line 518 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::Gather::Param::axis, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::Gather::INDICES, onert::shape_inference::inferGatherShape(), onert::ir::operation::Gather::INPUT, and onert::ir::operation::Gather::param().

◆ visit() [22/46]

override

Definition at line 545 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), and onert::ir::operation::L2Normalization::INPUT.

◆ visit() [23/46]

override

Definition at line 550 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::LSTM::CELL_STATE_OUT, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::LSTM::INPUT, onert::ir::operation::LSTM::INPUT_TO_OUTPUT_WEIGHTS, onert::ir::operation::LSTM::OUTPUT, onert::ir::operation::LSTM::OUTPUT_STATE_OUT, onert::ir::operation::LSTM::param(), onert::ir::operation::LSTM::RECURRENT_TO_OUTPUT_WEIGHTS, onert::ir::operation::LSTM::SCRATCH_BUFFER, and onert::ir::operation::LSTM::Param::time_major.

◆ visit() [24/46]

override

Definition at line 661 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::OneHot::Param::axis, onert::ir::operation::OneHot::DEPTH, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::OneHot::INDICES, onert::shape_inference::inferOnehotShape(), and onert::ir::operation::OneHot::param().

◆ visit() [25/46]

override

Definition at line 687 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::Pack::Param::axis, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferPackShape(), onert::ir::operation::Pack::Param::num, onert::ir::operation::Pack::param(), and onert::ir::OperandIndexSequence::size().

◆ visit() [26/46]

override

Definition at line 723 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferPadShape(), onert::ir::operation::Pad::INPUT, output_shape, and onert::ir::operation::Pad::PAD.

◆ visit() [27/46]

override

Definition at line 750 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::Permute::getPermuteType(), and output_shape.

◆ visit() [28/46]

override

Definition at line 769 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferPoolShape(), onert::ir::operation::Pool2D::INPUT, output_shape, and onert::ir::operation::Pool2D::param().

◆ visit() [29/46]

override

Definition at line 789 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::operation::Pow::LHS, and onert::ir::operation::Pow::RHS.

◆ visit() [30/46]

override

Definition at line 795 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::Range::DELTA, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::Range::LIMIT, and onert::ir::operation::Range::START.
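The output of a range operation is one-dimensional, and its length depends on the run-time values of START, LIMIT, and DELTA, which is why it can only be inferred at execution time. A minimal sketch of the usual length formula, ceil((limit - start) / delta), follows; `rangeLength` is a hypothetical helper, and whether onert computes the length in exactly this way is an assumption.

```cpp
#include <cmath>
#include <stdexcept>

// Number of elements in the half-open sequence start, start + delta, ... up to (not including) limit.
int rangeLength(float start, float limit, float delta)
{
  if (delta == 0.0f)
    throw std::invalid_argument("delta must be non-zero");

  const float steps = (limit - start) / delta;
  if (steps < 0.0f)
    throw std::invalid_argument("delta points away from limit");

  return static_cast<int>(std::ceil(steps));
}
```

With start 0, limit 10, and delta 3 the sequence is 0, 3, 6, 9, so the inferred output shape would be `{4}`.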

◆ visit() [31/46]

override

Definition at line 834 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::Reduce::AXES, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferReduceShape(), onert::ir::operation::Reduce::INPUT, onert::ir::operation::Reduce::Param::keep_dims, onert::ir::operation::Reduce::name(), and onert::ir::operation::Reduce::param().
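The references above combine an input shape, a set of AXES, and the `keep_dims` parameter. The sketch below illustrates the conventional rule for a reduction: reduced axes are dropped, or kept with size 1 when `keep_dims` is set. `Shape` and `reduceShape` are hypothetical names and do not reproduce the `inferReduceShape()` signature, which this page does not show.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

using Shape = std::vector<int>; // hypothetical stand-in, not the onert shape type

// Output shape of reducing `input` over `axes` (negative axes count from the back).
Shape reduceShape(const Shape &input, const std::vector<int> &axes, bool keep_dims)
{
  const int rank = static_cast<int>(input.size());
  std::vector<bool> reduced(input.size(), false);
  for (int axis : axes)
  {
    if (axis < 0)
      axis += rank;
    if (axis < 0 || axis >= rank)
      throw std::out_of_range("axis out of range");
    reduced[static_cast<std::size_t>(axis)] = true;
  }

  Shape out;
  for (std::size_t d = 0; d < input.size(); ++d)
  {
    if (!reduced[d])
      out.push_back(input[d]); // untouched dimension
    else if (keep_dims)
      out.push_back(1); // reduced dimension kept with size 1
  }
  return out;
}
```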

◆ visit() [32/46]

override

Definition at line 878 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferReshapeShape(), onert::ir::operation::Reshape::INPUT, onert::ir::operation::Reshape::Param::new_shape, output_shape, onert::ir::operation::Reshape::param(), onert::ir::operation::Reshape::SHAPE, and onert::ir::OperandIndexSequence::size().

◆ visit() [33/46]

override

Definition at line 955 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::ResizeBilinear::Param::height_out, onert::shape_inference::inferResizeBilinearShape(), onert::ir::operation::Reshape::INPUT, output_shape, onert::ir::operation::ResizeBilinear::param(), onert::ir::OperandIndexSequence::size(), size, onert::ir::operation::ResizeBilinear::SIZE, and onert::ir::operation::ResizeBilinear::Param::width_out.

◆ visit() [34/46]

override

Definition at line 1001 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), and onert::ir::operation::Reverse::INPUT.

◆ visit() [35/46]

override

Definition at line 1006 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::Select::CONDITION, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferSelectShape(), onert::ir::operation::Select::INPUT_FALSE, and onert::ir::operation::Select::INPUT_TRUE.

◆ visit() [36/46]

override

Definition at line 1037 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), and output_shape.

◆ visit() [37/46]

override

Definition at line 1056 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::Slice::BEGINS, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferSliceShape(), onert::ir::operation::Slice::INPUT, and onert::ir::operation::Slice::SIZES.

◆ visit() [38/46]

override

Definition at line 1082 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), and onert::ir::operation::Softmax::INPUT.

◆ visit() [39/46]

override

Definition at line 1087 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::SpaceToBatchND::BLOCK_SIZE, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferSpaceToBatchNDShape(), onert::ir::operation::SpaceToBatchND::INPUT, and onert::ir::operation::SpaceToBatchND::PADDINGS.

◆ visit() [40/46]

override

Definition at line 1119 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::Split::AXIS, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferSplitShape(), onert::ir::operation::Split::INPUT, onert::ir::operation::Split::Param::num_splits, and onert::ir::operation::Split::param().

◆ visit() [41/46]

override

Definition at line 1159 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::operation::SquaredDifference::LHS, and onert::ir::operation::SquaredDifference::RHS.

◆ visit() [42/46]

override

Definition at line 1165 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferSqueezeShape(), onert::ir::operation::Squeeze::INPUT, and onert::ir::operation::Squeeze::param().

◆ visit() [43/46]

override

Definition at line 1187 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::StridedSlice::Param::begin_mask, onert::shape_inference::buildStridedSliceParams(), onert::ir::operation::StridedSlice::Param::end_mask, onert::ir::operation::StridedSlice::ENDS, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferStridedSliceShape(), onert::ir::operation::StridedSlice::INPUT, output_shape, onert::ir::operation::StridedSlice::param(), onert::ir::operation::StridedSlice::Param::shrink_axis_mask, onert::ir::operation::StridedSlice::STARTS, and onert::ir::operation::StridedSlice::STRIDES.

◆ visit() [44/46]

override

Definition at line 1227 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferTileShape(), onert::ir::operation::Tile::INPUT, onert::ir::operation::Tile::MULTIPLES, and output_shape.

◆ visit() [45/46]

override

Definition at line 1254 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferTransposeShape(), onert::ir::operation::Transpose::INPUT, and onert::ir::operation::Transpose::PERMUTATION.

◆ visit() [46/46]

override

Definition at line 1308 of file DynamicShapeInferer.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::operation::Unpack::Param::axis, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::shape_inference::inferUnpackShape(), onert::ir::operation::Unpack::Param::num, and onert::ir::operation::Unpack::param().

The documentation for this class was generated from the following files:

- runtime/onert/core/include/exec/DynamicShapeInferer.h

- runtime/onert/core/src/exec/DynamicShapeInferer.cc
