#include <SoftmaxLayer.h>

Public Member Functions | |

| | SoftMaxLayer () |

| void | softmaxFloat32 () |

| template<typename T > void | softmaxQuant8 () |

| void | configure (const IPortableTensor *input, const float beta, IPortableTensor *output) |

| void | run () override |

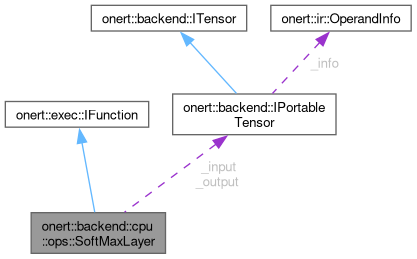

Public Member Functions inherited from onert::exec::IFunction | |

| virtual | ~IFunction ()=default |

| virtual void | prepare () |

Protected Attributes | |

| const IPortableTensor * | _input |

| IPortableTensor * | _output |

Detailed Description

Definition at line 27 of file SoftmaxLayer.h.

Constructor & Destructor Documentation

◆ SoftMaxLayer()

| onert::backend::cpu::ops::SoftMaxLayer::SoftMaxLayer | ( | ) |

Definition at line 52 of file SoftmaxLayer.cc.

Member Function Documentation

◆ configure()

| void onert::backend::cpu::ops::SoftMaxLayer::configure | ( | const IPortableTensor *input, const float beta, IPortableTensor *output | ) |

Definition at line 108 of file SoftmaxLayer.cc.

References _input, _output, onert::backend::IPortableTensor::data_scale(), onert::backend::IPortableTensor::data_type(), and nnfw::cker::PopulateSoftmaxLookupTable().
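The references above suggest that configure() prepares an exponent lookup table from the input scale when the tensor is quantized. This page does not show the table layout, so the following is only a minimal sketch of the general technique, assuming a 256-entry table indexed by the distance from the row maximum. `ExpTable` and `makeExpTable` are hypothetical names, not part of onert or cker.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical stand-in for a 256-entry exponent table (not the cker type).
using ExpTable = std::array<float, 256>;

// Assumed layout: table[255 - d] = exp(-input_scale * beta * d), where d in
// [0, 255] is the gap between the row maximum and a quantized input value.
ExpTable makeExpTable(float input_scale, float beta)
{
  assert(input_scale > 0.0f);
  ExpTable table{};
  const float scale = -input_scale * beta;
  for (int d = 0; d <= 255; ++d)
  {
    table[255 - d] = std::exp(scale * static_cast<float>(d));
  }
  return table;
}
```

Building the table once at configuration time means the per-element exponential during inference reduces to an array lookup.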

◆ run()

| void onert::backend::cpu::ops::SoftMaxLayer::run | ( | ) | override, virtual |

Implements onert::exec::IFunction.

Definition at line 129 of file SoftmaxLayer.cc.

References _input, onert::backend::IPortableTensor::data_type(), and softmaxFloat32().

Referenced by onert::backend::train::ops::SoftMaxLayer::forward().
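Because run() reads the input's data_type() and calls softmaxFloat32(), it presumably selects a kernel by element type. The sketch below illustrates that dispatch pattern only. The `DataType` enum and `selectKernel` are hypothetical stand-ins, and the mapping of quantized types to softmaxQuant8 instantiations is an assumption, not something this page confirms.

```cpp
#include <stdexcept>
#include <string>

// Hypothetical stand-in for the runtime's element type enum.
enum class DataType { FLOAT32, QUANT_UINT8_ASYMM, QUANT_INT8_ASYMM, INT32 };

// Returns the name of the kernel a run()-style dispatcher might choose.
// The quantized cases are assumed, not taken from SoftmaxLayer.cc.
std::string selectKernel(DataType type)
{
  switch (type)
  {
    case DataType::FLOAT32:
      return "softmaxFloat32";
    case DataType::QUANT_UINT8_ASYMM:
      return "softmaxQuant8<uint8_t>";
    case DataType::QUANT_INT8_ASYMM:
      return "softmaxQuant8<int8_t>";
    default:
      throw std::runtime_error("SoftMax: unsupported data type");
  }
}
```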

◆ softmaxFloat32()

| void onert::backend::cpu::ops::SoftMaxLayer::softmaxFloat32 | ( | ) |

Definition at line 57 of file SoftmaxLayer.cc.

References _input, _output, nnfw::cker::SoftmaxParams::beta, getNumberOfDimensions(), getNumberOfElements(), onert::backend::cpu::ops::getShape(), getSizeOfDimension(), nnfw::cker::Softmax(), and nnfw::cker::reference::Softmax().

Referenced by run().
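For orientation, the float path computes a softmax scaled by beta. The following is a minimal, self-contained sketch of that math over a single row, not the nnfw::cker implementation. `softmaxRow` is a hypothetical helper, and subtracting the row maximum is a standard stability step assumed here.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: softmax over one row, with inputs scaled by beta.
std::vector<float> softmaxRow(const std::vector<float> &input, float beta)
{
  assert(!input.empty());
  // Subtract the row maximum so the exponentials cannot overflow.
  const float max_val = *std::max_element(input.begin(), input.end());

  std::vector<float> output(input.size());
  float sum = 0.0f;
  for (std::size_t i = 0; i < input.size(); ++i)
  {
    output[i] = std::exp(beta * (input[i] - max_val));
    sum += output[i];
  }
  // Normalize so the row sums to 1.
  for (float &v : output)
  {
    v /= sum;
  }
  return output;
}
```

A larger beta sharpens the distribution toward the largest input, while beta = 0 yields a uniform distribution.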

◆ softmaxQuant8()

| template<typename T > void onert::backend::cpu::ops::SoftMaxLayer::softmaxQuant8 | ( | ) |

Definition at line 90 of file SoftmaxLayer.cc.

References _input, _output, onert::backend::IPortableTensor::data_scale(), onert::backend::IPortableTensor::data_zero_point(), onert::backend::cpu::ops::getShape(), nnfw::cker::SoftmaxParams::scale, nnfw::cker::SoftmaxParams::table, nnfw::cker::SoftmaxParams::uint8_table1, nnfw::cker::SoftmaxParams::uint8_table2, and nnfw::cker::SoftmaxParams::zero_point.

Field Documentation

◆ _input

| const IPortableTensor * onert::backend::cpu::ops::SoftMaxLayer::_input | protected |

Definition at line 42 of file SoftmaxLayer.h.

Referenced by onert::backend::train::ops::SoftMaxLayer::backward(), configure(), run(), softmaxFloat32(), and softmaxQuant8().

◆ _output

| IPortableTensor * onert::backend::cpu::ops::SoftMaxLayer::_output | protected |

Definition at line 43 of file SoftmaxLayer.h.

Referenced by onert::backend::train::ops::SoftMaxLayer::backward(), configure(), softmaxFloat32(), and softmaxQuant8().

The documentation for this class was generated from the following files:

- runtime/onert/backend/cpu/ops/SoftmaxLayer.h

- runtime/onert/backend/cpu/ops/SoftmaxLayer.cc