Loading...

Searching...

No Matches

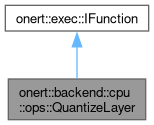

onert::backend::cpu::ops::QuantizeLayer Class Reference

#include <ElementwiseUnaryLayer.h>

Collaboration diagram for onert::backend::cpu::ops::QuantizeLayer:

Public Member Functions

- QuantizeLayer ()
- void configure (const IPortableTensor *input, IPortableTensor *output)
- void run () override

Public Member Functions inherited from onert::exec::IFunction

- virtual ~IFunction ()=default
- virtual void prepare ()
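The member list suggests a two-phase lifecycle: configure() binds the input and output tensors, and the executor later calls run() through the onert::exec::IFunction interface (the "Implements" note on run() indicates it is pure virtual there). The sketch below models that pattern under stated assumptions: IFunctionSketch and CopyLayerSketch are hypothetical stand-ins, std::vector<float> replaces IPortableTensor, and prepare() is assumed to default to a no-op, which this page does not confirm.

```cpp
#include <cassert>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for onert::exec::IFunction, mirroring only the members
// listed above: a defaulted virtual destructor, a virtual prepare(), and run().
class IFunctionSketch
{
public:
  virtual ~IFunctionSketch() = default;
  virtual void run() = 0;
  virtual void prepare() {} // assumption: no-op by default
};

// Hypothetical layer following the same configure-then-run pattern:
// configure() only stores tensor pointers, run() does the actual work.
class CopyLayerSketch : public IFunctionSketch
{
public:
  CopyLayerSketch() : _input(nullptr), _output(nullptr) {}

  void configure(const std::vector<float> *input, std::vector<float> *output)
  {
    assert(input != nullptr);
    assert(output != nullptr);
    _input = input;
    _output = output;
  }

  void run() override
  {
    if (_input == nullptr || _output == nullptr)
      throw std::runtime_error{"CopyLayerSketch: not configured"};
    *_output = *_input; // a real layer would compute here
  }

private:
  const std::vector<float> *_input;
  std::vector<float> *_output;
};
```

An executor holding only an IFunctionSketch reference can call prepare() and run() without knowing the concrete layer type, which is the point of the virtual interface.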

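Detailed Description

QuantizeLayer is the CPU-backend kernel for the Quantize operation. Based on the member definitions on this page, it quantizes float input with affineQuantize<float, uint8_t> or affineQuantize<float, int16_t>, and requantizes between QUANT_UINT8_ASYMM and QUANT_INT8_ASYMM tensors with nnfw::cker::Requantize. Any other type combination throws std::runtime_error.

Definition at line 68 of file ElementwiseUnaryLayer.h.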

Constructor & Destructor Documentation

◆ QuantizeLayer()

onert::backend::cpu::ops::QuantizeLayer::QuantizeLayer ( ) [inline]

Definition at line 71 of file ElementwiseUnaryLayer.h.

```
71 : _input(nullptr), _output(nullptr), _output_multiplier(0), _output_shift(0)
72 {
73   // DO NOTHING
74 }
```

Member Function Documentation

◆ configure()

void onert::backend::cpu::ops::QuantizeLayer::configure (const IPortableTensor *input, IPortableTensor *output)

Definition at line 485 of file ElementwiseUnaryLayer.cc.

```
486 {
487   assert(input != nullptr);
488   assert(output != nullptr);
489
490   _input = input;
491   _output = output;
492
494   {
495     // DO NOTHING
496   }
498             (output->data_type() == OperandType::QUANT_INT8_ASYMM)) ||
499            ((input->data_type() == OperandType::QUANT_INT8_ASYMM) &&
500             (output->data_type() == OperandType::QUANT_UINT8_ASYMM)))
501   {
502     const double effective_output_scale =
504     QuantizeMultiplier(effective_output_scale, &_output_multiplier, &_output_shift);
505   }
506   else
507   {
508     throw std::runtime_error{"Quantize: Unsupported data type"};
509   }
510 }
```

Symbols referenced in this listing:

- ir::DataType data_type() const override final: defined at IPortableTensor.h:54
- void QuantizeMultiplier(double double_multiplier, int32_t *quantized_multiplier, int *shift): defined at OperationUtils.cc:56

References onert::backend::IPortableTensor::data_type(), and onert::backend::cpu::ops::QuantizeMultiplier().
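For the int8/uint8 requantization pair, configure() converts a real-valued effective scale into the integer pair _output_multiplier and _output_shift via QuantizeMultiplier(). Line 503, which computes that scale, is not captured above; the usual choice is input scale divided by output scale, but treat that as an assumption. The sketch below shows the common TFLite-style decomposition M ≈ quantized_multiplier × 2^(shift − 31). quantizeMultiplierSketch is a hypothetical name, and the code is not copied from OperationUtils.cc.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical sketch (not the onert source): decompose a non-negative real
// multiplier M into a Q31 mantissa and an exponent so that
//   M ~= quantized_multiplier * 2^(shift - 31)
void quantizeMultiplierSketch(double double_multiplier, int32_t *quantized_multiplier, int *shift)
{
  assert(double_multiplier >= 0.0); // assumed precondition: scales are positive
  if (double_multiplier == 0.0)
  {
    *quantized_multiplier = 0;
    *shift = 0;
    return;
  }
  // std::frexp returns q in [0.5, 1) such that double_multiplier == q * 2^(*shift).
  const double q = std::frexp(double_multiplier, shift);
  int64_t q_fixed = static_cast<int64_t>(std::round(q * (1LL << 31)));
  if (q_fixed == (1LL << 31)) // rounding pushed q up to exactly 1.0
  {
    q_fixed /= 2;
    ++*shift;
  }
  if (*shift < -31) // too small to represent: flush to zero
  {
    *shift = 0;
    q_fixed = 0;
  }
  *quantized_multiplier = static_cast<int32_t>(q_fixed);
}
```

For example, a multiplier of 2.0 becomes quantized_multiplier = 2^30 with shift = 2. With this encoding a kernel can apply the scale using a 64-bit integer multiply and a shift instead of floating-point arithmetic.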

◆ run()

void onert::backend::cpu::ops::QuantizeLayer::run ( ) [override, virtual]

Implements onert::exec::IFunction.

Definition at line 512 of file ElementwiseUnaryLayer.cc.

```
513 {
515   {
517       affineQuantize<float, uint8_t>(_input, _output);
519       affineQuantize<float, int16_t>(_input, _output);
520     else
521       throw std::runtime_error{"Quantize: Unsupported data type"};
522   }
524            (_output->data_type() == OperandType::QUANT_INT8_ASYMM))
525   {
529                                                getBuffer<int8_t>(_output));
530   }
532            (_output->data_type() == OperandType::QUANT_UINT8_ASYMM))
533   {
537                                                getBuffer<uint8_t>(_output));
538   }
539   else
540   {
541     throw std::runtime_error{"Quantize: Unsupported data type"};
542   }
543 }
```

Symbols referenced in this listing:

- int MatchingFlatSize(const Dims< N > &dims, const Dims< N > &check_dims_0): defined at Dims.h:108
- int32_t data_zero_point() const override final: defined at IPortableTensor.h:56
- void Requantize< int8_t, uint8_t >(const int8_t *input_data, int32_t size, int32_t effective_scale_multiplier, int32_t effective_scale_shift, int32_t input_zeropoint, int32_t output_zeropoint, uint8_t *output_data): defined at Quantize.h:379
- void Requantize< uint8_t, int8_t >(const uint8_t *input_data, int32_t size, int32_t effective_scale_multiplier, int32_t effective_scale_shift, int32_t input_zeropoint, int32_t output_zeropoint, int8_t *output_data): defined at Quantize.h:311
- nnfw::cker::Shape getShape(const IPortableTensor *tensor): defined at OperationUtils.h:89

References onert::backend::IPortableTensor::data_type(), onert::backend::IPortableTensor::data_zero_point(), onert::backend::cpu::ops::getShape(), MatchingFlatSize(), nnfw::cker::Requantize< int8_t, uint8_t >(), and nnfw::cker::Requantize< uint8_t, int8_t >().
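run() dispatches on tensor types: float input goes through affineQuantize, and int8/uint8 pairs go through nnfw::cker::Requantize using the multiplier and shift precomputed in configure(). The reference sketch below spells out the underlying arithmetic in plain floating point. affineQuantizeSketch and requantizeSketch are hypothetical helpers, std::vector stands in for tensor buffers, and the rounding mode (std::round, half away from zero) is an assumption; the real cker kernels work in fixed point and may round differently.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <limits>
#include <vector>

// Affine quantization of float data: q = clamp(round(x / scale) + zero_point).
// Hypothetical reference for what affineQuantize<float, OutT> is assumed to compute.
template <typename OutT>
std::vector<OutT> affineQuantizeSketch(const std::vector<float> &input, float scale,
                                       int32_t zero_point)
{
  assert(scale > 0.0f);
  constexpr int32_t qmin = std::numeric_limits<OutT>::min();
  constexpr int32_t qmax = std::numeric_limits<OutT>::max();
  std::vector<OutT> output(input.size());
  for (size_t i = 0; i < input.size(); ++i)
  {
    const int32_t q = static_cast<int32_t>(std::round(input[i] / scale)) + zero_point;
    output[i] = static_cast<OutT>(std::min(std::max(q, qmin), qmax));
  }
  return output;
}

// Requantization between asymmetric 8-bit types (int8 <-> uint8), in double precision:
//   out = clamp(round((in - in_zp) * in_scale / out_scale) + out_zp)
// A fixed-point kernel would use the multiplier/shift pair from configure() instead.
template <typename InT, typename OutT>
std::vector<OutT> requantizeSketch(const std::vector<InT> &input, double in_scale, int32_t in_zp,
                                   double out_scale, int32_t out_zp)
{
  constexpr int32_t qmin = std::numeric_limits<OutT>::min();
  constexpr int32_t qmax = std::numeric_limits<OutT>::max();
  const double effective_scale = in_scale / out_scale;
  std::vector<OutT> output(input.size());
  for (size_t i = 0; i < input.size(); ++i)
  {
    const double scaled = (static_cast<int32_t>(input[i]) - in_zp) * effective_scale;
    const int32_t q = static_cast<int32_t>(std::round(scaled)) + out_zp;
    output[i] = static_cast<OutT>(std::min(std::max(q, qmin), qmax));
  }
  return output;
}
```

For instance, with scale 0.5 and zero point 128, the float 1.0 maps to the uint8 value 130. Doing the requantization in floating point keeps the formula readable; avoiding float math in the inner loop is presumably why configure() precomputes _output_multiplier and _output_shift.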

The documentation for this class was generated from the following files:

- runtime/onert/backend/cpu/ops/ElementwiseUnaryLayer.h

- runtime/onert/backend/cpu/ops/ElementwiseUnaryLayer.cc