#include <FullyConnectedLayer.h>

Public Member Functions | |

| FullyConnectedLayer () | |

| ~FullyConnectedLayer () | |

| void | fullyConnectedFloat32 () |

| void | fullyConnectedQuant8 () |

| void | fullyConnectedHybrid () |

| void | fullyConnectedSparseWeight () |

| void | fullyConnectedGGMLWeight () |

| void | fullyConnected16x1Float32 () |

| void | configure (const IPortableTensor *input, const IPortableTensor *weights, const IPortableTensor *bias, ir::Activation activation, ir::FullyConnectedWeightsFormat weights_format, IPortableTensor *output, const std::shared_ptr< ExternalContext > &external_context) |

| void | run () override |

| void | prepare () override |

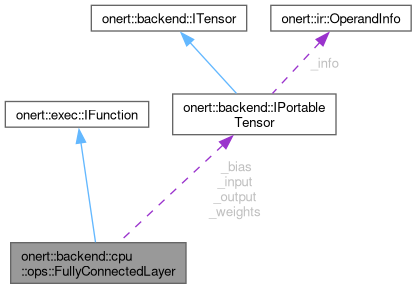

Public Member Functions inherited from onert::exec::IFunction Public Member Functions inherited from onert::exec::IFunction | |

| virtual | ~IFunction ()=default |

Protected Attributes | |

| const IPortableTensor * | _input |

| const IPortableTensor * | _weights |

| const IPortableTensor * | _bias |

| IPortableTensor * | _output |

| ir::Activation | _activation |

| std::unique_ptr< nnfw::cker::FCTempArena > | _temp_arena |

| std::shared_ptr< ExternalContext > | _external_context |

| bool | _is_hybrid: 1 |

| bool | _is_shuffled16x1float32: 1 |

Detailed Description

Definition at line 34 of file FullyConnectedLayer.h.

Constructor & Destructor Documentation

◆ FullyConnectedLayer()

| onert::backend::cpu::ops::FullyConnectedLayer::FullyConnectedLayer | ( | ) |

Definition at line 75 of file FullyConnectedLayer.cc.

◆ ~FullyConnectedLayer()

|

default |

Member Function Documentation

◆ configure()

| void onert::backend::cpu::ops::FullyConnectedLayer::configure | ( | const IPortableTensor * | input, |

| const IPortableTensor * | weights, | ||

| const IPortableTensor * | bias, | ||

| ir::Activation | activation, | ||

| ir::FullyConnectedWeightsFormat | weights_format, | ||

| IPortableTensor * | output, | ||

| const std::shared_ptr< ExternalContext > & | external_context | ||

| ) |

Definition at line 240 of file FullyConnectedLayer.cc.

References _activation, _bias, _external_context, _input, _is_hybrid, _is_shuffled16x1float32, _output, _weights, onert::backend::IPortableTensor::data_type(), and onert::ir::Shuffled16x1Float32.

◆ fullyConnected16x1Float32()

| void onert::backend::cpu::ops::FullyConnectedLayer::fullyConnected16x1Float32 | ( | ) |

Definition at line 222 of file FullyConnectedLayer.cc.

References _activation, _bias, _input, _output, _weights, nnfw::cker::FullyConnectedParams::activation, onert::util::CalculateActivationRange(), onert::backend::cpu::ops::convertActivationType(), and onert::backend::cpu::ops::getShape().

Referenced by run().

◆ fullyConnectedFloat32()

| void onert::backend::cpu::ops::FullyConnectedLayer::fullyConnectedFloat32 | ( | ) |

Definition at line 85 of file FullyConnectedLayer.cc.

References _activation, _bias, _input, _output, _weights, nnfw::cker::FullyConnectedParams::activation, onert::util::CalculateActivationRange(), onert::backend::cpu::ops::convertActivationType(), nnfw::cker::FullyConnectedParams::float_activation_max, nnfw::cker::FullyConnectedParams::float_activation_min, nnfw::cker::FullyConnected(), onert::backend::cpu::ops::getShape(), onert::backend::IPortableTensor::is_constant(), nnfw::cker::FullyConnectedParams::lhs_cacheable, and nnfw::cker::FullyConnectedParams::rhs_cacheable.

Referenced by run().

◆ fullyConnectedGGMLWeight()

| void onert::backend::cpu::ops::FullyConnectedLayer::fullyConnectedGGMLWeight | ( | ) |

◆ fullyConnectedHybrid()

| void onert::backend::cpu::ops::FullyConnectedLayer::fullyConnectedHybrid | ( | ) |

Definition at line 134 of file FullyConnectedLayer.cc.

References _activation, _bias, _external_context, _input, _output, _temp_arena, _weights, nnfw::cker::FullyConnectedParams::activation, onert::backend::cpu::ops::convertActivationType(), onert::backend::IPortableTensor::data_scale(), nnfw::cker::Shape::FlatSize(), nnfw::cker::FullyConnectedHybrid(), onert::backend::cpu::ops::getShape(), nnfw::cker::IsZeroVector(), nnfw::cker::FCTempArena::prepare(), nnfw::cker::FCTempArena::prepared, and nnfw::cker::FullyConnectedParams::weights_scale.

Referenced by run().

◆ fullyConnectedQuant8()

| void onert::backend::cpu::ops::FullyConnectedLayer::fullyConnectedQuant8 | ( | ) |

Definition at line 107 of file FullyConnectedLayer.cc.

References _activation, _bias, _input, _output, _weights, onert::backend::cpu::ops::CalculateActivationRangeQuantized(), onert::backend::IPortableTensor::data_zero_point(), nnfw::cker::FullyConnected(), onert::backend::cpu::ops::GetQuantizedConvolutionMultiplier(), onert::backend::cpu::ops::getShape(), nnfw::cker::FullyConnectedParams::input_offset, nnfw::cker::FullyConnectedParams::output_multiplier, nnfw::cker::FullyConnectedParams::output_offset, nnfw::cker::FullyConnectedParams::output_shift, nnfw::cker::FullyConnectedParams::quantized_activation_max, nnfw::cker::FullyConnectedParams::quantized_activation_min, onert::backend::cpu::ops::QuantizeMultiplier(), and nnfw::cker::FullyConnectedParams::weights_offset.

Referenced by run().

◆ fullyConnectedSparseWeight()

| void onert::backend::cpu::ops::FullyConnectedLayer::fullyConnectedSparseWeight | ( | ) |

Definition at line 195 of file FullyConnectedLayer.cc.

References _activation, _bias, _input, _output, _weights, nnfw::cker::FullyConnectedParams::activation, onert::ir::Sparsity::block_size(), onert::backend::cpu::ops::convertActivationType(), nnfw::cker::FullyConnectedSparseWeight16x1(), nnfw::cker::FullyConnectedSparseWeightRandom(), onert::backend::cpu::ops::getShape(), onert::backend::IPortableTensor::sparsity(), onert::ir::Sparsity::w1_indices(), and onert::ir::Sparsity::w1_segments().

Referenced by run().

◆ prepare()

|

overridevirtual |

Reimplemented from onert::exec::IFunction.

Definition at line 288 of file FullyConnectedLayer.cc.

References _bias, _input, _weights, onert::backend::ITensor::buffer(), onert::backend::IPortableTensor::data_type(), nnfw::cker::Shape::Dims(), nnfw::cker::Shape::FlatSize(), onert::backend::cpu::ops::getShape(), onert::backend::IPortableTensor::is_constant(), onert::backend::IPortableTensor::is_dynamic(), and nnfw::cker::IsZeroVector().

◆ run()

|

overridevirtual |

Implements onert::exec::IFunction.

Definition at line 264 of file FullyConnectedLayer.cc.

References _input, _is_hybrid, _is_shuffled16x1float32, _weights, onert::backend::IPortableTensor::data_type(), fullyConnected16x1Float32(), fullyConnectedFloat32(), fullyConnectedHybrid(), fullyConnectedQuant8(), fullyConnectedSparseWeight(), and onert::backend::IPortableTensor::sparsity().

Referenced by onert::backend::train::ops::FullyConnectedLayer::forward().

Field Documentation

◆ _activation

|

protected |

Definition at line 68 of file FullyConnectedLayer.h.

Referenced by configure(), fullyConnected16x1Float32(), fullyConnectedFloat32(), fullyConnectedHybrid(), fullyConnectedQuant8(), and fullyConnectedSparseWeight().

◆ _bias

|

protected |

Definition at line 65 of file FullyConnectedLayer.h.

Referenced by configure(), fullyConnected16x1Float32(), fullyConnectedFloat32(), fullyConnectedHybrid(), fullyConnectedQuant8(), fullyConnectedSparseWeight(), and prepare().

◆ _external_context

|

protected |

Definition at line 71 of file FullyConnectedLayer.h.

Referenced by configure(), and fullyConnectedHybrid().

◆ _input

|

protected |

Definition at line 63 of file FullyConnectedLayer.h.

Referenced by onert::backend::train::ops::FullyConnectedLayer::backward(), configure(), fullyConnected16x1Float32(), fullyConnectedFloat32(), fullyConnectedHybrid(), fullyConnectedQuant8(), fullyConnectedSparseWeight(), prepare(), and run().

◆ _is_hybrid

|

protected |

Definition at line 73 of file FullyConnectedLayer.h.

Referenced by configure(), and run().

◆ _is_shuffled16x1float32

|

protected |

Definition at line 74 of file FullyConnectedLayer.h.

Referenced by configure(), and run().

◆ _output

|

protected |

Definition at line 66 of file FullyConnectedLayer.h.

Referenced by configure(), fullyConnected16x1Float32(), fullyConnectedFloat32(), fullyConnectedHybrid(), fullyConnectedQuant8(), and fullyConnectedSparseWeight().

◆ _temp_arena

|

protected |

Definition at line 69 of file FullyConnectedLayer.h.

Referenced by fullyConnectedHybrid().

◆ _weights

|

protected |

Definition at line 64 of file FullyConnectedLayer.h.

Referenced by configure(), fullyConnected16x1Float32(), fullyConnectedFloat32(), fullyConnectedHybrid(), fullyConnectedQuant8(), fullyConnectedSparseWeight(), prepare(), and run().

The documentation for this class was generated from the following files:

- runtime/onert/backend/cpu/ops/FullyConnectedLayer.h

- runtime/onert/backend/cpu/ops/FullyConnectedLayer.cc