|

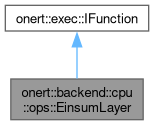

ONE - On-device Neural Engine

#include <EinsumLayer.h>

Public Member Functions

| Return type | Member |
| --- | --- |
| | EinsumLayer () |
| | ~EinsumLayer () |
| void | einsumFloat32 () |
| void | configure (const std::vector< const IPortableTensor * > &inputs, std::string equation, IPortableTensor *output) |
| void | run () override |

Public Member Functions inherited from onert::exec::IFunction

| Return type | Member |
| --- | --- |
| virtual | ~IFunction ()=default |
| virtual void | prepare () |

Definition at line 35 of file EinsumLayer.h.

onert::backend::cpu::ops::EinsumLayer::EinsumLayer ()

Definition at line 24 of file EinsumLayer.cc.

onert::backend::cpu::ops::EinsumLayer::~EinsumLayer () [default]

void onert::backend::cpu::ops::EinsumLayer::configure (const std::vector< const IPortableTensor * > & inputs, std::string equation, IPortableTensor * output)

Definition at line 63 of file EinsumLayer.cc.
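
The `equation` argument is passed as a string. Assuming it follows the conventional Einstein-summation notation (for example `ij,jk->ik`), the sketch below shows what such an equation computes. It is self-contained and does not use any ONE headers; `einsumIjJkToIk` is a hypothetical helper introduced only for illustration, not part of the EinsumLayer API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of ONE): evaluates the equation "ij,jk->ik"
// on row-major float buffers. The index j appears in both inputs but not in
// the output, so it is summed over; i and k are kept as output axes.
std::vector<float> einsumIjJkToIk(const std::vector<float> &a, const std::vector<float> &b,
                                  std::size_t I, std::size_t J, std::size_t K)
{
  // The buffers must match the shapes implied by the equation.
  assert(a.size() == I * J && b.size() == J * K);

  std::vector<float> out(I * K, 0.0f);
  for (std::size_t i = 0; i < I; ++i)
    for (std::size_t j = 0; j < J; ++j)
      for (std::size_t k = 0; k < K; ++k)
        out[i * K + k] += a[i * J + j] * b[j * K + k];
  return out;
}
```

For this particular equation the result is an ordinary matrix product of an I x J matrix with a J x K matrix.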

void onert::backend::cpu::ops::EinsumLayer::einsumFloat32 ()

Definition at line 32 of file EinsumLayer.cc.

References onert::backend::cpu::ops::getShape(), and nnfw::cker::Einsum::prepare().

Referenced by run().

void onert::backend::cpu::ops::EinsumLayer::run () [override, virtual]

Implements onert::exec::IFunction.

Definition at line 51 of file EinsumLayer.cc.

References onert::backend::IPortableTensor::data_type(), and einsumFloat32().
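
The references above suggest that `run()` inspects a tensor's data type and then calls `einsumFloat32()`. The following is a minimal sketch of that dispatch pattern under stated assumptions: `DataType`, `IFunctionSketch`, and `EinsumLayerSketch` are stand-in types defined here, not the ONE classes, and throwing on any non-float32 type is an assumption rather than documented behaviour.

```cpp
#include <stdexcept>

// Stand-in for the runtime's data type enumeration (assumption).
enum class DataType { FLOAT32, INT32 };

// Stand-in for onert::exec::IFunction: run() is pure virtual, prepare()
// has an empty default body, and the destructor is virtual and defaulted.
struct IFunctionSketch
{
  virtual ~IFunctionSketch() = default;
  virtual void run() = 0;
  virtual void prepare() {}
};

// Stand-in for EinsumLayer, reduced to the type dispatch in run().
class EinsumLayerSketch : public IFunctionSketch
{
public:
  explicit EinsumLayerSketch(DataType type) : _type(type) {}

  // The real kernel would evaluate the equation; here we only count calls.
  void einsumFloat32() { ++_float32_calls; }

  void run() override
  {
    if (_type == DataType::FLOAT32)
      einsumFloat32();
    else
      throw std::runtime_error("Einsum: unsupported data type"); // assumed behaviour
  }

  int float32Calls() const { return _float32_calls; }

private:
  DataType _type;
  int _float32_calls = 0;
};
```

Keeping the type check in `run()` and the computation in a type-specific method leaves room for further element types to be added as additional branches.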