Loading...

Searching...

No Matches

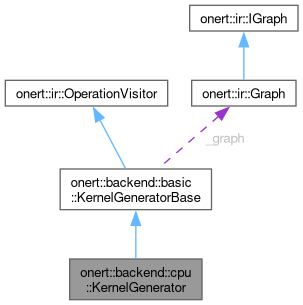

onert::backend::cpu::KernelGenerator Class Reference

#include <KernelGenerator.h>

Public Member Functions

| Type / qualifier | Member |
| --- | --- |
| | KernelGenerator (const ir::Graph &graph, const std::shared_ptr< TensorBuilder > &tensor_builder, const std::shared_ptr< basic::TensorRegistry > &tensor_reg, const std::shared_ptr< ExternalContext > &external_context) |
| std::unique_ptr< exec::FunctionSequence > | generate (ir::OperationIndex op_ind) override |

Public Member Functions inherited from onert::backend::basic::KernelGeneratorBase

| Type / qualifier | Member |
| --- | --- |
| virtual | ~KernelGeneratorBase ()=default |
| | KernelGeneratorBase (const ir::Graph &graph) |

Public Member Functions inherited from onert::ir::OperationVisitor

| Type / qualifier | Member |
| --- | --- |
| virtual | ~OperationVisitor ()=default |

Additional Inherited Members

Protected Member Functions inherited from onert::backend::basic::KernelGeneratorBase

| Type / qualifier | Member |
| --- | --- |
| std::unique_ptr< exec::IFunction > | releaseFunction () |

Protected Attributes inherited from onert::backend::basic::KernelGeneratorBase

| Type / qualifier | Member |
| --- | --- |
| const ir::Graph & | _graph |
| std::unique_ptr< exec::IFunction > | _return_fn |

Detailed Description

Definition at line 32 of file KernelGenerator.h.

Constructor & Destructor Documentation

◆ KernelGenerator()

    onert::backend::cpu::KernelGenerator::KernelGenerator (
        const ir::Graph &graph,
        const std::shared_ptr< TensorBuilder > &tensor_builder,
        const std::shared_ptr< basic::TensorRegistry > &tensor_reg,
        const std::shared_ptr< ExternalContext > &external_context
    )

Definition at line 27 of file KernelGenerator.cc.

Member Function Documentation

◆ generate()

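    std::unique_ptr< exec::FunctionSequence > onert::backend::cpu::KernelGenerator::generate (ir::OperationIndex op_ind)

Qualifiers: override, virtual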

Implements onert::backend::basic::KernelGeneratorBase.

Definition at line 37 of file KernelGenerator.cc.

    {
      auto ret = std::make_unique<exec::FunctionSequence>();

      assert(_tensor_builder->dynamicTensorManager());
      assert(_tensor_reg);

      // Prepare to handle dynamic tensors later
      auto dyn_ctx = std::make_shared<exec::FunctionSequence::DynamicTensorCtx>();
      {
        dyn_ctx->op = &_operations_ctx.at(ind);
        dyn_ctx->dynamic_shape_inferer = std::make_shared<exec::DynamicShapeInferer>(_tensor_reg);
      }
      ret->dynamic_tensor_ctx(dyn_ctx);

      // ... (source line 52 is missing from this listing)
      op.accept(*this);
      // ... (source line 54 is missing from this listing)
      ret->append(std::move(_return_fn));

      // ... (source line 57 is missing from this listing)
      {
        // ... (source line 59 is missing from this listing)
        if (tensor)
        {
          tensor->increase_ref();
        }
      }
      return ret;
    }
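The core of the listing is a visitor dispatch: `op.accept(*this)` calls back into the generator, the matching visit method stores a kernel in `_return_fn`, and `generate()` then moves that kernel into the returned sequence. The following is a minimal, self-contained sketch of that pattern only. Every type in it (`Function`, `FunctionSequence`, `Visitor`, `Operation`, `Add`, `Relu`, `ToyKernelGenerator`) is a hypothetical stand-in, not the onert API, and the graph is simplified to a vector of operations.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-ins; none of these are the real onert types.
struct Function {
  virtual ~Function() = default;
  virtual std::string name() const = 0;
};

struct FunctionSequence {
  std::vector<std::unique_ptr<Function>> fns;
  void append(std::unique_ptr<Function> fn) { fns.push_back(std::move(fn)); }
};

struct Add;
struct Relu;

struct Visitor {
  virtual ~Visitor() = default;
  virtual void visit(const Add &) = 0;
  virtual void visit(const Relu &) = 0;
};

struct Operation {
  virtual ~Operation() = default;
  virtual void accept(Visitor &v) const = 0;
};

struct Add : Operation {
  void accept(Visitor &v) const override { v.visit(*this); }
};
struct Relu : Operation {
  void accept(Visitor &v) const override { v.visit(*this); }
};

struct AddKernel : Function {
  std::string name() const override { return "AddKernel"; }
};
struct ReluKernel : Function {
  std::string name() const override { return "ReluKernel"; }
};

class ToyKernelGenerator : public Visitor {
public:
  explicit ToyKernelGenerator(const std::vector<std::unique_ptr<Operation>> &graph)
    : _graph(graph) {}

  std::unique_ptr<FunctionSequence> generate(std::size_t ind) {
    auto ret = std::make_unique<FunctionSequence>();
    const Operation &op = *_graph.at(ind);
    op.accept(*this);                   // double dispatch picks the matching visit()
    assert(_return_fn);                 // the visit() call must have produced a kernel
    ret->append(std::move(_return_fn)); // hand off; _return_fn is empty again afterwards
    return ret;
  }

  void visit(const Add &) override { _return_fn = std::make_unique<AddKernel>(); }
  void visit(const Relu &) override { _return_fn = std::make_unique<ReluKernel>(); }

private:
  const std::vector<std::unique_ptr<Operation>> &_graph;
  std::unique_ptr<Function> _return_fn;
};

// Builds a two-operation toy graph and returns the kernel name generated for `ind`.
std::string kernel_name_for(std::size_t ind) {
  std::vector<std::unique_ptr<Operation>> graph;
  graph.push_back(std::make_unique<Add>());
  graph.push_back(std::make_unique<Relu>());
  ToyKernelGenerator gen(graph);
  auto seq = gen.generate(ind);
  return seq->fns.front()->name();
}
```

Keeping the result in a member rather than a return value lets every visit method share one `void` signature, which is why the generator has to move the kernel out after each dispatch.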

Symbols referenced in the listing above:

- std::unique_ptr< exec::IFunction > _return_fn: defined at KernelGeneratorBase.h:60
- const ir::Graph & _graph: defined at KernelGeneratorBase.h:59
- const Object & at(const Index &index) const: gets the object that is associated with the given index; defined at ObjectManager.h:119
- UNDEFINED (enumerator)

References onert::backend::basic::KernelGeneratorBase::_graph, onert::backend::basic::KernelGeneratorBase::_return_fn, onert::util::ObjectManager< Index, Object >::at(), onert::ir::Graph::operations(), and onert::ir::UNDEFINED.
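The tail of `generate()` bumps a reference count on each tensor it finds, guarded by a null check. The loop header and the tensor lookup are missing from the listing, so the sketch below rests on two assumptions: that the loop walks the operand indices of the operation, and that the lookup yields a null pointer for operands the registry does not hold. `ToyTensor`, `ToyTensorRegistry`, `find`, `num_references` and `add_references` are hypothetical names, not onert identifiers; only `increase_ref` mirrors a call visible in the listing.

```cpp
#include <map>
#include <memory>
#include <vector>

// Hypothetical stand-in for a backend tensor with a manual reference count.
class ToyTensor {
public:
  void increase_ref() { ++_refs; }
  int num_references() const { return _refs; }

private:
  int _refs = 0;
};

// Hypothetical registry: find() returns nullptr for operands it does not hold.
class ToyTensorRegistry {
public:
  void add(int operand_index) { _tensors[operand_index] = std::make_unique<ToyTensor>(); }

  ToyTensor *find(int operand_index) const {
    auto it = _tensors.find(operand_index);
    return it == _tensors.end() ? nullptr : it->second.get();
  }

private:
  std::map<int, std::unique_ptr<ToyTensor>> _tensors;
};

// Assumed shape of the loop: one reference per operand that resolves to a tensor.
void add_references(const ToyTensorRegistry &reg, const std::vector<int> &operands) {
  for (int ind : operands) {
    ToyTensor *tensor = reg.find(ind);
    if (tensor) {
      tensor->increase_ref();
    }
  }
}

// Two toy operations share operand 1; operand 7 is unknown to the registry.
int demo_ref_count(int operand) {
  ToyTensorRegistry reg;
  reg.add(0);
  reg.add(1);
  add_references(reg, {0, 1, 7}); // first operation; operand 7 is skipped
  add_references(reg, {1});       // second operation reuses operand 1
  return reg.find(operand)->num_references();
}
```

Under these assumptions a tensor used by two operations ends with a count of two, and the null check lets operands without a registered tensor pass through silently.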

The documentation for this class was generated from the following files:

- runtime/onert/backend/cpu/KernelGenerator.h

- runtime/onert/backend/cpu/KernelGenerator.cc