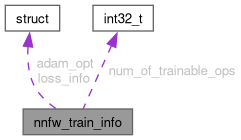

Training information to prepare training.

#include <onert-micro.h>

Data Fields

| Type | Field |
| --- | --- |
| float | learning_rate = 0.001f |
| uint32_t | batch_size = 1 |
| nnfw_loss_info | loss_info |
| NNFW_TRAIN_OPTIMIZER | opt = NNFW_TRAIN_OPTIMIZER_ADAM |
| uint32_t | num_trainble_ops = 0 |
| nnfw_adam_option | adam_opt |
| int32_t | num_of_trainable_ops = NNFW_TRAIN_TRAINABLE_NONE |

Detailed Description

Training information to prepare training.

Definition at line 186 of file onert-micro.h.
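As a rough illustration of how the scalar fields and their defaults fit together, the sketch below uses a stand-in struct rather than the real header. `sketch_train_info`, `sketch_optimizer` and `make_train_info` are hypothetical names introduced only for this example; real code would include `onert-micro.h` and use `nnfw_train_info` directly. The loss and Adam option fields are left out because their layouts are not shown on this page.

```cpp
#include <cstdint>

// Stand-in for NNFW_TRAIN_OPTIMIZER (assumption: only the ADAM value is
// named on this page, so it is the only value mirrored here).
enum sketch_optimizer
{
  SKETCH_OPTIMIZER_ADAM
};

// Stand-in mirroring the scalar fields and defaults listed under Data Fields.
struct sketch_train_info
{
  float learning_rate = 0.001f;
  std::uint32_t batch_size = 1;
  sketch_optimizer opt = SKETCH_OPTIMIZER_ADAM;
  std::uint32_t num_trainble_ops = 0; // spelling follows the documented field name
};

// Hypothetical helper: start from the defaults and override only what is needed.
sketch_train_info make_train_info(float learning_rate, std::uint32_t batch_size,
                                  std::uint32_t trainable_ops)
{
  sketch_train_info info; // default member initializers apply here
  info.learning_rate = learning_rate;
  info.batch_size = batch_size;
  info.num_trainble_ops = trainable_ops;
  return info;
}
```

Calling `make_train_info(0.01f, 32, 2)` in this sketch leaves `opt` at its Adam default and changes only the three values passed in.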

Field Documentation

◆ adam_opt

| nnfw_adam_option nnfw_train_info::adam_opt |

Definition at line 200 of file onert-micro.h.

◆ batch_size

| uint32_t nnfw_train_info::batch_size = 1 |

Batch size

Definition at line 191 of file onert-micro.h.

Referenced by onert.experimental.train.dataloader.DataLoader::__init__(), bind_nnfw_train_info(), and onert.experimental.train.dataloader.DataLoader::split().

◆ learning_rate

| float nnfw_train_info::learning_rate = 0.001f |

Learning rate

Definition at line 189 of file onert-micro.h.

Referenced by onert.experimental.train.optimizer.optimizer.Optimizer::__init__(), and bind_nnfw_train_info().

◆ loss_info

| nnfw_loss_info nnfw_train_info::loss_info |

Loss info.

Note that you do not need to check whether your model already ends with softmax when you use NNFW_TRAIN_LOSS_CATEGORICAL_CROSSENTROPY. With this loss, the prediction passed to the loss is always the result of applying softmax exactly once, regardless of whether the model output is already a softmax result.

Definition at line 193 of file onert-micro.h.

Referenced by bind_nnfw_train_info().
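The "softmax exactly once" behavior described for `loss_info` can be pictured with the following minimal sketch. It is not the runtime's implementation: the function names and the `output_is_softmax` flag are assumptions made for illustration, and how the runtime actually detects a trailing softmax is not described on this page.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable softmax over a vector of logits.
std::vector<float> sketch_softmax(const std::vector<float> &logits)
{
  std::vector<float> probs(logits.size());
  if (logits.empty())
    return probs;
  float max_logit = logits[0];
  for (float v : logits)
    if (v > max_logit)
      max_logit = v;
  float sum = 0.0f;
  for (std::size_t i = 0; i < logits.size(); ++i)
  {
    probs[i] = std::exp(logits[i] - max_logit); // shift by max to avoid overflow
    sum += probs[i];
  }
  for (float &p : probs)
    p /= sum;
  return probs;
}

// Categorical cross-entropy that applies softmax only when the model output
// has not been through softmax already (hypothetical flag).
float sketch_categorical_crossentropy(const std::vector<float> &model_output,
                                      const std::vector<float> &one_hot_target,
                                      bool output_is_softmax)
{
  const std::vector<float> probs =
    output_is_softmax ? model_output : sketch_softmax(model_output);
  const float eps = 1e-7f; // guards against log(0)
  float loss = 0.0f;
  for (std::size_t i = 0; i < probs.size() && i < one_hot_target.size(); ++i)
    loss -= one_hot_target[i] * std::log(probs[i] + eps);
  return loss;
}
```

In this sketch, passing raw logits with `output_is_softmax = false` and passing their softmax with `output_is_softmax = true` yield the same loss, which is the property the note describes.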

◆ num_of_trainable_ops

| int32_t nnfw_train_info::num_of_trainable_ops = NNFW_TRAIN_TRAINABLE_NONE |

Number of layers to be trained, counted from the back of the graph. Some values have a special meaning: "-1" means that all layers will be trained, and "0" means that no layer will be trained. Any negative value less than -1 is an error. The special values are collected in the NNFW_TRAIN_NUM_OF_TRAINABLE_OPS_SPECIAL_VALUES enum.

Definition at line 335 of file nnfw_experimental.h.

Referenced by bind_nnfw_train_info().
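The special values of `num_of_trainable_ops` can be read as in the sketch below. The constants and the `sketch_resolve_trainable_ops` function are hypothetical stand-ins, not part of the API; the real special values live in the NNFW_TRAIN_NUM_OF_TRAINABLE_OPS_SPECIAL_VALUES enum. Clamping a request larger than the graph is an assumption of this sketch, since the page does not say how that case is handled.

```cpp
#include <cstdint>

// Hypothetical mirrors of the special values described above.
constexpr std::int32_t SKETCH_TRAINABLE_ALL = -1;
constexpr std::int32_t SKETCH_TRAINABLE_NONE = 0;
constexpr std::int32_t SKETCH_TRAINABLE_ERROR = -2; // sketch-only error code

// Returns how many ops, counted from the back of the graph, would be trained,
// or SKETCH_TRAINABLE_ERROR when the request is invalid.
// total_ops is assumed to be non-negative.
std::int32_t sketch_resolve_trainable_ops(std::int32_t requested, std::int32_t total_ops)
{
  if (requested < SKETCH_TRAINABLE_ALL)
    return SKETCH_TRAINABLE_ERROR; // values below -1 are errors
  if (requested == SKETCH_TRAINABLE_ALL)
    return total_ops; // -1 selects every layer
  if (requested == SKETCH_TRAINABLE_NONE)
    return 0; // 0 selects no layer
  // Assumption: a request larger than the graph is clamped to the graph size.
  return requested > total_ops ? total_ops : requested;
}
```

For a graph with 10 ops, a request of 3 would train the last 3 ops in this sketch, while -1 would train all 10.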

◆ num_trainble_ops

| uint32_t nnfw_train_info::num_trainble_ops = 0 |

Definition at line 198 of file onert-micro.h.

◆ opt

| NNFW_TRAIN_OPTIMIZER nnfw_train_info::opt = NNFW_TRAIN_OPTIMIZER_ADAM |

Optimizer type

Definition at line 196 of file onert-micro.h.

Referenced by bind_nnfw_train_info(), and CfgRunner.CfgRunner::run().

The documentation for this struct was generated from the following files:

- onert-micro/onert-micro/include/onert-micro.h

- runtime/onert/api/nnfw/include/nnfw_experimental.h