Public Member Functions

| None | __init__ (self, str path, str backends="cpu") |

| Union[List[np.ndarray], Tuple[List[np.ndarray], Dict[str, float]]] | infer (self, List[np.ndarray] inputs_array, *, bool measure=False) |

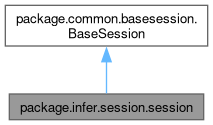

Public Member Functions inherited from package.common.basesession.BaseSession

| | __getattr__ (self, name) |

| List[tensorinfo] | get_inputs_tensorinfo (self) |

| List[tensorinfo] | get_outputs_tensorinfo (self) |

| | set_inputs (self, size, inputs_array=[]) |

Data Fields

| | outputs |

Data Fields inherited from package.common.basesession.BaseSession

| | session |

| | inputs |

| | outputs |

Protected Member Functions

| None | _update_inputs_tensorinfo (self, List[tensorinfo] new_infos) |

| | _time_block (self, Dict[str, float] metrics, str key, bool measure) |

Protected Member Functions inherited from package.common.basesession.BaseSession

| | _recreate_session (self, backend_session) |

| | _set_outputs (self, size) |

Protected Attributes

| | _prepared |

Detailed Description

Class for inference using nnfw_session.

Definition at line 12 of file session.py.

Constructor & Destructor Documentation

◆ __init__()

| None package.infer.session.session.__init__ (self, str path, str backends = "cpu") |

Initialize the inference session.

Args:

path (str): Path to the model file or nnpackage directory.

backends (str): Backends to use, default is "cpu".

Reimplemented from package.common.basesession.BaseSession.

Definition at line 16 of file session.py.

References package.infer.session.session.__init__() and package.infer.session.session._prepared.

Referenced by package.infer.session.session.__init__().

Member Function Documentation

◆ _time_block()

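| package.infer.session.session._time_block (self, Dict[str, float] metrics, str key, bool measure) | protected |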

Definition at line 167 of file session.py.

Referenced by package.infer.session.session.infer().
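No description is given for _time_block(). Because infer() calls it and, with measure=True, reports 'prepare_time_ms', 'io_time_ms', and 'run_time_ms', it plausibly times one stage and stores the elapsed milliseconds in metrics[key]. The sketch below is a hypothetical reimplementation under that assumption, written as a contextlib context manager; the name time_block and the use of time.perf_counter() are choices made here, not taken from session.py.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


@contextmanager
def time_block(metrics: Dict[str, float], key: str, measure: bool) -> Iterator[None]:
    """Hypothetical stand-in for session._time_block().

    Times the body of a with-statement and stores the elapsed time in
    milliseconds under metrics[key], but only when measure is True.
    """
    if not measure:
        # Timing disabled: run the block without recording anything.
        yield
        return
    start = time.perf_counter()
    try:
        yield
    finally:
        # Record the elapsed time even if the timed block raised.
        metrics[key] = (time.perf_counter() - start) * 1000.0


metrics: Dict[str, float] = {}
with time_block(metrics, "run_time_ms", measure=True):
    total = sum(range(100_000))  # stand-in for the timed work
with time_block(metrics, "io_time_ms", measure=False):
    pass  # not recorded because measure is False
print(sorted(metrics))  # ['run_time_ms']
```

Recording the time in a finally clause keeps the measurement even when the timed block raises.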

◆ _update_inputs_tensorinfo()

| None package.infer.session.session._update_inputs_tensorinfo (self, List[tensorinfo] new_infos) | protected |

Update all input tensors' tensorinfo at once.

Args:

new_infos (list[tensorinfo]): A list of updated tensorinfo objects for the inputs.

Raises:

ValueError: If the number of new_infos does not match the session's input size, or if any tensorinfo contains a negative dimension.

OnertError: If the underlying C-API call fails.

Definition at line 134 of file session.py.

References package.common.basesession.BaseSession.session.

Referenced by package.infer.session.session.infer().
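The checks listed under Raises can be illustrated with a self-contained sketch that validates every new tensorinfo before applying any of them. TensorInfo, OnertError, update_inputs_tensorinfo, and the set_input_tensorinfo callback (assumed here to return 0 on success) are hypothetical stand-ins; the real tensorinfo type and C-API binding are not documented on this page.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


class OnertError(RuntimeError):
    """Hypothetical stand-in for the package's OnertError."""


@dataclass
class TensorInfo:
    """Hypothetical stand-in for tensorinfo: a dtype name and a shape."""
    dtype: str
    dims: List[int] = field(default_factory=list)


def update_inputs_tensorinfo(
    input_size: int,
    new_infos: List[TensorInfo],
    set_input_tensorinfo: Callable[[int, TensorInfo], int],
) -> None:
    """Validate all new tensorinfo objects, then apply them input by input."""
    if len(new_infos) != input_size:
        raise ValueError(
            f"expected {input_size} tensorinfo objects, got {len(new_infos)}")
    for i, info in enumerate(new_infos):
        if any(d < 0 for d in info.dims):
            raise ValueError(f"input {i} has a negative dimension: {info.dims}")
    for i, info in enumerate(new_infos):
        # Hypothetical C-API wrapper; this sketch assumes 0 means success.
        status = set_input_tensorinfo(i, info)
        if status != 0:
            raise OnertError(f"setting tensorinfo for input {i} failed (status {status})")


applied: Dict[int, List[int]] = {}


def fake_set(index: int, info: TensorInfo) -> int:
    # Hypothetical callback that records the dims it was given.
    applied[index] = list(info.dims)
    return 0


update_inputs_tensorinfo(
    2, [TensorInfo("float32", [1, 3]), TensorInfo("float32", [1, 5])], fake_set)
print(applied)  # {0: [1, 3], 1: [1, 5]}
```

Validating up front is a design choice of this sketch: it avoids leaving some inputs updated and others not when a later entry is invalid.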

◆ infer()

| Union[List[np.ndarray], Tuple[List[np.ndarray], Dict[str, float]]] package.infer.session.session.infer (self, List[np.ndarray] inputs_array, *, bool measure = False) |

Run a complete inference cycle:

- If the session has not been prepared or outputs have not been set, call prepare().

- Automatically configure input buffers based on the provided numpy arrays.

- Execute the inference session.

- Return the output tensors with proper multi-dimensional shapes.

This method supports dynamic shapes: the input shapes may change from one call to the next.

Args:

inputs_array (list[np.ndarray]): List of numpy arrays representing the input data.

measure (bool): If True, also measure the prepare, I/O, and run latencies in milliseconds.

Returns:

list[np.ndarray]: The output arrays, when measure is False.

(outputs, metrics): When measure is True, a tuple of the output list and a dict with the keys 'prepare_time_ms', 'io_time_ms', and 'run_time_ms'.

Definition at line 27 of file session.py.

References package.infer.session.session._prepared, package.infer.session.session._time_block(), package.infer.session.session._update_inputs_tensorinfo(), package.common.basesession.BaseSession.get_inputs_tensorinfo(), and package.common.basesession.BaseSession.session.
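The inference cycle described for infer() can be sketched with an in-memory stand-in. FakeBackend, SketchSession, and the doubling "model" are hypothetical and do not mirror the onert C-API; the sketch also skips the check on whether outputs have been set. Only the lazy prepare() call, the per-call input shape update, the metric keys, and the list-or-tuple return convention follow the docstring.

```python
import time
from typing import Dict, List, Tuple, Union

import numpy as np


class FakeBackend:
    """Hypothetical backend whose 'model' doubles every input."""

    def __init__(self) -> None:
        self.input_shapes: List[Tuple[int, ...]] = []
        self.prepare_calls = 0

    def prepare(self) -> None:
        self.prepare_calls += 1

    def set_input_shapes(self, shapes: List[Tuple[int, ...]]) -> None:
        self.input_shapes = shapes

    def run(self, inputs: List[np.ndarray]) -> List[np.ndarray]:
        return [x * 2 for x in inputs]


class SketchSession:
    """Minimal sketch of the infer() cycle; not the real session class."""

    def __init__(self) -> None:
        self.backend = FakeBackend()
        self._prepared = False

    def infer(
        self, inputs_array: List[np.ndarray], *, measure: bool = False
    ) -> Union[List[np.ndarray], Tuple[List[np.ndarray], Dict[str, float]]]:
        t0 = time.perf_counter()
        # Prepare lazily, only on the first call.
        if not self._prepared:
            self.backend.prepare()
            self._prepared = True
        t1 = time.perf_counter()

        # Configure inputs from the arrays, recording new shapes if they changed.
        shapes = [a.shape for a in inputs_array]
        if shapes != self.backend.input_shapes:
            self.backend.set_input_shapes(shapes)
        buffers = [np.ascontiguousarray(a) for a in inputs_array]
        t2 = time.perf_counter()

        outputs = self.backend.run(buffers)
        t3 = time.perf_counter()

        if not measure:
            return outputs
        metrics = {
            "prepare_time_ms": (t1 - t0) * 1000.0,
            "io_time_ms": (t2 - t1) * 1000.0,
            "run_time_ms": (t3 - t2) * 1000.0,
        }
        return outputs, metrics


sess = SketchSession()
out = sess.infer([np.ones((1, 3), dtype=np.float32)])
out2, metrics = sess.infer([np.ones((2, 3), dtype=np.float32)], measure=True)
print(out[0].shape, out2[0].shape, sorted(metrics))
# (1, 3) (2, 3) ['io_time_ms', 'prepare_time_ms', 'run_time_ms']
```

Because prepare() runs only on the first call, prepare_calls stays at 1 while the second call's new (2, 3) input shape is simply recorded.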

Field Documentation

◆ _prepared

| package.infer.session.session._prepared | protected |

Definition at line 99 of file session.py.

Referenced by package.infer.session.session.__init__(), and package.infer.session.session.infer().

◆ outputs

| package.infer.session.session.outputs |

Definition at line 132 of file session.py.

Referenced by package.common.basesession.BaseSession._set_outputs().

The documentation for this class was generated from the following file:

- runtime/onert/api/python/package/infer/session.py