#include <UseDefGenerator.h>

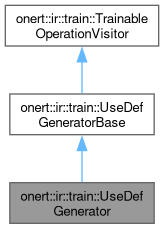

Detailed Description

Definition at line 47 of file UseDefGenerator.h.

Constructor & Destructor Documentation

◆ UseDefGenerator() [1/2]

Declared `= delete`.

◆ UseDefGenerator() [2/2]

| onert::ir::train::UseDefGenerator::UseDefGenerator | ( | const TrainableGraph & | tgraph | ) |

Definition at line 31 of file UseDefGenerator.cc.

References onert::ir::train::TrainableGraph::operation() and onert::ir::train::TrainableGraph::topolSortOperations().

Member Function Documentation

◆ operator()()

| UseDefChains onert::ir::train::UseDefGenerator::operator() | ( | ) |

Definition at line 50 of file UseDefGenerator.cc.

References onert::ir::train::TrainableGraph::graph().
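The constructor and `operator()()` together suggest a function-object pattern: build the generator from a graph, then call it to obtain the chains. The sketch below illustrates only that calling shape. Every type in the `sketch` namespace (`Graph`, `Operation`, `UseDefChain`, `Generator`) is a hypothetical stand-in, not an onert type, and the chain-building logic is a simplified forward-only assumption; the real class dispatches per operation through its `visit()` overloads.

```cpp
#include <cstddef>
#include <map>
#include <set>
#include <utility>
#include <vector>

// Hypothetical stand-ins for illustration only; these are NOT the onert types.
namespace sketch
{
using OperandIndex = int;
using OperationIndex = int;

struct Operation
{
  std::vector<OperandIndex> inputs;
  std::vector<OperandIndex> outputs;
};

struct UseDefChain
{
  std::set<OperationIndex> defs; // operations that write the operand
  std::set<OperationIndex> uses; // operations that read the operand
};

using UseDefChains = std::map<OperandIndex, UseDefChain>;

class Graph
{
public:
  OperationIndex add(Operation op)
  {
    _ops.push_back(std::move(op));
    return static_cast<OperationIndex>(_ops.size() - 1);
  }

  const Operation &operation(OperationIndex index) const { return _ops.at(index); }

  // Assumption: insertion order is already a valid topological order.
  std::vector<OperationIndex> topolSortOperations() const
  {
    std::vector<OperationIndex> order;
    for (std::size_t i = 0; i < _ops.size(); ++i)
      order.push_back(static_cast<OperationIndex>(i));
    return order;
  }

private:
  std::vector<Operation> _ops;
};

class Generator
{
public:
  Generator() = delete; // mirrors the deleted overload listed above
  explicit Generator(const Graph &graph)
    : _graph(graph), _order(graph.topolSortOperations())
  {
  }

  // Walk the operations in sorted order and record who reads and writes each operand.
  UseDefChains operator()()
  {
    UseDefChains chains;
    for (OperationIndex index : _order)
    {
      const Operation &op = _graph.operation(index);
      for (OperandIndex in : op.inputs)
        chains[in].uses.insert(index);
      for (OperandIndex out : op.outputs)
        chains[out].defs.insert(index);
    }
    return chains;
  }

private:
  const Graph &_graph;
  std::vector<OperationIndex> _order;
};
} // namespace sketch
```

With these stand-ins, a caller would write `sketch::Generator{graph}()` to get the chains in a single expression. The generator holds the graph by reference, so the graph must outlive it.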

◆ visit() [1/11]

Declared `override`.

Definition at line 70 of file UseDefGenerator.cc.

References onert::ir::operation::BinaryArithmetic::Param::activation, onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getOutputs(), onert::ir::Operation::getUsedInputSet(), onert::ir::NONE, and onert::ir::operation::BinaryArithmetic::param().

◆ visit() [2/11]

Declared `override`.

Definition at line 96 of file UseDefGenerator.cc.

References onert::ir::operation::Conv2D::Param::activation, onert::ir::OperandIndexSequence::at(), onert::ir::operation::Conv2D::BIAS, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::Conv2D::INPUT, onert::ir::operation::Conv2D::KERNEL, onert::ir::NONE, and onert::ir::operation::Conv2D::param().

◆ visit() [3/11]

Declared `override`.

Definition at line 134 of file UseDefGenerator.cc.

References onert::ir::operation::DepthwiseConv2D::Param::activation, onert::ir::OperandIndexSequence::at(), onert::ir::operation::Conv2D::BIAS, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::DepthwiseConv2D::INPUT, onert::ir::operation::DepthwiseConv2D::KERNEL, onert::ir::NONE, and onert::ir::operation::DepthwiseConv2D::param().

◆ visit() [4/11]

Declared `override`.

Definition at line 172 of file UseDefGenerator.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getOutputs(), onert::ir::Operation::getUsedInputSet(), onert::ir::operation::ElementwiseActivation::Param::op_type, onert::ir::operation::ElementwiseActivation::param(), and onert::ir::operation::ElementwiseActivation::RELU.

◆ visit() [5/11]

Declared `override`.

Definition at line 195 of file UseDefGenerator.cc.

References onert::ir::operation::FullyConnected::Param::activation, onert::ir::OperandIndexSequence::at(), onert::ir::operation::Conv2D::BIAS, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::FullyConnected::INPUT, onert::ir::NONE, onert::ir::operation::FullyConnected::param(), and onert::ir::operation::FullyConnected::WEIGHT.

◆ visit() [6/11]

Declared `override`.

Definition at line 233 of file UseDefGenerator.cc.

References onert::util::ObjectManager< Index, Object >::at(), onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::Operation::getUsedInputSet(), onert::ir::train::TrainableGraph::operands(), onert::ir::operation::Loss::Y_PRED, and onert::ir::operation::Loss::Y_TRUE.

◆ visit() [7/11]

Declared `override`.

Definition at line 265 of file UseDefGenerator.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::Pad::INPUT, and onert::ir::operation::Pad::PAD.

◆ visit() [8/11]

Declared `override`.

Definition at line 287 of file UseDefGenerator.cc.

References onert::ir::operation::Pool2D::Param::activation, onert::ir::OperandIndexSequence::at(), onert::ir::operation::Pool2D::AVG, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::Pool2D::INPUT, onert::ir::operation::Pool2D::MAX, onert::ir::NONE, onert::ir::operation::Pool2D::Param::op_type, and onert::ir::operation::Pool2D::param().

◆ visit() [9/11]

Declared `override`.

Definition at line 318 of file UseDefGenerator.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::Reduce::INPUT, onert::ir::operation::Reduce::MEAN, onert::ir::operation::Reduce::param(), and onert::ir::operation::Reduce::Param::reduce_type.

◆ visit() [10/11]

Declared `override`.

Definition at line 340 of file UseDefGenerator.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), and onert::ir::operation::Reduce::INPUT.

◆ visit() [11/11]

Declared `override`.

Definition at line 357 of file UseDefGenerator.cc.

References onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), and onert::ir::operation::Reduce::INPUT.

The documentation for this class was generated from the following files:

- runtime/onert/core/src/ir/train/UseDefGenerator.h

- runtime/onert/core/src/ir/train/UseDefGenerator.cc
