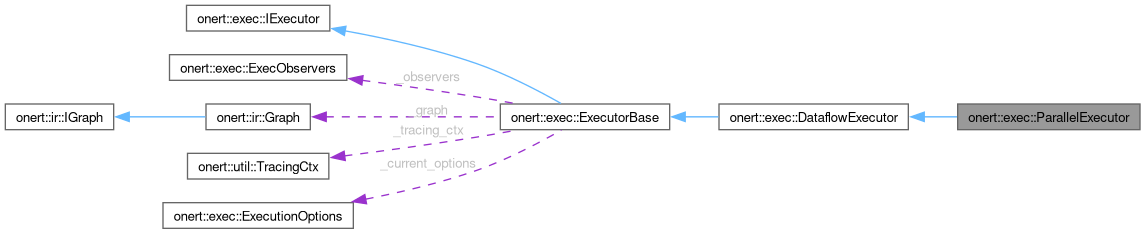

Class to execute Graph in parallel. More...

#include <ParallelExecutor.h>

Public Member Functions | |

| ParallelExecutor (std::unique_ptr< compiler::LoweredGraph > lowered_graph, backend::BackendContexts &&backend_contexts, const compiler::TensorRegistries &tensor_regs, compiler::CodeMap &&code_map, const util::TracingCtx *tracing_ctx) | |

| Constructs a ParallelExecutor object. | |

| void | executeImpl (const ExecutionObservee &subject) override |

Public Member Functions inherited from onert::exec::DataflowExecutor Public Member Functions inherited from onert::exec::DataflowExecutor | |

| DataflowExecutor (std::unique_ptr< compiler::LoweredGraph > lowered_graph, backend::BackendContexts &&backend_contexts, const compiler::TensorRegistries &tensor_regs, compiler::CodeMap &&code_map, const util::TracingCtx *tracing_ctx) | |

| Constructs a DataflowExecutor object. | |

Public Member Functions inherited from onert::exec::ExecutorBase Public Member Functions inherited from onert::exec::ExecutorBase | |

| ExecutorBase (std::unique_ptr< compiler::LoweredGraph > &&lowered_graph, backend::BackendContexts &&backend_contexts, const compiler::TensorRegistries &tensor_regs, const util::TracingCtx *tracing_ctx) | |

| Construct a new ExecutorBase object. | |

| virtual | ~ExecutorBase ()=default |

| const ir::Graph & | graph () const final |

| Returns graph object. | |

| void | execute (const std::vector< backend::IPortableTensor * > &inputs, const std::vector< backend::IPortableTensor * > &outputs, const ExecutionOptions &options) override |

| Execute with given input/output tensors. | |

| uint32_t | inputSize () const override |

| Get input size. | |

| uint32_t | outputSize () const override |

| Get output size. | |

| const ir::OperandInfo & | inputInfo (uint32_t index) const override |

| Get input info at index. | |

| const ir::OperandInfo & | outputInfo (uint32_t index) const override |

| Get output info at index. | |

| const uint8_t * | outputBuffer (uint32_t index) const final |

| Get output buffer at index. | |

| const backend::IPortableTensor * | outputTensor (uint32_t index) const final |

| Get output tensor at index. | |

| void | setIndexedRanks (std::shared_ptr< ir::OperationIndexMap< int64_t > > ranks) final |

| Set an ordering on operations. | |

| void | addObserver (std::unique_ptr< IExecutionObserver > ref) |

| backend::BackendContexts & | getBackendContexts () |

| const ExecutionOptions & | currentOptions () const override |

| Return current execution configuration. | |

Public Member Functions inherited from onert::exec::IExecutor Public Member Functions inherited from onert::exec::IExecutor | |

| IExecutor ()=default | |

| Construct a new IExecutor object. | |

| virtual | ~IExecutor ()=default |

| Destroy the IExecutor object. | |

Protected Member Functions | |

| void | notify (uint32_t finished_job_id) override |

Protected Member Functions inherited from onert::exec::DataflowExecutor Protected Member Functions inherited from onert::exec::DataflowExecutor | |

| bool | noWaitingJobs () |

| int64_t | calculateRank (const std::vector< ir::OperationIndex > &operations) |

| void | emplaceToReadyJobs (const uint32_t &id) |

Protected Member Functions inherited from onert::exec::ExecutorBase Protected Member Functions inherited from onert::exec::ExecutorBase | |

| bool | hasDynamicInput () |

Returns true if any input tensor is dynamic; false if all are static tensors. | |

Additional Inherited Members | |

Protected Attributes inherited from onert::exec::DataflowExecutor Protected Attributes inherited from onert::exec::DataflowExecutor | |

| compiler::CodeMap | _code_map |

| std::vector< std::unique_ptr< Job > > | _finished_jobs |

| A vector of finished jobs for current execution After a run it has all the jobs of this execution for the next run. | |

| std::vector< std::unique_ptr< Job > > | _waiting_jobs |

| A vector of waiting jobs for current execution All the jobs are moved from _finished_jobs to it when start a run. | |

| std::vector< std::list< uint32_t > > | _output_info |

| Jobs' output info Used for notifying after finishing a job. | |

| std::vector< uint32_t > | _initial_input_info |

| std::vector< uint32_t > | _input_info |

| std::multimap< int64_t, std::unique_ptr< Job >, std::greater< int64_t > > | _ready_jobs |

A collection of jobs that are ready for execution Jobs in it are ready to be scheduled. Ordered by priority from _indexed_ranks | |

| std::unordered_map< uint32_t, ir::OperationIndex > | _job_to_op |

| Which job runs which op and function. | |

Protected Attributes inherited from onert::exec::ExecutorBase Protected Attributes inherited from onert::exec::ExecutorBase | |

| ExecObservers | _observers |

| std::shared_ptr< ir::OperationIndexMap< int64_t > > | _indexed_ranks |

| std::unique_ptr< compiler::LoweredGraph > | _lowered_graph |

| backend::BackendContexts | _backend_contexts |

| const ir::Graph & | _graph |

| std::vector< backend::builtin::IOTensor * > | _input_tensors |

| std::vector< backend::builtin::IOTensor * > | _output_tensors |

| std::mutex | _mutex |

| const util::TracingCtx * | _tracing_ctx |

| ExecutionOptions | _current_options |

Detailed Description

Class to execute Graph in parallel.

Definition at line 33 of file ParallelExecutor.h.

Constructor & Destructor Documentation

◆ ParallelExecutor()

| onert::exec::ParallelExecutor::ParallelExecutor | ( | std::unique_ptr< compiler::LoweredGraph > | lowered_graph, |

| backend::BackendContexts && | backend_contexts, | ||

| const compiler::TensorRegistries & | tensor_regs, | ||

| compiler::CodeMap && | code_map, | ||

| const util::TracingCtx * | tracing_ctx | ||

| ) |

Constructs a ParallelExecutor object.

- Parameters

-

lowered_graph LoweredGraph object tensor_builders Tensor builders that are currently used code_map ir::Operationand its code map

Definition at line 60 of file ParallelExecutor.cc.

References VERBOSE.

Member Function Documentation

◆ executeImpl()

|

overridevirtual |

Reimplemented from onert::exec::DataflowExecutor.

Definition at line 71 of file ParallelExecutor.cc.

References onert::exec::DataflowExecutor::_finished_jobs, onert::exec::ExecutorBase::_graph, onert::exec::DataflowExecutor::_initial_input_info, onert::exec::DataflowExecutor::_input_info, onert::exec::DataflowExecutor::_job_to_op, onert::exec::ExecutorBase::_lowered_graph, onert::exec::DataflowExecutor::_ready_jobs, onert::exec::ExecutorBase::_tracing_ctx, onert::exec::DataflowExecutor::_waiting_jobs, onert::exec::DataflowExecutor::emplaceToReadyJobs(), onert::util::TracingCtx::getSubgraphIndex(), onert::exec::ExecutorBase::hasDynamicInput(), notify(), onert::exec::ExecutionObservee::notifyJobBegin(), onert::exec::ExecutionObservee::notifyJobEnd(), onert::exec::ExecutionObservee::notifySubgraphBegin(), onert::exec::ExecutionObservee::notifySubgraphEnd(), onert::exec::DataflowExecutor::noWaitingJobs(), and VERBOSE.

◆ notify()

|

overrideprotectedvirtual |

Reimplemented from onert::exec::DataflowExecutor.

Definition at line 50 of file ParallelExecutor.cc.

References onert::exec::DataflowExecutor::notify().

Referenced by executeImpl().

The documentation for this class was generated from the following files:

- runtime/onert/core/src/exec/ParallelExecutor.h

- runtime/onert/core/src/exec/ParallelExecutor.cc