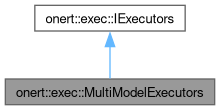

Class to gather executors.

#include <MultiModelExecutors.h>

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | `MultiModelExecutors(void) = delete` | |
| | `MultiModelExecutors(std::unique_ptr<ir::ModelEdges> model_edges)` | |
| | `MultiModelExecutors(const MultiModelExecutors &) = delete` | |
| | `MultiModelExecutors(MultiModelExecutors &&) = default` | |
| | `~MultiModelExecutors() = default` | |
| `void` | `emplace(const ir::ModelIndex &model_index, const ir::SubgraphIndex &subg_index, std::unique_ptr<IExecutor> exec) override` | Insert an executor into the executor set. |
| `IExecutor *` | `at(const ir::ModelIndex &model_index, const ir::SubgraphIndex &subg_index) const override` | Return the executor for the given model and subgraph index. |
| `uint32_t` | `inputSize() const override` | Return the number of inputs of the executor set. |
| `uint32_t` | `outputSize() const override` | Return the number of outputs of the executor set. |
| `const ir::OperandInfo &` | `inputInfo(const ir::IOIndex &index) const override` | Return the NN package input tensor info. |
| `const ir::OperandInfo &` | `outputInfo(const ir::IOIndex &index) const override` | Return the NN package output tensor info. |
| `const void *` | `outputBuffer(const ir::IOIndex &index) const final` | Return the NN package output buffer. |
| `const backend::IPortableTensor *` | `outputTensor(const ir::IOIndex &index) const final` | Return the NN package output tensor. |
| `void` | `execute(ExecutionContext &ctx) override` | Execute the executor set of the NN package. |

Public Member Functions inherited from onert::exec::IExecutors

| Return type | Member | Description |
|---|---|---|
| `virtual` | `~IExecutors() = default` | Virtual IExecutors destructor. |
| `virtual IExecutor *` | `entryExecutor() const` | |

Detailed Description

Class to gather the executors of an NN package, keyed by model index and subgraph index.

Definition at line 47 of file MultiModelExecutors.h.

Constructor & Destructor Documentation

◆ MultiModelExecutors() [1/4]

`MultiModelExecutors(void)` [delete]

◆ MultiModelExecutors() [2/4]

`MultiModelExecutors(std::unique_ptr<ir::ModelEdges> model_edges)` [inline]

Definition at line 51 of file MultiModelExecutors.h.

◆ MultiModelExecutors() [3/4]

`MultiModelExecutors(const MultiModelExecutors &)` [delete]

◆ MultiModelExecutors() [4/4]

`MultiModelExecutors(MultiModelExecutors &&)` [default]

◆ ~MultiModelExecutors()

`~MultiModelExecutors()` [default]
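The copy constructor is deleted and the move constructor is defaulted, so the executor set is move-only, which fits a class that takes ownership of its model edges through `std::unique_ptr<ir::ModelEdges>`. A minimal sketch of that pattern, using hypothetical stand-ins `FakeEdges` and `ExecutorSetSketch` rather than onert types, with the resulting traits checked at compile time:

```cpp
#include <cassert>
#include <memory>
#include <type_traits>
#include <utility>

// Hypothetical stand-in for ir::ModelEdges; not the onert type.
struct FakeEdges
{
  int edge_count = 0;
};

// Mirrors the constructor set documented above: no default constructor, a constructor
// that takes ownership of the edges, copy deleted, move defaulted.
class ExecutorSetSketch
{
public:
  ExecutorSetSketch(void) = delete;
  ExecutorSetSketch(std::unique_ptr<FakeEdges> edges) : _edges{std::move(edges)} {}
  ExecutorSetSketch(const ExecutorSetSketch &) = delete;
  ExecutorSetSketch(ExecutorSetSketch &&) = default;
  ~ExecutorSetSketch() = default;

  // Returns -1 for a moved-from object, whose edges pointer is null.
  int edgeCount() const { return _edges ? _edges->edge_count : -1; }

private:
  std::unique_ptr<FakeEdges> _edges;
};

// Compile-time checks of the resulting special-member traits.
static_assert(!std::is_default_constructible<ExecutorSetSketch>::value, "no default ctor");
static_assert(!std::is_copy_constructible<ExecutorSetSketch>::value, "copy is deleted");
static_assert(std::is_move_constructible<ExecutorSetSketch>::value, "move is defaulted");

// Builds a set, moves it, and reports what the source and destination hold afterwards.
inline std::pair<int, int> moveDemo()
{
  auto edges = std::make_unique<FakeEdges>();
  edges->edge_count = 3;
  ExecutorSetSketch original{std::move(edges)};
  ExecutorSetSketch moved{std::move(original)};
  return {original.edgeCount(), moved.edgeCount()}; // {-1, 3}
}
```

The defaulted move constructor moves the `std::unique_ptr` member, so the moved-from object is left with a null pointer; the sketch reads it only to show that.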

Member Function Documentation

◆ at()

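`IExecutor *at(const ir::ModelIndex &model_index, const ir::SubgraphIndex &subg_index) const` [override, virtual]

Return the executor registered for the given model index and subgraph index.

- Parameters
  - [in] model_index: Model index
  - [in] subg_index: Subgraph index
- Returns
  - Executor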

Implements onert::exec::IExecutors.

Definition at line 64 of file MultiModelExecutors.cc.

Referenced by execute(), inputInfo(), outputBuffer(), outputInfo(), and outputTensor().
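Because `at()` is keyed by a model index and a subgraph index together, one plausible storage is a map from the index pair to an owning `std::unique_ptr`, with `at()` handing out a non-owning raw pointer. The sketch below assumes that layout; `ModelIndex`, `SubgraphIndex`, `Executor`, `PairHash`, and `ExecutorMapSketch` are hypothetical simplifications, and the real container and its behavior for an unknown index may differ. A custom hash is needed because the standard library provides no `std::hash` specialization for `std::pair`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <stdexcept>
#include <unordered_map>
#include <utility>

// Hypothetical simplified index types; onert uses strongly typed index wrappers.
using ModelIndex = uint16_t;
using SubgraphIndex = uint16_t;

struct Executor
{
  int id;
};

// std::hash has no specialization for std::pair, so combine the two indices manually.
struct PairHash
{
  std::size_t operator()(const std::pair<ModelIndex, SubgraphIndex> &key) const
  {
    return (static_cast<std::size_t>(key.first) << 16) ^ key.second;
  }
};

class ExecutorMapSketch
{
public:
  void emplace(ModelIndex model, SubgraphIndex subg, std::unique_ptr<Executor> exec)
  {
    _executors.emplace(std::make_pair(model, subg), std::move(exec));
  }

  // Non-owning lookup; std::unordered_map::at throws std::out_of_range for unknown keys.
  Executor *at(ModelIndex model, SubgraphIndex subg) const
  {
    return _executors.at(std::make_pair(model, subg)).get();
  }

private:
  std::unordered_map<std::pair<ModelIndex, SubgraphIndex>, std::unique_ptr<Executor>, PairHash>
    _executors;
};

// Registers two executors and looks one up; returns -1 when the pair is unknown.
inline int lookupId(ModelIndex model, SubgraphIndex subg)
{
  ExecutorMapSketch set;
  set.emplace(0, 0, std::make_unique<Executor>(Executor{10}));
  set.emplace(1, 0, std::make_unique<Executor>(Executor{20}));
  try
  {
    return set.at(model, subg)->id;
  }
  catch (const std::out_of_range &)
  {
    return -1;
  }
}
```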

◆ emplace()

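`void emplace(const ir::ModelIndex &model_index, const ir::SubgraphIndex &subg_index, std::unique_ptr<IExecutor> exec)` [override, virtual]

Insert an executor into the executor set.

- Parameters
  - [in] model_index: Model index
  - [in] subg_index: Subgraph index
  - [in] exec: Executor to insert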

Implements onert::exec::IExecutors.

Definition at line 57 of file MultiModelExecutors.cc.
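`emplace()` receives the executor as `std::unique_ptr<IExecutor>` by value, so the caller has to `std::move` it in and hands ownership to the executor set. The sketch below illustrates that transfer with hypothetical `Executor` and `ExecutorSetSketch` types and plain integer indices. How the real class treats a second executor for the same index pair is not stated here; the duplicate handling shown (keep the first, discard the new one) is only this sketch's choice, inherited from `std::map::emplace`.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <utility>

// Hypothetical stand-in for IExecutor.
struct Executor
{
  int id;
};

class ExecutorSetSketch
{
public:
  // Taking std::unique_ptr by value makes the ownership transfer explicit at the call site.
  // Returns false if the key is already present (std::map::emplace does not overwrite);
  // in that case the new executor is discarded.
  bool emplace(int model, int subg, std::unique_ptr<Executor> exec)
  {
    return _executors.emplace(std::make_pair(model, subg), std::move(exec)).second;
  }

  std::size_t size() const { return _executors.size(); }

private:
  std::map<std::pair<int, int>, std::unique_ptr<Executor>> _executors;
};

struct EmplaceResult
{
  bool caller_empty;
  bool dup_inserted;
  std::size_t size;
};

inline EmplaceResult emplaceDemo()
{
  ExecutorSetSketch set;
  auto exec = std::make_unique<Executor>(Executor{1});
  set.emplace(0, 0, std::move(exec));    // ownership moves into the set
  bool caller_empty = (exec == nullptr); // a moved-from unique_ptr is null
  bool dup = set.emplace(0, 0, std::make_unique<Executor>(Executor{2}));
  return {caller_empty, dup, set.size()};
}
```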

◆ execute()

`void execute(ExecutionContext &ctx)` [override, virtual]

Execute the executor set of the NN package.

- Parameters
  - [in,out] ctx: Execution context. It reflects the execution result (e.g., output shapes from shape inference).

Implements onert::exec::IExecutors.

Definition at line 229 of file MultiModelExecutors.cc.

References at(), onert::exec::ExecutionContext::desc, onert::exec::IExecutor::inputSize(), onert::exec::ExecutionContext::options, onert::exec::IODescription::outputs, outputTensor(), and onert::util::Index< T, DummyTag >::value().
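How `execute()` chains the models is not spelled out above, but the class is constructed from `ir::ModelEdges`, which suggests that outputs of one model feed inputs of another. The following is a heavily simplified sketch under explicit assumptions: each model is a function over scalar `float` values, models run in ascending index order, and every edge points from an earlier model to a later one. `Edge`, `ModelExecutor`, and `executeSketch` are hypothetical; the real implementation works on `ExecutionContext` and tensors and also propagates results such as inferred output shapes.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <vector>

// Hypothetical edge: output `from_io` of model `from_model` feeds input `to_io` of `to_model`.
struct Edge
{
  int from_model, from_io;
  int to_model, to_io;
};

// Hypothetical executor: a function from its input values to its output values.
struct ModelExecutor
{
  std::vector<float> inputs;
  std::vector<float> outputs;
  std::function<std::vector<float>(const std::vector<float> &)> run;
};

// Runs executors in ascending model index order (std::map iteration order) and forwards
// each produced output along the edges that start at that model.
// Assumes every edge points to a later model, i.e. an acyclic, pre-sorted pipeline.
inline void executeSketch(std::map<int, ModelExecutor> &executors, const std::vector<Edge> &edges)
{
  for (auto &entry : executors)
  {
    ModelExecutor &exec = entry.second;
    exec.outputs = exec.run(exec.inputs);
    for (const Edge &e : edges)
    {
      if (e.from_model != entry.first)
        continue;
      executors.at(e.to_model).inputs.at(e.to_io) = exec.outputs.at(e.from_io);
    }
  }
}

// Two-model pipeline: model 0 doubles its input, model 1 adds one to what it receives.
inline float pipelineDemo(float x)
{
  std::map<int, ModelExecutor> executors;
  executors[0] = ModelExecutor{{x}, {0.0f}, [](const std::vector<float> &in) {
                                 return std::vector<float>{in[0] * 2.0f};
                               }};
  executors[1] = ModelExecutor{{0.0f}, {0.0f}, [](const std::vector<float> &in) {
                                 return std::vector<float>{in[0] + 1.0f};
                               }};
  executeSketch(executors, {Edge{0, 0, 1, 0}});
  return executors.at(1).outputs.at(0);
}
```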

◆ inputInfo()

`const ir::OperandInfo &inputInfo(const ir::IOIndex &index) const` [override, virtual]

Return the NN package input tensor info.

- Parameters
  - [in] index: Input index
- Returns
  - Tensor info

Implements onert::exec::IExecutors.

Definition at line 74 of file MultiModelExecutors.cc.

References at(), and onert::util::Index< T, DummyTag >::value().
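`inputInfo()` references `at()` and `Index::value()`, which is consistent with resolving a package-level input index to the executor that owns that input and then delegating to it. The sketch below assumes such a lookup table of (model, subgraph, IO) triples; `OperandInfoSketch`, `ExecutorSketch`, `IODescSketch`, and `PackageSketch` are hypothetical and much simpler than `ir::OperandInfo` and the real executor interface. `outputInfo()` would follow the same pattern with an output table.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical simplified operand info (the real type is ir::OperandInfo).
struct OperandInfoSketch
{
  std::string name;
  std::vector<int32_t> shape;
};

// Hypothetical executor exposing per-model input infos.
struct ExecutorSketch
{
  std::vector<OperandInfoSketch> input_infos;
  const OperandInfoSketch &inputInfo(uint32_t io) const { return input_infos.at(io); }
};

// Which model, subgraph, and IO slot a package-level input maps to.
struct IODescSketch
{
  uint32_t model, subg, io;
};

struct PackageSketch
{
  std::map<std::pair<uint32_t, uint32_t>, std::unique_ptr<ExecutorSketch>> executors;
  std::vector<IODescSketch> pkg_inputs;

  const ExecutorSketch *at(uint32_t model, uint32_t subg) const
  {
    return executors.at({model, subg}).get();
  }

  // Resolve the package input index to its owning executor, then delegate.
  const OperandInfoSketch &inputInfo(uint32_t index) const
  {
    const IODescSketch &desc = pkg_inputs.at(index);
    return at(desc.model, desc.subg)->inputInfo(desc.io);
  }
};

inline std::string inputNameDemo(uint32_t index)
{
  PackageSketch pkg;
  auto m0 = std::make_unique<ExecutorSketch>();
  m0->input_infos.push_back({"image", {1, 224, 224, 3}});
  auto m1 = std::make_unique<ExecutorSketch>();
  m1->input_infos.push_back({"features", {1, 1000}});
  m1->input_infos.push_back({"mask", {1, 1000}});
  pkg.executors[{0, 0}] = std::move(m0);
  pkg.executors[{1, 0}] = std::move(m1);
  // Package input 0 -> model 0, input 0; package input 1 -> model 1, input 1.
  pkg.pkg_inputs = {{0, 0, 0}, {1, 0, 1}};
  return pkg.inputInfo(index).name;
}
```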

◆ inputSize()

`uint32_t inputSize() const` [override, virtual]

Return the number of inputs of the executor set.

- Returns
  - Number of inputs

Implements onert::exec::IExecutors.

Definition at line 70 of file MultiModelExecutors.cc.
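The package-level input count need not equal the total number of inputs across all executors: an input fed by another model's output through an edge is internal to the package. The sketch below illustrates that by counting executor inputs that are not the destination of any edge. This derivation is an assumption for illustration only; the actual class may instead read the count from an explicitly declared list of package inputs. `ModelSketch`, `EdgeSketch`, and `packageInputSize` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <set>
#include <utility>
#include <vector>

// Hypothetical description of one model: how many inputs its executor has.
struct ModelSketch
{
  uint32_t input_count;
};

// Hypothetical edge from (from_model, from_io) output to (to_model, to_io) input.
struct EdgeSketch
{
  uint32_t from_model, from_io, to_model, to_io;
};

// Counts the inputs left open at package level: every executor input that is not
// the destination of an edge.
inline uint32_t packageInputSize(const std::vector<ModelSketch> &models,
                                 const std::vector<EdgeSketch> &edges)
{
  std::set<std::pair<uint32_t, uint32_t>> fed_by_edge;
  for (const EdgeSketch &e : edges)
    fed_by_edge.insert({e.to_model, e.to_io});

  uint32_t count = 0;
  for (uint32_t m = 0; m < models.size(); ++m)
    for (uint32_t io = 0; io < models[m].input_count; ++io)
      if (fed_by_edge.count({m, io}) == 0)
        ++count;
  return count;
}
```

For two models where model 0 has two inputs and model 1's single input is fed by output 0 of model 0, `packageInputSize({{2}, {1}}, {{0, 0, 1, 0}})` reports two package inputs, although the executors have three inputs in total.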

◆ outputBuffer()

`const void *outputBuffer(const ir::IOIndex &index) const` [final, virtual]

Return the NN package output buffer.

- Parameters
  - [in] index: Output index
- Returns
  - Buffer of the output

Implements onert::exec::IExecutors.

Definition at line 88 of file MultiModelExecutors.cc.

References at(), and onert::util::Index< T, DummyTag >::value().
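`outputBuffer()` and `outputTensor()` are declared `final` rather than only `override`, so a class derived from `MultiModelExecutors` cannot override them again. A small stand-alone illustration of that C++ rule, with hypothetical `IExecutorsSketch`, `MultiSketch`, and `DerivedSketch` classes that do not reproduce onert's implementation:

```cpp
#include <cassert>

// Hypothetical interface with one pure virtual accessor.
struct IExecutorsSketch
{
  virtual ~IExecutorsSketch() = default;
  virtual const void *outputBuffer(int index) const = 0;
};

// `final` on the override seals it: a further derived class that declares
// outputBuffer(int) const would fail to compile.
class MultiSketch : public IExecutorsSketch
{
public:
  const void *outputBuffer(int index) const final
  {
    return index == 0 ? static_cast<const void *>(&_value) : nullptr;
  }

private:
  float _value = 1.5f;
};

// A derived class can still be declared; it just cannot override outputBuffer again.
class DerivedSketch : public MultiSketch
{
  // const void *outputBuffer(int) const override;  // error: overrides a final function
};

// Reads the buffer through the base interface, i.e. via virtual dispatch.
inline float readOutput(const IExecutorsSketch &exec, int index)
{
  const void *buf = exec.outputBuffer(index);
  return buf ? *static_cast<const float *>(buf) : -1.0f;
}
```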

◆ outputInfo()

`const ir::OperandInfo &outputInfo(const ir::IOIndex &index) const` [override, virtual]

Return the NN package output tensor info.

- Parameters
  - [in] index: Output index
- Returns
  - Tensor info

Implements onert::exec::IExecutors.

Definition at line 81 of file MultiModelExecutors.cc.

References at(), and onert::util::Index< T, DummyTag >::value().

◆ outputSize()

`uint32_t outputSize() const` [override, virtual]

Return the number of outputs of the executor set.

- Returns
  - Number of outputs

Implements onert::exec::IExecutors.

Definition at line 72 of file MultiModelExecutors.cc.

◆ outputTensor()

`const backend::IPortableTensor *outputTensor(const ir::IOIndex &index) const` [final, virtual]

Return the NN package output tensor.

- Parameters
  - [in] index: Output index
- Returns
  - Tensor of the output

Implements onert::exec::IExecutors.

Definition at line 95 of file MultiModelExecutors.cc.

References at(), and onert::util::Index< T, DummyTag >::value().

Referenced by execute().

The documentation for this class was generated from the following files:

- runtime/onert/core/src/exec/MultiModelExecutors.h

- runtime/onert/core/src/exec/MultiModelExecutors.cc