Loading...

Searching...

No Matches

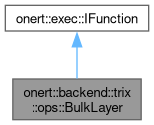

onert::backend::trix::ops::BulkLayer Class Reference

#include <BulkLayer.h>

Public Member Functions

| Return type | Member |
|---|---|
| | BulkLayer () |
| | ~BulkLayer () |
| void | configure (const std::vector< const IPortableTensor * > &inputs, std::vector< IPortableTensor * > &outputs, std::string binary_path, const std::shared_ptr< DevContext > &dev_context) |
| void | run () override |
| void | prepare () override |

Public Member Functions inherited from onert::exec::IFunction

| Return type | Member |
|---|---|
| virtual | ~IFunction ()=default |

Detailed Description

Definition at line 28 of file BulkLayer.h.

Constructor & Destructor Documentation

◆ BulkLayer()

onert::backend::trix::ops::BulkLayer::BulkLayer ()

Definition at line 24 of file BulkLayer.cc.

    : _inputs(), _outputs(), _model_id(0), _dev_context(nullptr)
    {
      // DO NOTHING
    }

◆ ~BulkLayer()

onert::backend::trix::ops::BulkLayer::~BulkLayer ()

Definition at line 29 of file BulkLayer.cc.

    { _dev_context->unRegisterModel(_model_id); }

Member Function Documentation

◆ configure()

void onert::backend::trix::ops::BulkLayer::configure (const std::vector< const IPortableTensor * > &inputs, std::vector< IPortableTensor * > &outputs, std::string binary_path, const std::shared_ptr< DevContext > &dev_context)

Definition at line 31 of file BulkLayer.cc.

    {
      _inputs = inputs;
      _outputs = outputs;
      _dev_context = dev_context;
      _model_id = _dev_context->registerModel(binary_path);
    }
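The listings above pair a `registerModel` call in `configure()` with an `unRegisterModel` call in the destructor. Because the constructor initializes `_dev_context` to `nullptr` and the destructor dereferences it unconditionally, destroying a layer that was never configured would dereference a null pointer. The following is a minimal sketch of that lifecycle, not the onert implementation: `FakeDevContext`, `SketchLayer`, `registeredAfterScope`, the `std::uint32_t` model id type, and the `"model.tvn"` path are all hypothetical stand-ins, and the null check in the destructor is an addition made for the sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <set>
#include <string>

// Hypothetical stand-in for DevContext; the real class drives the TRIX device.
class FakeDevContext
{
public:
  // Pretend to load a model binary and hand back a fresh, non-zero id.
  std::uint32_t registerModel(const std::string &binary_path)
  {
    (void)binary_path; // the path is not inspected in this sketch
    std::uint32_t id = _next_id++;
    _registered.insert(id);
    return id;
  }

  void unRegisterModel(std::uint32_t model_id) { _registered.erase(model_id); }

  std::size_t registeredCount() const { return _registered.size(); }

private:
  std::uint32_t _next_id = 1;
  std::set<std::uint32_t> _registered;
};

// Simplified layer that mirrors only the registration lifecycle.
class SketchLayer
{
public:
  SketchLayer() : _model_id(0), _dev_context(nullptr) {}

  ~SketchLayer()
  {
    // Guard added for the sketch: skip cleanup if configure() never ran.
    if (_dev_context)
      _dev_context->unRegisterModel(_model_id);
  }

  void configure(const std::string &binary_path,
                 const std::shared_ptr<FakeDevContext> &dev_context)
  {
    _dev_context = dev_context;
    _model_id = _dev_context->registerModel(binary_path);
  }

  std::uint32_t modelId() const { return _model_id; }

private:
  std::uint32_t _model_id;
  std::shared_ptr<FakeDevContext> _dev_context;
};

// Returns how many models are still registered after a layer leaves scope.
std::size_t registeredAfterScope()
{
  auto ctx = std::make_shared<FakeDevContext>();
  {
    SketchLayer layer;
    layer.configure("model.tvn", ctx); // hypothetical binary path
    assert(ctx->registeredCount() == 1);
  }
  return ctx->registeredCount();
}
```

In this sketch the registration lives exactly as long as the configured layer, and an unconfigured `SketchLayer` can be destroyed safely. Whether the real class needs the same guard depends on whether onert ever destroys a `BulkLayer` without calling `configure()` first, which this page does not say.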

◆ prepare()

void onert::backend::trix::ops::BulkLayer::prepare () [override, virtual]

Reimplemented from onert::exec::IFunction.

Definition at line 62 of file BulkLayer.cc.

    {
      // DO NOTHING
    }

◆ run()

void onert::backend::trix::ops::BulkLayer::run () [override, virtual]

Implements onert::exec::IFunction.

Definition at line 41 of file BulkLayer.cc.

    {
      tensors_data_info in_info;
      tensors_data_info out_info;
      setDataInfo(_inputs, &in_info);
      setDataInfo(_outputs, &out_info);

      input_buffers input_bufs;
      output_buffers output_bufs;
      setBuffers(_inputs, &input_bufs);
      setBuffers(_outputs, &output_bufs);

      size_t batch_size = 1;
      // TODO Remove this assumption
      if (_inputs.size() == 1 && _outputs.size() == 1 && _inputs.at(0)->getShape().dim(0) > 1)
      {
        batch_size = _inputs.at(0)->getShape().dim(0);
      }
      _dev_context->requestRun(_model_id, &input_bufs, &in_info, &output_bufs, &out_info, batch_size);
    }

- void setBuffers(const std::vector< T * > &tensors, generic_buffers *buf): Set the generic_buffers object. Definition: Convert.h:65

- void setDataInfo(const std::vector< T * > &tensors, tensors_data_info *info): Set the tensors_data_info object. Definition: Convert.h:46

References onert::backend::trix::setBuffers(), and onert::backend::trix::setDataInfo().
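The batch-size rule in `run()` can be read in isolation: the batch size defaults to 1 and is taken from the leading dimension only when there is exactly one input, exactly one output, and that dimension is greater than 1. The sketch below restates the rule as a free function for illustration. `Shape`, `inferBatchSize`, and `exampleUsage` are hypothetical names, tensors are reduced to plain dimension vectors, and the empty-shape check is an extra guard that the listing above does not have.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in: a tensor is represented only by its dimensions.
using Shape = std::vector<int>;

// Mirrors the condition in run(): use dim(0) as the batch size only for a
// single-input, single-output model whose leading dimension exceeds 1.
std::size_t inferBatchSize(const std::vector<Shape> &inputs,
                           const std::vector<Shape> &outputs)
{
  std::size_t batch_size = 1;
  if (inputs.size() == 1 && outputs.size() == 1 &&
      !inputs.at(0).empty() && // guard added for the sketch (rank-0 shapes)
      inputs.at(0).at(0) > 1)
  {
    batch_size = static_cast<std::size_t>(inputs.at(0).at(0));
  }
  return batch_size;
}

// Small usage example covering the three branches of the rule.
void exampleUsage()
{
  // One input, one output, leading dimension 4: batch size follows dim(0).
  assert(inferBatchSize({Shape{4, 224, 224, 3}}, {Shape{4, 1000}}) == 4);
  // Leading dimension of 1 keeps the default.
  assert(inferBatchSize({Shape{1, 8}}, {Shape{1, 8}}) == 1);
  // Two inputs: the rule does not apply, so the default is kept.
  assert(inferBatchSize({Shape{4, 8}, Shape{4, 8}}, {Shape{4, 8}}) == 1);
}
```

As the `TODO` in the listing indicates, this is an assumption in the current code: a model with several inputs or outputs is always run with a batch size of 1, regardless of its leading dimensions.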

The documentation for this class was generated from the following files:

- runtime/onert/backend/trix/ops/BulkLayer.h

- runtime/onert/backend/trix/ops/BulkLayer.cc