#include <KernelGenerator.h>

Additional Inherited Members

Protected Attributes inherited from onert::backend::train::KernelGeneratorBase

| Type | Name |
| --- | --- |
| const ir::train::TrainableGraph & | _tgraph |
| std::unique_ptr< exec::train::ITrainableFunction > | _return_fn |

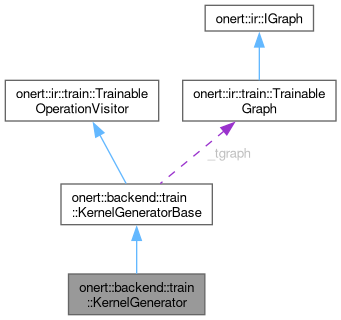

Detailed Description

Definition at line 35 of file KernelGenerator.h.

Constructor & Destructor Documentation

◆ KernelGenerator()

onert::backend::train::KernelGenerator::KernelGenerator ( const ir::train::TrainableGraph & tgraph, const std::shared_ptr< TensorRegistry > & tensor_reg, const std::shared_ptr< ExternalContext > & external_context, const exec::train::optimizer::Optimizer * optimizer )

Definition at line 142 of file KernelGenerator.cc.

References onert::util::ObjectManager< Index, Object >::iterate() and onert::ir::train::TrainableGraph::operations().

Member Function Documentation

◆ generate()

override, virtual

Implements onert::backend::train::KernelGeneratorBase.

Definition at line 110 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::backend::train::KernelGeneratorBase::_tgraph, onert::ir::train::TrainableGraph::operation(), and onert::ir::UNDEFINED.
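The references above suggest a visitor-style flow: generate() looks up an operation in the graph, and each visit() overload stores its result in _return_fn. The following is a minimal, self-contained sketch of that pattern, not the onert implementation. Every type here (Function, Op, OpVisitor, Graph, SketchKernelGenerator, and the two example operations) is a hypothetical stand-in, and the integer operation index is an assumption made for brevity.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical stand-ins for illustration; these are NOT the real onert types.
struct Function {
  virtual ~Function() = default;
  virtual std::string name() const = 0;
};

struct AddFn : Function {
  std::string name() const override { return "Add"; }
};

struct SoftmaxFn : Function {
  std::string name() const override { return "Softmax"; }
};

struct AddOp;
struct SoftmaxOp;

// One visit() overload per supported operation type.
struct OpVisitor {
  virtual ~OpVisitor() = default;
  virtual void visit(const AddOp &) = 0;
  virtual void visit(const SoftmaxOp &) = 0;
};

struct Op {
  virtual ~Op() = default;
  // Double dispatch: the operation selects the matching visit() overload.
  virtual void accept(OpVisitor &v) const = 0;
};

struct AddOp : Op {
  void accept(OpVisitor &v) const override { v.visit(*this); }
};

struct SoftmaxOp : Op {
  void accept(OpVisitor &v) const override { v.visit(*this); }
};

// Assumed graph representation: operation index -> operation.
using Graph = std::unordered_map<int, std::unique_ptr<Op>>;

class SketchKernelGenerator : public OpVisitor {
public:
  explicit SketchKernelGenerator(const Graph &graph) : _graph(graph) {}

  // Looks up the operation, lets it dispatch to visit(), then hands the
  // function object stored in _return_fn over to the caller.
  std::unique_ptr<Function> generate(int op_index) {
    _return_fn.reset();
    _graph.at(op_index)->accept(*this);
    assert(_return_fn != nullptr); // every visit() is expected to set it
    return std::move(_return_fn);
  }

  void visit(const AddOp &) override { _return_fn = std::make_unique<AddFn>(); }
  void visit(const SoftmaxOp &) override { _return_fn = std::make_unique<SoftmaxFn>(); }

private:
  const Graph &_graph;
  std::unique_ptr<Function> _return_fn;
};
```

In this sketch, a caller fills a Graph with operations, constructs SketchKernelGenerator over it, and calls generate() once per operation index. Passing the result through a member rather than a return value lets every visit() overload keep the same void signature.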

◆ visit() [1/11]

override

Definition at line 156 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::ir::operation::BinaryArithmetic::Param::activation, onert::ir::operation::BinaryArithmetic::Param::arithmetic_type, onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::train::TrainableOperation::isRequiredForBackward(), and onert::ir::operation::BinaryArithmetic::param().

◆ visit() [2/11]

override

Definition at line 187 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::backend::train::KernelGeneratorBase::_tgraph, onert::ir::operation::Conv2D::Param::activation, onert::util::ObjectManager< Index, Object >::at(), onert::ir::OperandIndexSequence::at(), bias_tensor, onert::ir::calculatePadding(), onert::ir::operation::Conv2D::Param::dilation, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::train::TrainableOperation::isRequiredForBackward(), ker_tensor, onert::ir::train::TrainableGraph::operands(), onert::ir::operation::Conv2D::Param::padding, onert::ir::operation::Conv2D::param(), and onert::ir::operation::Conv2D::Param::stride.

◆ visit() [3/11]

override

Definition at line 248 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::backend::train::KernelGeneratorBase::_tgraph, onert::ir::operation::DepthwiseConv2D::Param::activation, onert::util::ObjectManager< Index, Object >::at(), onert::ir::OperandIndexSequence::at(), bias_tensor, onert::ir::calculatePadding(), onert::ir::operation::DepthwiseConv2D::Param::dilation, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::Dilation::height_factor, onert::ir::train::TrainableOperation::isRequiredForBackward(), ker_tensor, onert::ir::operation::DepthwiseConv2D::Param::multiplier, onert::ir::train::TrainableGraph::operands(), onert::ir::operation::DepthwiseConv2D::Param::padding, onert::ir::operation::DepthwiseConv2D::param(), onert::ir::operation::DepthwiseConv2D::Param::stride, and onert::ir::Dilation::width_factor.

◆ visit() [4/11]

override

Definition at line 304 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::ir::operation::ElementwiseActivation::Param::alpha, onert::ir::operation::ElementwiseActivation::Param::approximate, onert::ir::OperandIndexSequence::at(), onert::ir::operation::ElementwiseActivation::Param::beta, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::train::TrainableOperation::isRequiredForBackward(), onert::backend::cpu::ops::kReLU, onert::ir::operation::ElementwiseActivation::Param::op_type, onert::ir::operation::ElementwiseActivation::param(), onert::ir::operation::ElementwiseActivation::RELU, and type.

◆ visit() [5/11]

override

Definition at line 342 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::ir::operation::FullyConnected::Param::activation, onert::ir::OperandIndexSequence::at(), bias_tensor, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::train::TrainableOperation::isRequiredForBackward(), onert::ir::operation::FullyConnected::param(), and onert::ir::operation::FullyConnected::Param::weights_format.

◆ visit() [6/11]

override

Definition at line 387 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::ir::OperandIndexSequence::at(), onert::ir::train::CategoricalCrossentropy, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::train::LossInfo::loss_code, onert::ir::train::LossInfo::loss_param, onert::ir::train::MeanSquaredError, onert::ir::train::operation::Loss::param(), onert::ir::train::LossInfo::reduction_type, and onert::ir::train::operation::Loss::y_pred_op_code().

◆ visit() [7/11]

override

Definition at line 439 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::Pad::INPUT, onert::ir::train::TrainableOperation::isRequiredForBackward(), onert::ir::operation::Pad::PAD, onert::ir::OperandIndexSequence::size(), and onert::ir::operation::Pad::VALUE.

◆ visit() [8/11]

override

Definition at line 468 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::backend::train::KernelGeneratorBase::_tgraph, onert::ir::operation::Pool2D::Param::activation, onert::util::ObjectManager< Index, Object >::at(), onert::ir::OperandIndexSequence::at(), onert::ir::calculatePadding(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::train::TrainableOperation::isRequiredForBackward(), onert::backend::cpu::ops::kAvg, onert::backend::train::ops::kAvg, onert::ir::operation::Pool2D::Param::kh, onert::backend::cpu::ops::kMax, onert::backend::train::ops::kMax, onert::ir::operation::Pool2D::Param::kw, onert::ir::operation::Pool2D::name(), onert::ir::operation::Pool2D::Param::op_type, onert::ir::train::TrainableGraph::operands(), onert::ir::operation::Pool2D::Param::padding, onert::ir::operation::Pool2D::param(), and onert::ir::operation::Pool2D::Param::stride.

◆ visit() [9/11]

override

Definition at line 527 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::train::TrainableOperation::isRequiredForBackward(), onert::ir::operation::Reduce::Param::keep_dims, onert::ir::operation::Reduce::MEAN, onert::ir::operation::Reduce::param(), and onert::ir::operation::Reduce::Param::reduce_type.

◆ visit() [10/11]

override

Definition at line 559 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::ir::OperandIndexSequence::at(), onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::Reshape::INPUT, onert::ir::train::TrainableOperation::isRequiredForBackward(), onert::ir::operation::Reshape::SHAPE, and onert::ir::OperandIndexSequence::size().

◆ visit() [11/11]

override

Definition at line 590 of file KernelGenerator.cc.

References onert::backend::train::KernelGeneratorBase::_return_fn, onert::ir::OperandIndexSequence::at(), onert::ir::operation::Softmax::Param::beta, onert::ir::Operation::getInputs(), onert::ir::Operation::getOutputs(), onert::ir::operation::Softmax::INPUT, onert::ir::train::TrainableOperation::isRequiredForBackward(), and onert::ir::operation::Softmax::param().

The documentation for this class was generated from the following files:

- runtime/onert/backend/train/KernelGenerator.h

- runtime/onert/backend/train/KernelGenerator.cc