Loading...

Searching...

No Matches

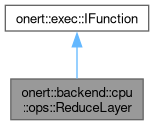

onert::backend::cpu::ops::ReduceLayer Class Reference

#include <ReduceLayer.h>

Public Member Functions

| Return type | Member |
| --- | --- |
| | ReduceLayer () |
| | ~ReduceLayer () |
| void | configure (const IPortableTensor *input, const IPortableTensor *axes, IPortableTensor *output, ReduceType reduceType, bool keep_dims) |
| void | run () override |

Public Member Functions inherited from onert::exec::IFunction

| Return type | Member |
| --- | --- |
| virtual | ~IFunction ()=default |
| virtual void | prepare () |

Detailed Description

Definition at line 46 of file ReduceLayer.h.

Constructor & Destructor Documentation

◆ ReduceLayer()

onert::backend::cpu::ops::ReduceLayer::ReduceLayer()

Definition at line 216 of file ReduceLayer.cc.

    ReduceLayer::ReduceLayer()
      : /* initializers on line 217 are missing from this extract */
        _kernel(), _reduceType(ReduceType::kInvalid)
    {
      // DO NOTHING
    }

◆ ~ReduceLayer()

onert::backend::cpu::ops::ReduceLayer::~ReduceLayer() [default]

Member Function Documentation

◆ configure()

void onert::backend::cpu::ops::ReduceLayer::configure(const IPortableTensor *input, const IPortableTensor *axes, IPortableTensor *output, ReduceType reduceType, bool keep_dims)

Definition at line 225 of file ReduceLayer.cc.

    // The signature and the case labels were dropped by the extraction; they are
    // restored here from the prototype above and the References list below.
    void ReduceLayer::configure(const IPortableTensor *input, const IPortableTensor *axes,
                                IPortableTensor *output, ReduceType reduceType, bool keep_dims)
    {
      _input = input;
      _axes = axes;
      _output = output;
      _reduceType = reduceType;

      switch (_reduceType)
      {
        case ReduceType::kSum:
          if (/* check on _input->data_type(); exact condition missing from this extract */)
          {
            // Quantized input: bind the trailing arguments so the kernel takes three.
            _kernel = std::bind(&evalSumQuantized, std::placeholders::_1, std::placeholders::_2,
                                std::placeholders::_3, keep_dims, *_reduce_kernel);
            return;
          }
          _kernel = generateKernelGeneric(_input, keep_dims, *_reduce_kernel, ReduceType::kSum);
          break;
        case ReduceType::kProd:
          _kernel = generateKernelGeneric(_input, keep_dims, *_reduce_kernel, ReduceType::kProd);
          break;
        case ReduceType::kMax:
          _kernel = generateKernelGeneric(_input, keep_dims, *_reduce_kernel, ReduceType::kMax);
          break;
        case ReduceType::kMin:
          _kernel = generateKernelGeneric(_input, keep_dims, *_reduce_kernel, ReduceType::kMin);
          break;
        case ReduceType::kAny:
          _kernel = generateKernelGeneric(_input, keep_dims, *_reduce_kernel, ReduceType::kAny);
          break;
        case ReduceType::kAll:
          _kernel = generateKernelGeneric(_input, keep_dims, *_reduce_kernel, ReduceType::kAll);
          break;
        default:
          throw std::runtime_error{"Reduce: Unsupported reduce type"};
      }
    }

Referenced declaration: ir::DataType data_type() const override final (defined at IPortableTensor.h:54)

References onert::backend::IPortableTensor::data_type(), onert::backend::cpu::ops::kAll, onert::backend::cpu::ops::kAny, onert::backend::cpu::ops::kMax, onert::backend::cpu::ops::kMin, onert::backend::cpu::ops::kProd, and onert::backend::cpu::ops::kSum.
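The selection logic in configure() follows a common pattern: a switch over an enum picks a callable once, and std::bind fixes the trailing arguments so that the stored kernel has a uniform signature. The following is a minimal, self-contained sketch of that pattern only. `ReduceKind`, `Kernel`, `fold`, and `selectKernel` are hypothetical stand-ins invented for illustration; they are not onert types, and the real kernels operate on tensors rather than on a flat vector.

```cpp
#include <functional>
#include <limits>
#include <stdexcept>
#include <vector>

// Hypothetical stand-ins for illustration; these are not the onert types.
enum class ReduceKind { kSum, kProd, kMax, kInvalid };
using Kernel = std::function<float(const std::vector<float> &)>;

// Generic left fold: the data comes first, the configuration arguments follow.
float fold(const std::vector<float> &data, float init, float (*op)(float, float))
{
  float acc = init;
  for (float v : data)
    acc = op(acc, v);
  return acc;
}

float add(float a, float b) { return a + b; }
float mul(float a, float b) { return a * b; }
float maxOf(float a, float b) { return a > b ? a : b; }

// Chooses a kernel once; std::bind fixes `init` and `op`, leaving only the data open.
Kernel selectKernel(ReduceKind kind)
{
  using std::placeholders::_1;
  switch (kind)
  {
    case ReduceKind::kSum:
      return std::bind(&fold, _1, 0.0f, &add);
    case ReduceKind::kProd:
      return std::bind(&fold, _1, 1.0f, &mul);
    case ReduceKind::kMax:
      return std::bind(&fold, _1, std::numeric_limits<float>::lowest(), &maxOf);
    default:
      throw std::runtime_error{"Reduce: Unsupported reduce type"};
  }
}
```

In this sketch, `selectKernel(ReduceKind::kSum)` returns a callable that can be stored and invoked repeatedly on different inputs, which mirrors how the listing stores its choice in `_kernel` during configuration and calls it later.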

◆ run()

void onert::backend::cpu::ops::ReduceLayer::run() [override, virtual]

Implements onert::exec::IFunction.

Definition at line 264 of file ReduceLayer.cc.

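    void ReduceLayer::run()
    {
      // Restored: line 266 is missing from this extract. The References list names
      // getReducerAxes(), which returns the std::vector<int32_t> used as `axes` below.
      const auto axes = getReducerAxes(_axes);
    #ifdef USE_NEON
      int32_t rank = _input->getShape().rank();
      if (/* leading checks on line 269 are missing from this extract; they reference
             _input->data_type() and ReduceType::kSum && */
          axes.size() == 1 && (axes[0] == -1 || axes[0] == rank - 1))
      {
        // Fast path: float sum over the last axis only.
        OptimizedReduceSum(getBuffer<float>(_input), getShape(_input), getBuffer<float>(_output));
        return;
      }
    #endif // NEON
      _kernel(_input, _output, axes);
    }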

Referenced declarations:

- nnfw::cker::Shape getShape(const IPortableTensor *tensor) (defined at OperationUtils.h:89)

- std::vector< int32_t > getReducerAxes(const IPortableTensor *axes) (defined at OperationUtils.cc:278)

References onert::backend::IPortableTensor::data_type(), onert::backend::cpu::ops::getReducerAxes(), onert::backend::IPortableTensor::getShape(), onert::backend::cpu::ops::getShape(), and onert::backend::cpu::ops::kSum.
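The NEON branch in run() is taken only when a single axis is given and that axis is the last one, written either as -1 or as rank - 1. A plausible reason, stated here as an assumption rather than something the listing confirms, is that in a row-major buffer the elements along the last axis are contiguous, so each output value is the sum of one contiguous run. The sketch below illustrates the axis test and a plain scalar version of such a row sum. `isLastAxisOnly` and `sumLastAxis` are hypothetical helpers written for this example; they are not part of onert and do not reproduce OptimizedReduceSum.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// True when `axes` names exactly one axis and that axis is the innermost one,
// written either as -1 or as rank - 1 (the same test as in the listing).
bool isLastAxisOnly(const std::vector<int32_t> &axes, int32_t rank)
{
  return axes.size() == 1 && (axes[0] == -1 || axes[0] == rank - 1);
}

// Sums each contiguous row of a row-major buffer.
// `inner` is the size of the last dimension (assumed layout: row-major).
std::vector<float> sumLastAxis(const std::vector<float> &data, std::size_t inner)
{
  std::vector<float> out;
  if (inner == 0)
    return out;
  out.reserve(data.size() / inner);
  for (std::size_t start = 0; start + inner <= data.size(); start += inner)
  {
    float acc = 0.0f;
    for (std::size_t i = 0; i < inner; ++i)
      acc += data[start + i];
    out.push_back(acc);
  }
  return out;
}
```

For a 2x3 input, `sumLastAxis` with `inner` set to 3 yields one value per row. Any other axis configuration would fall through to the generic kernel, as the final `_kernel(_input, _output, axes)` call in the listing does.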

The documentation for this class was generated from the following files:

- runtime/onert/backend/cpu/ops/ReduceLayer.h

- runtime/onert/backend/cpu/ops/ReduceLayer.cc