Loading...

Searching...

No Matches

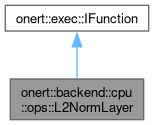

onert::backend::cpu::ops::L2NormLayer Class Reference

#include <L2NormalizationLayer.h>

Collaboration diagram for onert::backend::cpu::ops::L2NormLayer:

Public Member Functions

| Specifiers / return type | Member |
|---|---|
| | L2NormLayer () |
| void | configure (const IPortableTensor *_input, IPortableTensor *output) |
| void | run () override |

Public Member Functions inherited from onert::exec::IFunction

| Specifiers / return type | Member |
|---|---|
| virtual | ~IFunction ()=default |
| virtual void | prepare () |

Detailed Description

Definition at line 26 of file L2NormalizationLayer.h.
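The member list implies a two-phase pattern: tensors are bound once in configure(), and run() (the IFunction override) does the work each time the function executes. The following is a minimal, self-contained sketch of that pattern under stated assumptions. Tensor, DataType, IFunction, and L2NormLayerSketch are hypothetical stand-ins, not the onert types; only float data is handled, and the whole buffer is normalized as a single vector for brevity.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-ins for illustration only; these are NOT onert types.
enum class DataType
{
  FLOAT32,
  INT32 // stands in for any element type the layer does not handle
};

struct Tensor
{
  DataType type;
  std::vector<float> data; // float storage only, to keep the sketch short
};

// Same shape as the inherited interface listed above: virtual destructor,
// overridable prepare(), and a run() that each layer implements.
class IFunction
{
public:
  virtual ~IFunction() = default;
  virtual void prepare() {}
  virtual void run() = 0;
};

class L2NormLayerSketch : public IFunction
{
public:
  L2NormLayerSketch() : _input(nullptr), _output(nullptr) {}

  // Build time: bind the tensors once.
  void configure(const Tensor *input, Tensor *output)
  {
    assert(input != nullptr && output != nullptr);
    _input = input;
    _output = output;
  }

  // Execution time: dispatch on the element type; may be called many times.
  void run() override
  {
    if (_input == nullptr || _output == nullptr)
      throw std::logic_error{"L2Norm: configure() was not called"};
    switch (_input->type)
    {
      case DataType::FLOAT32:
        normalizeWholeBuffer();
        break;
      default:
        throw std::runtime_error{"L2Norm: Unsupported data type"};
    }
  }

private:
  // Treats the buffer as one vector; a full kernel would normalize each innermost row.
  void normalizeWholeBuffer()
  {
    float sum_sq = 0.0f;
    for (float v : _input->data)
      sum_sq += v * v;
    const float norm = std::max(std::sqrt(sum_sq), 1e-6f); // assumed guard against 0
    _output->data.resize(_input->data.size());
    for (std::size_t i = 0; i < _input->data.size(); ++i)
      _output->data[i] = _input->data[i] / norm;
  }

  const Tensor *_input;
  Tensor *_output;
};
```

In this sketch, calling run() before configure() throws std::logic_error, and an unhandled element type throws std::runtime_error with the same message the real run() uses.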

Constructor & Destructor Documentation

◆ L2NormLayer()

inline

Definition at line 29 of file L2NormalizationLayer.h.

```cpp
L2NormLayer() : _input(nullptr), _output(nullptr)
{
  // Nothing
}
```

Member Function Documentation

◆ configure()

void onert::backend::cpu::ops::L2NormLayer::configure(const IPortableTensor *_input, IPortableTensor *output)

Definition at line 51 of file L2NormalizationLayer.cc.

◆ run()

void onert::backend::cpu::ops::L2NormLayer::run()

override, virtual

Implements onert::exec::IFunction.

Definition at line 60 of file L2NormalizationLayer.cc.

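```cpp
{
  switch (_input->data_type())
  {
    case OperandType::FLOAT32:
      nnfw::cker::L2NormalizeFloat32(getShape(_input), getBuffer<float>(_input), getShape(_output),
                                     getBuffer<float>(_output));
      break;

    case OperandType::QUANT_UINT8_ASYMM:
    {
      nnfw::cker::L2NormParams params;
      assert(_input->data_zero_point() == 128);
      params.input_zero_point = _input->data_zero_point();
      nnfw::cker::L2NormalizeQuant8(params, getShape(_input), getBuffer<uint8_t>(_input),
                                    getShape(_output), getBuffer<uint8_t>(_output));
    }
    break;

    default:
      throw std::runtime_error{"L2Norm: Unsupported data type"};
  }
}
```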
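For FLOAT32 input, run() delegates to nnfw::cker::L2NormalizeFloat32. Assuming that kernel follows TensorFlow Lite's L2_NORMALIZATION semantics (each slice along the innermost dimension is scaled to unit Euclidean length), and viewing the tensor as an O × D matrix whose D is the last dimension:

$$
y_{o,d} = \frac{x_{o,d}}{\sqrt{\sum_{k=0}^{D-1} x_{o,k}^{2}}}, \qquad o = 0,\dots,O-1,\quad d = 0,\dots,D-1
$$

The function below is a hypothetical reference implementation of that formula, not the cker kernel. The name l2NormalizeLastAxis is illustrative, and the epsilon floor on the norm is an assumed guard for all-zero rows.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <numeric>
#include <stdexcept>
#include <vector>

// Hypothetical reference, NOT nnfw::cker::L2NormalizeFloat32: scales every
// innermost row of a row-major tensor to unit L2 norm.
void l2NormalizeLastAxis(const std::vector<int> &shape, const std::vector<float> &input,
                         std::vector<float> &output)
{
  if (shape.empty())
    throw std::invalid_argument{"shape needs at least one dimension"};
  assert(shape.back() > 0);
  const std::size_t depth = static_cast<std::size_t>(shape.back()); // D
  // O: product of all dimensions except the last one.
  const std::size_t outer = std::accumulate(shape.begin(), shape.end() - 1, std::size_t{1},
                                            std::multiplies<std::size_t>());
  if (input.size() != outer * depth)
    throw std::invalid_argument{"input size does not match shape"};

  constexpr float kEpsilon = 1e-6f; // assumed floor so all-zero rows do not divide by 0
  output.resize(input.size());
  for (std::size_t o = 0; o < outer; ++o)
  {
    const float *row = input.data() + o * depth;
    float sum_sq = 0.0f;
    for (std::size_t d = 0; d < depth; ++d)
      sum_sq += row[d] * row[d];
    const float norm = std::max(std::sqrt(sum_sq), kEpsilon);
    for (std::size_t d = 0; d < depth; ++d)
      output[o * depth + d] = row[d] / norm;
  }
}
```

For a 2 × 2 input [[3, 4], [0, 5]] this produces [[0.6, 0.8], [0, 1]]: each row is divided by its own norm (5 in both cases), so rows are normalized independently.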

Referenced declarations:

- int32_t data_zero_point() const override final (IPortableTensor.h:56)
- ir::DataType data_type() const override final (IPortableTensor.h:54)
- void L2NormalizeFloat32(const Shape &input_shape, const float *input_data, const Shape &output_shape, float *output_data) (L2Normalize.h:30)
- void L2NormalizeQuant8(L2NormParams &params, const Shape &input_shape, const uint8_t *input_data, const Shape &output_shape, uint8_t *output_data) (L2Normalize.h:56)
- nnfw::cker::Shape getShape(const IPortableTensor *tensor) (OperationUtils.h:89)


References onert::backend::IPortableTensor::data_type(), onert::backend::IPortableTensor::data_zero_point(), onert::backend::cpu::ops::getShape(), nnfw::cker::L2NormParams::input_zero_point, nnfw::cker::L2NormalizeFloat32(), and nnfw::cker::L2NormalizeQuant8().
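For QUANT_UINT8_ASYMM input, run() asserts an input zero point of 128, copies it into L2NormParams::input_zero_point, and calls nnfw::cker::L2NormalizeQuant8. The sketch below is a floating-point reference for what such a kernel computes, under two assumptions this page does not state: normalization runs over the innermost dimension, and the output uses TensorFlow Lite's fixed quantization for this op (scale 1/128, zero point 128). Because x / ‖x‖ is scale-invariant, the input scale cancels and only the zero point is needed. The cker kernel likely uses fixed-point arithmetic instead, so its results may differ from this sketch by one rounding step. The name l2NormalizeQuant8Ref is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical float reference for a uint8 L2 normalization over the last axis.
// Assumes output scale = 1/128 and output zero point = 128.
std::vector<std::uint8_t> l2NormalizeQuant8Ref(const std::vector<std::uint8_t> &input,
                                               std::size_t depth, std::int32_t input_zero_point)
{
  assert(depth > 0 && input.size() % depth == 0);
  std::vector<std::uint8_t> output(input.size());
  for (std::size_t base = 0; base < input.size(); base += depth)
  {
    // Only offsets from the zero point matter; the input scale cancels in x / ||x||.
    double sum_sq = 0.0;
    for (std::size_t d = 0; d < depth; ++d)
    {
      const std::int32_t diff = static_cast<std::int32_t>(input[base + d]) - input_zero_point;
      sum_sq += static_cast<double>(diff) * diff;
    }
    const double norm = std::sqrt(sum_sq);
    for (std::size_t d = 0; d < depth; ++d)
    {
      const std::int32_t diff = static_cast<std::int32_t>(input[base + d]) - input_zero_point;
      const double y = norm > 0.0 ? diff / norm : 0.0; // real value in [-1, 1]
      const long q = std::lround(y * 128.0) + 128;     // requantize: scale 1/128, zero point 128
      output[base + d] = static_cast<std::uint8_t>(std::clamp(q, 0L, 255L));
    }
  }
  return output;
}
```

For example, the row {131, 132} (offsets 3 and 4 from the zero point) maps to {205, 230}, the quantized form of roughly {0.6, 0.8}. A real value of exactly 1.0 would need 256, so it saturates at 255.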

The documentation for this class was generated from the following files:

- runtime/onert/backend/cpu/ops/L2NormalizationLayer.h

- runtime/onert/backend/cpu/ops/L2NormalizationLayer.cc