Loading...

Searching...

No Matches

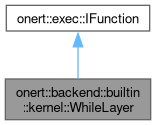

onert::backend::builtin::kernel::WhileLayer Class Reference

#include <WhileLayer.h>

Public Member Functions

| Type | Member |
| --- | --- |
| | WhileLayer (const std::vector< backend::IPortableTensor * > input_tensors, const std::vector< backend::IPortableTensor * > output_tensors, const ir::SubgraphIndex &cond_subg_index, const ir::SubgraphIndex &body_subg_index, exec::IExecutors *executors, const ir::ModelIndex &model_index, basic::DynamicMemoryManager *dyn_memory_manager, const std::shared_ptr< ExternalContext > &external_context) |
| void | run () override |

Public Member Functions inherited from onert::exec::IFunction

| Type | Member |
| --- | --- |
| virtual | ~IFunction ()=default |
| virtual void | prepare () |

Detailed Description

Definition at line 32 of file WhileLayer.h.

Constructor & Destructor Documentation

◆ WhileLayer()

onert::backend::builtin::kernel::WhileLayer::WhileLayer takes the following parameters, in order:

| Type | Parameter |
| --- | --- |
| const std::vector< backend::IPortableTensor * > | input_tensors |
| const std::vector< backend::IPortableTensor * > | output_tensors |
| const ir::SubgraphIndex & | cond_subg_index |
| const ir::SubgraphIndex & | body_subg_index |
| exec::IExecutors * | executors |
| const ir::ModelIndex & | model_index |
| basic::DynamicMemoryManager * | dyn_memory_manager |
| const std::shared_ptr< ExternalContext > & | external_context |

Definition at line 29 of file WhileLayer.cc.

36 : _cond_subg_index{cond_subg_index}, _body_subg_index{body_subg_index},

37 _input_tensors{input_tensors}, _output_tensors{output_tensors}, _executors{executors},

38 _model_index{model_index}, _dyn_memory_manager{dyn_memory_manager},

39 _external_context{external_context}

40{

41 // At this point, executors may not have executors of cond subg and body subg

42}
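The constructor comment above notes that the cond and body executors may not exist yet when the layer is built, which is why only the subgraph indices and the `executors` pointer are stored. The following is a minimal sketch of that deferred-lookup pattern, not the onert implementation: `Registry`, `LazyLayer`, and `buildThenRegister` are hypothetical names, and executors are reduced to plain integers.

```cpp
#include <cassert>
#include <map>

// Hypothetical stand-in for an executor registry that is filled in
// only after all layers have been constructed.
struct Registry
{
  std::map<int, int> executors; // subgraph index -> dummy executor id

  int at(int subg_index) const { return executors.at(subg_index); }
};

// Stores just the index and the registry pointer at construction time,
// and resolves the executor when run() is called.
class LazyLayer
{
public:
  LazyLayer(int subg_index, const Registry *registry)
    : _subg_index{subg_index}, _registry{registry}
  {
    // No lookup here: the registry may still be empty at this point.
  }

  int run() const { return _registry->at(_subg_index); }

private:
  int _subg_index;
  const Registry *_registry;
};

// Builds the layer first, registers the executor afterwards, then runs.
int buildThenRegister()
{
  Registry registry;
  LazyLayer layer{1, &registry}; // entry 1 does not exist yet
  registry.executors[1] = 42;    // registered after construction
  return layer.run();            // lookup succeeds at run time
}
```

Resolving the entry inside `run()` rather than in the constructor means construction order does not matter, at the cost of one lookup per call.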

Member Function Documentation

◆ run()

void onert::backend::builtin::kernel::WhileLayer::run () [override, virtual]

Implements onert::exec::IFunction.

Definition at line 44 of file WhileLayer.cc.

45{

46 // Copy "_input_tensors" -> "cond subg inputs"

47 // Run cond subg

48 // Start loop while output of cond subg is true

49 // // Copy "_input_tensors" -> "body subg inputs" in the first iteration, then copy "body subg

50 // outputs" -> "body subg inputs" in the second or more iterations

51 // // Run body subg

52 // // Copy "body subg outputs" -> "cond subg inputs"

53 // // Run cond subg

54 // If there is no loop copy "_input_tensors" -> "_dst_tensors", else copy "cond subg inputs" ->

55 // "_dst_tensors"

58

59 // Need a temp tensor to hold the cond subgraph output

60 assert(cond_exec->outputSize() == 1);

61 auto cond_output_tensor = [&]() {

63 tensor->set_dynamic();

66 }();

67

70 cond_exec->execute(_input_tensors, {cond_output_tensor.get()}, options);

72

74 bool ret = false;

76 return ret;

77 };

78

79 std::vector<ITensor *> op_inputs(_input_tensors.begin(), _input_tensors.end());

80 std::vector<ITensor *> op_outputs(_output_tensors.begin(), _output_tensors.end());

81 std::vector<ir::PermuteType> permute_types;

82 // Layout in graph is always NHWC, so layout is not changed

83 for (uint32_t i = 0; i < op_outputs.size(); i++)

84 permute_types.emplace_back(ir::PermuteType::SAME);

85 // Copying body inputs to outputs when the loop body is never executed

86 if (!getResultCond(cond_output_tensor.get()))

87 {

88 PermuteLayer copy_body_inputs_to_op_outputs{op_inputs, op_outputs, permute_types,

89 _external_context};

90 copy_body_inputs_to_op_outputs.run();

91 return;

92 }

93

94 // Need some temp tensors to hold the body subgraph output

95 std::vector<std::unique_ptr<Tensor>> temp_outputs_o;

96 std::vector<IPortableTensor *> temp_outputs;

97 for (uint32_t i = 0; i < body_exec->outputSize(); i++)

98 {

100 tensor->set_dynamic();

102 temp_outputs.push_back(tensor.get());

103 temp_outputs_o.push_back(std::move(tensor));

104 }

105

106 std::vector<ITensor *> body_outputs(temp_outputs.begin(), temp_outputs.end());

107 PermuteLayer copy_body_outputs_to_op_outputs{body_outputs, op_outputs, permute_types,

108 _external_context};

109

110 const auto body_execute_with_op_inputs = [&]() {

112 body_exec->execute(_input_tensors, temp_outputs, options);

114 };

115

116 const auto body_execute_with_body_outputs = [&]() {

118 body_exec->execute(_output_tensors, temp_outputs, options);

120 };

121

122 std::function<void()> body_execute = body_execute_with_op_inputs;

123 const auto cond_execute = [&]() {

125 cond_exec->execute(_output_tensors, {cond_output_tensor.get()}, options);

127 };

128

129 // Loop while Cond subgraph's output is true

130 while (getResultCond(cond_output_tensor.get()))

131 {

132 body_execute();

133 copy_body_outputs_to_op_outputs.run();

134 cond_execute();

135 body_execute = body_execute_with_body_outputs;

136 }

137

138 // Clean-up the temp tensors

139 _dyn_memory_manager->deallocate(cond_output_tensor.get());

140 for (auto &&tensor : temp_outputs)

141 {

142 _dyn_memory_manager->deallocate(tensor);

143 }

144}

- std::shared_ptr< Allocator > allocate(const ITensor *tensor, uint32_t capacity): Definition MemoryManager.cc:71

- void deallocate(const ITensor *tensor): Definition MemoryManager.cc:82

- virtual IExecutor * entryExecutor() const: Definition IExecutors.h:58

- virtual IExecutor * at(const ir::ModelIndex &model_index, const ir::SubgraphIndex &subg_index) const =0: Return executor of index.

- virtual const ExecutionOptions & currentOptions() const =0: Return current execution configuration.

References onert::backend::basic::DynamicMemoryManager::allocate(), onert::exec::IExecutors::at(), onert::exec::IExecutor::currentOptions(), onert::backend::basic::DynamicMemoryManager::deallocate(), onert::exec::IExecutors::entryExecutor(), onert::ir::SAME, and VERBOSE.
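The control flow of `run()` can be easier to follow with the tensors and executors stripped away. The sketch below keeps only the loop structure from the listing: evaluate the condition on the inputs, copy the inputs straight to the outputs if the body never runs, and otherwise alternate body, copy, and condition, with the body reading the op inputs on the first iteration and the op outputs afterwards. It is an illustration under assumptions, not the onert code: `State`, `CondFn`, `BodyFn`, `runWhile`, and `countUp` are hypothetical names, and subgraphs are modelled as plain functions over a vector of integers.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical stand-ins: a "tensor list" is a vector of ints, and the
// cond/body subgraphs are ordinary functions over that list.
using State = std::vector<int>;
using CondFn = std::function<bool(const State &)>;
using BodyFn = std::function<State(const State &)>;

// Mirrors the loop structure of the listing above.
State runWhile(const State &inputs, const CondFn &cond, const BodyFn &body)
{
  bool keep_going = cond(inputs); // first cond run uses the op inputs
  if (!keep_going)
    return inputs; // body never executed: inputs become the outputs

  State outputs;
  const State *body_input = &inputs; // first iteration reads the op inputs
  while (keep_going)
  {
    State temp = body(*body_input); // temporaries hold the body outputs
    outputs = std::move(temp);      // copy body outputs -> op outputs
    keep_going = cond(outputs);     // cond now reads the op outputs
    body_input = &outputs;          // later iterations read the op outputs
  }
  return outputs;
}

// Example loop: increment a counter until it reaches `limit`.
int countUp(int start, int limit)
{
  const State result = runWhile(
    {start},
    [limit](const State &s) { return s[0] < limit; },
    [](const State &s) { return State{s[0] + 1}; });
  return result[0];
}
```

Writing the body results into a temporary before copying them to the outputs matters because, from the second iteration on, the body reads from the same outputs it would otherwise be overwriting.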

The documentation for this class was generated from the following files:

- runtime/onert/core/src/backend/builtin/kernel/WhileLayer.h

- runtime/onert/core/src/backend/builtin/kernel/WhileLayer.cc