#include <PermuteLayer.h>

Public Member Functions

| | PermuteLayer (const std::vector< ITensor * > &src_tensors, const std::vector< ITensor * > &dst_tensors, const std::vector< ir::PermuteType > &types, const std::shared_ptr< ExternalContext > &external_context) |

| void | optimize () override |

| void | run () override |

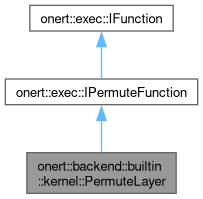

Public Member Functions inherited from onert::exec::IPermuteFunction

| virtual void | prepare () override |

Public Member Functions inherited from onert::exec::IFunction

| virtual | ~IFunction ()=default |

Additional Inherited Members

Protected Member Functions inherited from onert::exec::IPermuteFunction

| void | permute (backend::ITensor *src_tensor, backend::ITensor *dst_tensor, size_t rank, std::vector< size_t > &src_offsets, std::vector< size_t > &dst_offsets, const ir::PermuteType &permute_type) |

| const std::type_info & | underlying_type (ir::DataType type) const |

Protected Attributes inherited from onert::exec::IPermuteFunction

| std::vector< backend::ITensor * > | _src_tensors |

| std::vector< backend::ITensor * > | _dst_tensors |

| std::vector< std::vector< size_t > > | _src_tensors_offsets |

| std::vector< std::vector< size_t > > | _dst_tensors_offsets |

| std::vector< ir::PermuteType > | _permute_types |

| std::unordered_map< const backend::ITensor *, std::vector< uint8_t > > | _buffers_map |

Detailed Description

Definition at line 28 of file PermuteLayer.h.

Constructor & Destructor Documentation

◆ PermuteLayer()

onert::backend::builtin::kernel::PermuteLayer::PermuteLayer (const std::vector< ITensor * > & src_tensors, const std::vector< ITensor * > & dst_tensors, const std::vector< ir::PermuteType > & types, const std::shared_ptr< ExternalContext > & external_context)

Definition at line 24 of file PermuteLayer.cc.

References onert::exec::IPermuteFunction::_dst_tensors, onert::exec::IPermuteFunction::_dst_tensors_offsets, onert::exec::IPermuteFunction::_permute_types, onert::exec::IPermuteFunction::_src_tensors, and onert::exec::IPermuteFunction::_src_tensors_offsets.

Member Function Documentation

◆ optimize()

void onert::backend::builtin::kernel::PermuteLayer::optimize () [override, virtual]

Implements onert::exec::IPermuteFunction.

Reimplemented in onert::backend::builtin::train::kernel::PermuteLayer.

Definition at line 40 of file PermuteLayer.cc.

References onert::exec::IPermuteFunction::_dst_tensors, onert::exec::IPermuteFunction::_dst_tensors_offsets, onert::exec::IPermuteFunction::_permute_types, onert::exec::IPermuteFunction::_src_tensors, onert::exec::IPermuteFunction::_src_tensors_offsets, dst_tensor, onert::ir::NCHW_TO_NHWC, onert::ir::NHWC_TO_NCHW, onert::ir::SAME, onert::ir::sizeOfDataType(), src_tensor, and onert::exec::IPermuteFunction::underlying_type().

Referenced by onert::backend::builtin::train::kernel::PermuteLayer::optimize().
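
The reference list above names three permute types: onert::ir::NHWC_TO_NCHW, onert::ir::NCHW_TO_NHWC, and onert::ir::SAME. As a rough illustration of what these layout conversions mean for a dense 4-D tensor, the following is a minimal sketch and not the code in PermuteLayer.cc. The enum is a local stand-in for ir::PermuteType, and `permuteLayout` is a hypothetical helper introduced only for this example; it assumes a contiguous float buffer with no padding.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Local stand-in for onert::ir::PermuteType (assumption: only the three
// enumerators named in the reference list are mirrored here).
enum class PermuteType { NHWC_TO_NCHW, NCHW_TO_NHWC, SAME };

// Hypothetical helper, not part of onert: copies a dense 4-D float tensor
// into a new buffer, reordering elements according to `type`.
// n, h, w, c are the batch, height, width and channel extents.
std::vector<float> permuteLayout(const std::vector<float> &src, std::size_t n, std::size_t h,
                                 std::size_t w, std::size_t c, PermuteType type)
{
  assert(src.size() == n * h * w * c);
  if (type == PermuteType::SAME)
    return src; // identical layout on both sides: plain copy

  std::vector<float> dst(src.size());
  for (std::size_t in = 0; in < n; ++in)
    for (std::size_t ih = 0; ih < h; ++ih)
      for (std::size_t iw = 0; iw < w; ++iw)
        for (std::size_t ic = 0; ic < c; ++ic)
        {
          // Linear offset of element (in, ih, iw, ic) in each layout.
          const std::size_t nhwc = ((in * h + ih) * w + iw) * c + ic;
          const std::size_t nchw = ((in * c + ic) * h + ih) * w + iw;
          if (type == PermuteType::NHWC_TO_NCHW)
            dst[nchw] = src[nhwc];
          else
            dst[nhwc] = src[nchw];
        }
  return dst;
}
```

Applying NHWC_TO_NCHW and then NCHW_TO_NHWC with the same extents returns the original buffer, which is a convenient sanity check for the two offset formulas.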

◆ run()

void onert::backend::builtin::kernel::PermuteLayer::run () [override, virtual]

Reimplemented from onert::exec::IPermuteFunction.

Definition at line 184 of file PermuteLayer.cc.

References onert::exec::IPermuteFunction::_buffers_map, onert::exec::IPermuteFunction::_dst_tensors, onert::exec::IPermuteFunction::_dst_tensors_offsets, onert::exec::IPermuteFunction::_permute_types, onert::exec::IPermuteFunction::_src_tensors, onert::exec::IPermuteFunction::_src_tensors_offsets, onert::ir::convertShape(), dst_tensor, onert::exec::IPermuteFunction::permute(), onert::ir::SAME, src_tensor, and onert::exec::IPermuteFunction::underlying_type().

Referenced by onert::backend::builtin::train::kernel::PermuteLayer::forward().
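
run() references onert::ir::convertShape() together with the permute type. The real signature of that function is not shown on this page, so the sketch below uses a hypothetical stand-in named `convertShape4D` with a local enum in place of ir::PermuteType. It only illustrates the usual relationship between a rank-4 NHWC shape and its NCHW counterpart, under the assumption that dimensions are ordered exactly as the layout names suggest.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Local stand-in for onert::ir::PermuteType (assumption, see lead-in).
enum class PermuteType { NHWC_TO_NCHW, NCHW_TO_NHWC, SAME };

// Hypothetical helper, not the onert API: reorders the four extents of a
// shape so that it describes the same tensor in the destination layout.
std::vector<std::size_t> convertShape4D(const std::vector<std::size_t> &s, PermuteType type)
{
  assert(s.size() == 4); // this sketch handles rank-4 shapes only
  switch (type)
  {
    case PermuteType::NHWC_TO_NCHW:
      return {s[0], s[3], s[1], s[2]}; // {N, H, W, C} -> {N, C, H, W}
    case PermuteType::NCHW_TO_NHWC:
      return {s[0], s[2], s[3], s[1]}; // {N, C, H, W} -> {N, H, W, C}
    case PermuteType::SAME:
      break;
  }
  return s; // SAME: shape is unchanged
}
```

For example, an NHWC shape of {1, 224, 224, 3} maps to {1, 3, 224, 224} under NHWC_TO_NCHW, and the reverse conversion restores the original extents.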

The documentation for this class was generated from the following files:

- runtime/onert/core/src/backend/builtin/kernel/PermuteLayer.h

- runtime/onert/core/src/backend/builtin/kernel/PermuteLayer.cc