Loading...

Searching...

No Matches

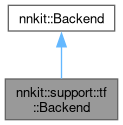

nnkit::support::tf::Backend Class Reference [final]

#include <Backend.h>


Public Member Functions

| Type | Member |
| --- | --- |
| | Backend ()=delete |
| | Backend (const Backend &)=delete |
| | Backend (Backend &&)=delete |
| | Backend (const char *pb_path, const char *info_path) |
| void | prepare (const std::function< void(nnkit::TensorContext &)> &f) override |
| void | run (void) override |
| void | teardown (const std::function< void(nnkit::TensorContext &)> &f) override |

Public Member Functions inherited from nnkit::Backend

| Type | Member |
| --- | --- |
| virtual | ~Backend ()=default |
| virtual void | prepare (const std::function< void(TensorContext &)> &f)=0 |
| virtual void | teardown (const std::function< void(TensorContext &)> &f)=0 |

Detailed Description

Constructor & Destructor Documentation

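◆ Backend() [1/4]

nnkit::support::tf::Backend::Backend () = delete

◆ Backend() [2/4]

nnkit::support::tf::Backend::Backend (const Backend &) = delete

◆ Backend() [3/4]

nnkit::support::tf::Backend::Backend (Backend &&) = delete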

◆ Backend() [4/4]

nnkit::support::tf::Backend::Backend (const char *pb_path, const char *info_path)

Definition at line 40 of file Backend.cpp.

```cpp
  : _tf_runner(pb_path)
{
  // ... (line 42 missing from this listing; parsed_tensors is obtained here)
  for (auto &parsed_tensor : parsed_tensors)
  {
    // ... (line 45 missing from this listing; condition selecting input tensors)
    {
      // user didn't specify input
      if (!parsed_tensor->hasShape())
      {
        angkor::TensorShape shape;
        // ... (lines 51-52 missing from this listing; the statement ends with the message below)
          "Info you provided may be wrong or not enough. Please check the info file.");

        parsed_tensor->mutable_shape().resize(shape.rank());
        // ... (line 56 missing from this listing; loop over r)
        {
          parsed_tensor->mutable_shape().dim(r) = shape.dim(r);
        }
      }
      _inputs.emplace_back(std::move(parsed_tensor));
    }
    else
      _outputs.emplace_back(std::move(parsed_tensor));
  }
}
```

Symbols referenced in the listing above:

- Definition Shape.h:34
- bool getTensorShapeFromGraphDef(const std::unique_ptr< ParsedTensor > &tensor, angkor::TensorShape &shape): Get tensor shape from GraphDef for input tensor only. Definition Runner.cpp:168
- std::vector< std::unique_ptr< ParsedTensor > > parse(const char *info_path): Function to parse test.info. Definition TensorInfoParser.cpp:204

References nncc::core::ADT::tensor::Shape::dim(), nnkit::support::tf::Runner::getTensorShapeFromGraphDef(), nnkit::support::tftestinfo::ParsedTensor::Input, nnkit::support::tftestinfo::parse(), and nncc::core::ADT::tensor::Shape::rank().
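The constructor's flow (split parsed tensors into inputs and outputs, and recover a missing input shape from the model or fail) can be illustrated in isolation. The following is a minimal sketch, not the nnkit implementation: `Tensor`, `Kind`, `ShapeLookup`, `partition`, and `demo_input_rank` are hypothetical stand-ins introduced here, and `std::runtime_error` is an assumed error type, since the exception thrown by the real code is not visible in the listing.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <memory>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical stand-ins; these are NOT the nnkit types.
enum class Kind { Input, Output };

struct Tensor
{
  Tensor(Kind k, std::vector<uint32_t> s) : kind(k), shape(std::move(s)) {}
  bool hasShape() const { return !shape.empty(); }

  Kind kind;
  std::vector<uint32_t> shape; // empty means "no shape given in the info file"
};

using TensorList = std::vector<std::unique_ptr<Tensor>>;

// Tries to recover a shape from the model; returns false when it cannot.
using ShapeLookup = std::function<bool(const Tensor &, std::vector<uint32_t> &)>;

// Moves each parsed tensor into `inputs` or `outputs`. An input without a
// shape is completed through `lookup`; a failed lookup aborts with an exception
// (std::runtime_error is an assumption of this sketch).
void partition(TensorList parsed, const ShapeLookup &lookup, TensorList &inputs,
               TensorList &outputs)
{
  for (auto &t : parsed)
  {
    if (t->kind == Kind::Input)
    {
      if (!t->hasShape())
      {
        std::vector<uint32_t> shape;
        if (!lookup(*t, shape))
          throw std::runtime_error("shape missing from both the info file and the model");
        t->shape = std::move(shape);
      }
      inputs.emplace_back(std::move(t));
    }
    else
    {
      outputs.emplace_back(std::move(t));
    }
  }
}

// Driver: one input without a shape and one output; the lookup supplies {1, 3}.
std::size_t demo_input_rank()
{
  TensorList parsed;
  parsed.push_back(std::make_unique<Tensor>(Kind::Input, std::vector<uint32_t>{}));
  parsed.push_back(std::make_unique<Tensor>(Kind::Output, std::vector<uint32_t>{}));

  TensorList inputs, outputs;
  partition(std::move(parsed),
            [](const Tensor &, std::vector<uint32_t> &s) {
              s = {1, 3};
              return true;
            },
            inputs, outputs);
  return inputs.at(0)->shape.size();
}
```

Taking the lookup as a callback keeps the sketch independent of any model format; in the class documented here that role appears to be played by `getTensorShapeFromGraphDef()`.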

Member Function Documentation

◆ prepare()

void nnkit::support::tf::Backend::prepare (const std::function< void(nnkit::TensorContext &)> &f) override

Definition at line 68 of file Backend.cpp.

```cpp
{
  for (const auto &input_tensor : _inputs)
    _data_map.allocate(input_tensor.get());

  TensorContext ctx(_inputs, _data_map);
  f(ctx); // fill values

  _tf_runner.prepareInputs(_inputs, _data_map);
  _tf_runner.prepareOutputs(_outputs);
}
```

Symbols referenced in the listing above:

- void prepareInputs(const std::vector< std::unique_ptr< ParsedTensor > > &inputs, TensorDataMap &data_map): Definition Runner.cpp:242
- void prepareOutputs(const std::vector< std::unique_ptr< ParsedTensor > > &outputs): Definition Runner.cpp:274


References nnkit::support::tf::TensorDataMap::allocate(), nnkit::support::tf::Runner::prepareInputs(), and nnkit::support::tf::Runner::prepareOutputs().
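The allocate-then-fill pattern used by prepare() can be sketched without TensorFlow: buffers are allocated for every input first, and only then is the caller's callback given a view through which it writes values. This is an illustrative sketch under stated assumptions; `Tensor`, `DataMap`, `Context`, the free function `prepare`, and `demo_prepare` are hypothetical stand-ins, not the nnkit `ParsedTensor`, `TensorDataMap`, or `TensorContext` types.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <map>
#include <memory>
#include <vector>

// Hypothetical stand-in for a parsed tensor: only its byte size matters here.
struct Tensor
{
  std::size_t byte_size;
};

using TensorList = std::vector<std::unique_ptr<Tensor>>;

// Owns one byte buffer per tensor, keyed by the tensor's address.
class DataMap
{
public:
  // Creates (or resets) a zero-filled buffer for `t` and returns it.
  uint8_t *allocate(const Tensor *t)
  {
    auto &buf = _buffers[t];
    buf.assign(t->byte_size, 0);
    return buf.data();
  }

  // Returns the buffer of an already allocated tensor; throws otherwise.
  uint8_t *data(const Tensor *t) { return _buffers.at(t).data(); }

private:
  std::map<const Tensor *, std::vector<uint8_t>> _buffers;
};

// View handed to the user callback: indexed access to tensors and their bytes.
class Context
{
public:
  Context(const TensorList &tensors, DataMap &map) : _tensors(tensors), _map(map) {}

  std::size_t size() const { return _tensors.size(); }
  std::size_t byte_size(std::size_t n) const { return _tensors.at(n)->byte_size; }
  uint8_t *bytes(std::size_t n) { return _map.data(_tensors.at(n).get()); }

private:
  const TensorList &_tensors;
  DataMap &_map;
};

// Allocate every input buffer first, then let the callback fill the values.
void prepare(const TensorList &inputs, DataMap &map, const std::function<void(Context &)> &fill)
{
  for (const auto &t : inputs)
    map.allocate(t.get());

  Context ctx(inputs, map);
  fill(ctx);
}

// Driver: one 4-byte input, every byte set to 7 by the callback.
uint8_t demo_prepare()
{
  TensorList inputs;
  inputs.push_back(std::make_unique<Tensor>(Tensor{4}));

  DataMap map;
  prepare(inputs, map, [](Context &ctx) {
    for (std::size_t n = 0; n < ctx.size(); ++n)
      for (std::size_t i = 0; i < ctx.byte_size(n); ++i)
        ctx.bytes(n)[i] = 7;
  });
  return map.data(inputs[0].get())[3];
}
```

Allocating before invoking the callback means the callback never has to manage memory itself; it only writes into storage that the backend owns.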

◆ run()

void nnkit::support::tf::Backend::run (void) override virtual

Implements nnkit::Backend.

Definition at line 80 of file Backend.cpp.

```cpp
{
  _tf_runner.run();

  // get result
  // ... (line 85 missing from this listing; actual_outputs is obtained here)

  for (int n = 0; n < _outputs.size(); n++)
  {
    auto actual = actual_outputs[n];
    const size_t byte_size = TF_TensorByteSize(actual);
    const uint8_t *tf_data = reinterpret_cast<const uint8_t *>(TF_TensorData(actual));

    const uint32_t shape_rank = TF_NumDims(actual);
    _outputs[n]->mutable_shape().resize(shape_rank);
    // ... (line 95 missing from this listing; loop over r)
    {
      _outputs[n]->mutable_shape().dim(r) = TF_Dim(actual, r);
    }
    // ... (line 99 missing from this listing; dest is obtained here)

    std::memcpy(dest, tf_data, byte_size);
  }
}
```

Symbols referenced in the listing above:

- uint8_t * allocate(const ParsedTensor *parsed_tensor): Definition TensorDataMap.h:48

References nnkit::support::tf::TensorDataMap::allocate(), nnkit::support::tf::Runner::output(), and nnkit::support::tf::Runner::run().
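The per-output work in run() amounts to two copies: the runtime tensor's dimensions into the recorded shape, and its raw bytes into a backend-owned buffer. The sketch below shows that step with plain containers instead of the TensorFlow C API calls seen in the listing (`TF_NumDims`, `TF_Dim`, `TF_TensorByteSize`, `TF_TensorData`). `RuntimeTensor`, `OutputRecord`, and `copy_output` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical stand-in for a tensor handed back by an inference runtime.
struct RuntimeTensor
{
  std::vector<int64_t> dims;  // one entry per dimension
  std::vector<uint8_t> bytes; // raw element data
};

// Hypothetical stand-in for what the backend records per output.
struct OutputRecord
{
  std::vector<uint32_t> shape;
  std::vector<uint8_t> data;
};

// Copies shape and raw bytes from the runtime tensor into the record.
void copy_output(const RuntimeTensor &actual, OutputRecord &out)
{
  // Resize the recorded shape to the actual rank, then copy each dimension.
  const std::size_t rank = actual.dims.size();
  out.shape.resize(rank);
  for (std::size_t r = 0; r < rank; ++r)
    out.shape[r] = static_cast<uint32_t>(actual.dims[r]);

  // Size the destination before copying; skip memcpy for empty tensors.
  out.data.resize(actual.bytes.size());
  if (!actual.bytes.empty())
    std::memcpy(out.data.data(), actual.bytes.data(), actual.bytes.size());
}
```

Reading the shape from the actual result, rather than trusting the shape declared up front, lets the record reflect dimensions that are only known after execution.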

◆ teardown()

void nnkit::support::tf::Backend::teardown (const std::function< void(nnkit::TensorContext &)> &f) override

Definition at line 105 of file Backend.cpp.

```cpp
{
  TensorContext ctx(_outputs, _data_map);
  f(ctx);
}
```
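Taken together, the three members suggest a fixed call order: prepare() to fill inputs, run() to execute, teardown() to read outputs. The following self-contained sketch mirrors that contract with a toy backend. It is not nnkit code: the `Backend` and `Context` types below are simplified hypothetical stand-ins declared in the block itself, and `DoublingBackend` and `drive` exist only for this example.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical, simplified stand-in for a tensor context.
struct Context
{
  std::vector<float> values;
};

// Hypothetical interface mirroring the prepare/run/teardown contract.
class Backend
{
public:
  virtual ~Backend() = default;

  virtual void prepare(const std::function<void(Context &)> &f) = 0;
  virtual void run(void) = 0;
  virtual void teardown(const std::function<void(Context &)> &f) = 0;
};

// Toy backend: its "model" doubles every input value.
class DoublingBackend final : public Backend
{
public:
  // Hands the input context to the caller so it can fill values.
  void prepare(const std::function<void(Context &)> &f) override { f(_in); }

  // Computes the outputs from the current inputs.
  void run(void) override
  {
    _out.values.clear();
    for (float v : _in.values)
      _out.values.push_back(2.0f * v);
  }

  // Hands the output context to the caller so it can read results.
  void teardown(const std::function<void(Context &)> &f) override { f(_out); }

private:
  Context _in;
  Context _out;
};

// Drives any backend through the three phases and returns what teardown saw.
std::vector<float> drive(Backend &backend, const std::vector<float> &input)
{
  std::vector<float> result;
  backend.prepare([&](Context &ctx) { ctx.values = input; });
  backend.run();
  backend.teardown([&](Context &ctx) { result = ctx.values; });
  return result;
}
```

Because the caller interacts only through callbacks, the same driver works for any backend that honors the three-phase order, whatever runtime sits behind it.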

The documentation for this class was generated from the following files:

- compiler/nnkit-tf/support/include/nnkit/support/tf/Backend.h

- compiler/nnkit-tf/support/src/Backend.cpp