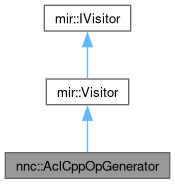

Implements the visitor for the model IR (MIR) that generates the DOM description, which the ACL soft backend code generators then translate into C++ source and header files.

#include <AclCppOpGenerator.h>

Public Member Functions

| Type | Member | Description |
| --- | --- | --- |
| | AclCppOpGenerator (const std::string &name, std::ostream &par_out) | |
| const ArtifactModule & | generate (mir::Graph *g) | The main interface function of the class. Converts the model IR to the DOM. |
| void | visit (mir::ops::AddOp &op) override | Implementations of the MIR visitors. |
| void | visit (mir::ops::AvgPool2DOp &op) override | |
| void | visit (mir::ops::CappedReluOp &op) override | |
| void | visit (mir::ops::ConcatOp &op) override | |
| void | visit (mir::ops::ConstantOp &op) override | |
| void | visit (mir::ops::Conv2DOp &op) override | |
| void | visit (mir::ops::DeConv2DOp &op) override | |
| void | visit (mir::ops::DepthwiseConv2DOp &op) override | |
| void | visit (mir::ops::DivOp &op) override | |
| void | visit (mir::ops::EluOp &op) override | |
| void | visit (mir::ops::FullyConnectedOp &op) override | |
| void | visit (mir::ops::GatherOp &op) override | |
| void | visit (mir::ops::InputOp &op) override | |
| void | visit (mir::ops::LeakyReluOp &op) override | |
| void | visit (mir::ops::MaxOp &op) override | |
| void | visit (mir::ops::MaxPool2DOp &op) override | |
| void | visit (mir::ops::MulOp &op) override | |
| void | visit (mir::ops::OutputOp &op) override | |
| void | visit (mir::ops::PadOp &op) override | |
| void | visit (mir::ops::ReluOp &op) override | |
| void | visit (mir::ops::ReshapeOp &op) override | |
| void | visit (mir::ops::ResizeOp &op) override | |
| void | visit (mir::ops::SigmoidOp &op) override | |
| void | visit (mir::ops::SliceOp &op) override | |
| void | visit (mir::ops::SoftmaxOp &op) override | |
| void | visit (mir::ops::SqrtOp &op) override | |
| void | visit (mir::ops::SqueezeOp &op) override | |
| void | visit (mir::ops::SubOp &op) override | |
| void | visit (mir::ops::TanhOp &op) override | |
| void | visit (mir::ops::TransposeOp &op) override | |
| template<typename Op > shared_ptr< ArtifactVariable > | genPadStrideInfo (const Op &op, const string &prefix, ArtifactBlock *block) | |
| template<typename Op > void | genConvolution (Op &op, const string &acl_func_name, const string &suffix) | |
| template<typename T > std::shared_ptr< ArtifactId > | genVectorInitializedVar (ArtifactBlock *block, const string &type, const string &name, const vector< T > &init) | |

Public Member Functions inherited from mir::IVisitor

| Type | Member | Description |
| --- | --- | --- |
| virtual | ~IVisitor ()=default | |

Protected Member Functions

| Type | Member | Description |
| --- | --- | --- |
| void | visit_fallback (mir::Operation &op) override | |

Detailed Description


Definition at line 37 of file AclCppOpGenerator.h.
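The class follows the visitor pattern: each MIR operation dispatches to the matching `visit()` overload, and an operation without its own override falls through to `visit_fallback()`. The sketch below shows that double dispatch in isolation. `Operation`, `AddOp`, `ReluOp`, `Visitor` and `SketchGenerator` are hypothetical simplifications rather than the real `mir` types, and throwing from the fallback is our assumption about how unsupported operations are reported.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-ins for mir::Operation and two concrete operations.
struct Operation;
struct AddOp;
struct ReluOp;

// Base visitor: each visit() overload defaults to visit_fallback(), the role
// mir::Visitor is assumed to play for AclCppOpGenerator.
class Visitor {
public:
  virtual ~Visitor() = default;
  virtual void visit(AddOp &op);
  virtual void visit(ReluOp &op);

protected:
  virtual void visit_fallback(Operation &op) = 0;
};

struct Operation {
  virtual ~Operation() = default;
  virtual void accept(Visitor &v) = 0; // double-dispatch entry point
};

struct AddOp : Operation {
  void accept(Visitor &v) override { v.visit(*this); }
};

struct ReluOp : Operation {
  void accept(Visitor &v) override { v.visit(*this); }
};

void Visitor::visit(AddOp &op) { visit_fallback(op); }
void Visitor::visit(ReluOp &op) { visit_fallback(op); }

// A generator that supports AddOp only; every other operation is rejected.
class SketchGenerator : public Visitor {
public:
  using Visitor::visit; // keep the inherited overloads visible
  void visit(AddOp &) override { emitted.push_back("arm_compute::CLArithmeticAddition"); }
  std::vector<std::string> emitted;

protected:
  void visit_fallback(Operation &) override {
    throw std::runtime_error("operation is not supported by this backend");
  }
};
```

Adding support for a new operation then means overriding one more `visit()` overload; everything else keeps landing in the fallback.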

Constructor & Destructor Documentation

◆ AclCppOpGenerator()

| nnc::AclCppOpGenerator::AclCppOpGenerator | ( | const std::string & | name, | std::ostream & | par_out | ) |

Definition at line 35 of file AclCppOpGenerator.cpp.

Member Function Documentation

◆ genConvolution()

| void nnc::AclCppOpGenerator::genConvolution | ( | Op & | op, | const string & | acl_func_name, | const string & | suffix | ) |

Definition at line 494 of file AclCppOpGenerator.cpp.
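`genConvolution()` is a template parameterized by the ACL function class to instantiate and a suffix for the generated variable, so it is presumably shared by the convolution visitors. A minimal sketch of that selection step, assuming the `Conv2DOp` visitor passes `arm_compute::CLConvolutionLayer` and the `DepthwiseConv2DOp` visitor passes `arm_compute::CLDepthwiseConvolutionLayer` (both real ACL functions; the pairing and the suffix strings are our guesses). `ConvKind`, `ConvTarget`, `selectConvTarget` and `declareLayer` are hypothetical, and the real method builds DOM nodes rather than strings.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Hypothetical subset of the convolution-like MIR operations.
enum class ConvKind { Conv2D, DepthwiseConv2D };

struct ConvTarget {
  std::string aclFuncName; // ACL runtime function class to instantiate
  std::string suffix;      // appended to the generated layer variable name
};

// Assumed mapping. The Conv2DOp visitor consults getNumGroups(), so this
// sketch rejects grouped convolutions.
ConvTarget selectConvTarget(ConvKind kind, int numGroups = 1) {
  switch (kind) {
  case ConvKind::Conv2D:
    if (numGroups != 1)
      throw std::invalid_argument("grouped convolution is not supported");
    return {"arm_compute::CLConvolutionLayer", "_convolution_layer"};
  case ConvKind::DepthwiseConv2D:
    return {"arm_compute::CLDepthwiseConvolutionLayer", "_depthwise_convolution_layer"};
  }
  throw std::logic_error("unknown convolution kind");
}

// Declaration a generator could emit for one layer object.
std::string declareLayer(const std::string &opName, const ConvTarget &t) {
  return t.aclFuncName + " " + opName + t.suffix + ";";
}
```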

◆ generate()

| const ArtifactModule & nnc::AclCppOpGenerator::generate | ( | mir::Graph * | g | ) |

The main interface function of the class. Converts the model IR to the DOM.

- Parameters
  - g: pointer to the model IR graph.
- Returns
  - Reference to the top-level DOM entity.

Definition at line 41 of file AclCppOpGenerator.cpp.

References nnc::ArtifactModule::addHeaderInclude(), nnc::ArtifactModule::addHeaderSysInclude(), nnc::ArtifactBlock::call(), nnc::ArtifactFactory::call(), nnc::ArtifactModule::createClass(), nnc::ArtifactBlock::ifCond(), nnc::ArtifactFactory::lit(), nnc::ArtifactModule::name(), and nnc::scope.

Referenced by nnc::AclCppCodeGenerator::run(), TEST(), TEST(), TEST(), TEST(), TEST(), TEST(), TEST(), TEST(), and TEST().
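`generate()` returns a reference to the top-level DOM entity, which suggests that the generator owns the module it builds. The sketch below models that ownership with a hypothetical `ModuleSketch`/`GeneratorSketch` pair that records includes and one statement per operation as plain strings; the include names and the `_<op>_layer.run();` statement format are illustrative assumptions, not what the real generator emits.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, heavily simplified stand-in for nnc::ArtifactModule.
struct ModuleSketch {
  std::string className;
  std::vector<std::string> headerIncludes;    // emitted as #include "..."
  std::vector<std::string> headerSysIncludes; // emitted as #include <...>
  std::vector<std::string> inferenceBody;     // one statement per operation
};

class GeneratorSketch {
public:
  explicit GeneratorSketch(std::string name) { _module.className = std::move(name); }

  // Walks the operations (assumed already in topological order) and returns
  // a reference to a module owned by the generator itself.
  const ModuleSketch &generate(const std::vector<std::string> &opsInOrder) {
    _module.headerIncludes.push_back("arm_compute/runtime/CL/CLFunctions.h");
    _module.headerSysIncludes.push_back("fstream");
    for (const std::string &op : opsInOrder)
      _module.inferenceBody.push_back("_" + op + "_layer.run();");
    return _module;
  }

private:
  ModuleSketch _module;
};
```

Because this `generate()` hands back a reference to a member, the generator must outlive every use of the returned module.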

◆ genPadStrideInfo()

| shared_ptr< ArtifactVariable > nnc::AclCppOpGenerator::genPadStrideInfo | ( | const Op & | op, | const string & | prefix, | ArtifactBlock * | block | ) |

Definition at line 203 of file AclCppOpGenerator.cpp.

References mir::Shape::dim(), mir::Shape::rank(), and nnc::ArtifactBlock::var().
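`genPadStrideInfo()` emits a variable describing the strides and paddings of a convolution or pooling layer. ACL's `arm_compute::PadStrideInfo` constructor takes `(stride_x, stride_y, pad_left, pad_right, pad_top, pad_bottom, round)`, where x is width and y is height, so height-then-width values have to be reordered. A string-emitting sketch, assuming 2-D `{height, width}` inputs and `FLOOR` rounding; `padStrideInfoDecl` and the `<prefix>_pad_stride_info` naming are hypothetical.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical emitter: strides and paddings are given as {height, width}.
std::string padStrideInfoDecl(const std::string &prefix, const std::vector<int> &strides,
                              const std::vector<int> &padBefore,
                              const std::vector<int> &padAfter) {
  if (strides.size() != 2 || padBefore.size() != 2 || padAfter.size() != 2)
    throw std::invalid_argument("expected 2-D strides and paddings");
  // PadStrideInfo order: stride_x, stride_y, pad_left, pad_right, pad_top,
  // pad_bottom, rounding (x = width = index 1, y = height = index 0).
  return "arm_compute::PadStrideInfo " + prefix + "_pad_stride_info(" +
         std::to_string(strides[1]) + ", " + std::to_string(strides[0]) + ", " +
         std::to_string(padBefore[1]) + ", " + std::to_string(padAfter[1]) + ", " +
         std::to_string(padBefore[0]) + ", " + std::to_string(padAfter[0]) +
         ", arm_compute::DimensionRoundingType::FLOOR);";
}
```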

◆ genVectorInitializedVar()

| std::shared_ptr< ArtifactId > nnc::AclCppOpGenerator::genVectorInitializedVar | ( | ArtifactBlock * | block, | const string & | type, | const string & | name, | const vector< T > & | init | ) |

Definition at line 715 of file AclCppOpGenerator.cpp.

References type, and nnc::ArtifactBlock::var().
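`genVectorInitializedVar()` declares a variable of a given type initialized from the elements of a `std::vector<T>`, for example a shape. A text-emitting sketch, assuming brace initialization; the real method creates DOM nodes through `ArtifactBlock::var()`, and `vectorInitializedVar` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical analogue: emits `type name{v0, v1, ...};` as text.
template <typename T>
std::string vectorInitializedVar(const std::string &type, const std::string &name,
                                 const std::vector<T> &init) {
  std::ostringstream os;
  os << type << " " << name << "{";
  for (std::size_t i = 0; i < init.size(); ++i)
    os << (i ? ", " : "") << init[i]; // comma-separate the initializers
  os << "};";
  return os.str();
}
```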

◆ visit() [1/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::AddOp & | op | ) | override |

Implementations of the MIR visitors.

- Parameters
  - op: the MIR operation to translate.

Definition at line 958 of file AclCppOpGenerator.cpp.

References mir::Operation::getInput(), mir::Operation::getNumInputs(), and mir::Operation::getOutput().
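This overload handles `mir::ops::AddOp`. A sketch of lowering the binary element-wise operations (add, sub, mul, div, max) onto ACL OpenCL runtime functions follows. The ACL class names are real, but the mapping and the two-operand restriction are our assumptions, and `BinaryKind`, `aclBinaryFunction` and `declareBinaryLayer` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>

// Hypothetical enumeration of the binary element-wise MIR operations.
enum class BinaryKind { Add, Sub, Mul, Div, Max };

// Assumed mapping onto ACL OpenCL runtime functions.
std::string aclBinaryFunction(BinaryKind kind) {
  switch (kind) {
  case BinaryKind::Add: return "arm_compute::CLArithmeticAddition";
  case BinaryKind::Sub: return "arm_compute::CLArithmeticSubtraction";
  case BinaryKind::Mul: return "arm_compute::CLPixelWiseMultiplication";
  case BinaryKind::Div: return "arm_compute::CLArithmeticDivision";
  case BinaryKind::Max: return "arm_compute::CLElementwiseMax";
  }
  throw std::logic_error("unknown element-wise kind");
}

// The real visitor queries getNumInputs(); as an assumption, this sketch
// accepts only the two-operand form.
std::string declareBinaryLayer(BinaryKind kind, std::size_t numInputs, const std::string &name) {
  if (numInputs != 2)
    throw std::invalid_argument("element-wise operation expects two inputs");
  return aclBinaryFunction(kind) + " " + name + "_layer;";
}
```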

◆ visit() [2/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::AvgPool2DOp & | op | ) | override |

Definition at line 253 of file AclCppOpGenerator.cpp.

References mir::ops::AvgPool2DOp::getIncludePad().
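The `AvgPool2DOp` visitor reads `getIncludePad()`, which decides whether padded positions count toward the averaging divisor. The worked 1-D example below shows the difference. In ACL the same choice is expressed by the `exclude_padding` argument of `arm_compute::PoolingLayerInfo`; we assume the generator passes the negation of `getIncludePad()` there. `avgPoolAt` is a hypothetical helper.

```cpp
#include <cassert>
#include <vector>

// 1-D average pooling at one output position. Positions outside `x` are
// padding: they add nothing to the sum, and `includePad` decides whether
// they still count toward the divisor.
double avgPoolAt(const std::vector<double> &x, int start, int window, bool includePad) {
  double sum = 0.0;
  int valid = 0;
  for (int i = start; i < start + window; ++i) {
    if (i >= 0 && i < static_cast<int>(x.size())) {
      sum += x[i];
      ++valid;
    }
  }
  // Assumes the window overlaps at least one real element when !includePad.
  return sum / (includePad ? window : valid);
}
```

With `x = {4, 8}` and a window of 2 starting one position before the data, the sum is 4 either way, but the result is 2 with padding counted and 4 without.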

◆ visit() [3/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::CappedReluOp & | op | ) | override |

Definition at line 301 of file AclCppOpGenerator.cpp.

References mir::ops::CappedReluOp::getCap().
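The `CappedReluOp` visitor reads `getCap()`. Capped ReLU clamps its input to `[0, cap]`, which matches ACL's `BOUNDED_RELU` activation, `min(a, max(0, x))` with `a = cap`; whether the generator uses exactly that activation is an assumption. A scalar reference version, with `cappedRelu` as a hypothetical name:

```cpp
#include <algorithm>
#include <cassert>

// Capped ReLU: clamp x into [0, cap], i.e. min(cap, max(0, x)).
float cappedRelu(float x, float cap) { return std::min(cap, std::max(0.0f, x)); }
```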

◆ visit() [4/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::ConcatOp & | op | ) | override |

Definition at line 87 of file AclCppOpGenerator.cpp.

References nnc::ArtifactBlock::call(), mir::ops::ConcatOp::getAxis(), mir::Operation::getInputs(), mir::Operation::getOutput(), nnc::ArtifactFactory::id(), nnc::ArtifactFactory::ref(), and nnc::ArtifactBlock::var().
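The `ConcatOp` visitor reads `getAxis()`. MIR shapes list dimensions outermost-first, while `arm_compute::TensorShape` stores the innermost dimension at index 0, so an axis generally has to be mirrored before it reaches ACL. A sketch of that conversion, assuming this reversed-order convention; `toAclAxis` is hypothetical.

```cpp
#include <cassert>
#include <stdexcept>

// MIR lists dimensions outermost-first (e.g. N, H, W, C), whereas
// arm_compute::TensorShape keeps the innermost dimension at index 0.
// Under that assumption, MIR axis `axis` of a rank-`rank` tensor maps to
// ACL dimension rank - 1 - axis.
int toAclAxis(int axis, int rank) {
  if (axis < 0 || axis >= rank)
    throw std::out_of_range("axis out of range");
  return rank - 1 - axis;
}
```

For an NHWC tensor, concatenating along channels (MIR axis 3) becomes ACL dimension 0.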

◆ visit() [5/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::ConstantOp & | op | ) | override |

Definition at line 338 of file AclCppOpGenerator.cpp.

References mir::Operation::getOutput(), and mir::ops::ConstantOp::getValue().

◆ visit() [6/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::Conv2DOp & | op | ) | override |

Definition at line 116 of file AclCppOpGenerator.cpp.

References mir::ops::Conv2DOp::getNumGroups().

◆ visit() [7/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::DeConv2DOp & | op | ) | override |

Definition at line 392 of file AclCppOpGenerator.cpp.

◆ visit() [8/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::DepthwiseConv2DOp & | op | ) | override |

Definition at line 122 of file AclCppOpGenerator.cpp.

◆ visit() [9/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::DivOp & | op | ) | override |

Definition at line 976 of file AclCppOpGenerator.cpp.

◆ visit() [10/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::EluOp & | op | ) | override |

Definition at line 397 of file AclCppOpGenerator.cpp.

◆ visit() [11/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::FullyConnectedOp & | op | ) | override |

Definition at line 264 of file AclCppOpGenerator.cpp.

References mir::Operation::getInput(), mir::Operation::getNumInputs(), mir::Operation::getOutput(), and mir::TensorVariant::getShape().

◆ visit() [12/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::GatherOp & | op | ) | override |

Definition at line 941 of file AclCppOpGenerator.cpp.

◆ visit() [13/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::InputOp & | op | ) | override |

Definition at line 306 of file AclCppOpGenerator.cpp.

References mir::Operation::getOutput().

◆ visit() [14/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::LeakyReluOp & | op | ) | override |

Definition at line 948 of file AclCppOpGenerator.cpp.

References mir::ops::LeakyReluOp::getAlpha().
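The `LeakyReluOp` visitor reads `getAlpha()`, the slope applied to negative inputs. ACL offers the same function as its `LEAKY_RELU` activation with `a = alpha`; that the generator targets it is our assumption. A scalar reference version, with `leakyRelu` as a hypothetical name:

```cpp
#include <cassert>

// Leaky ReLU: identity for positive x, alpha * x otherwise.
float leakyRelu(float x, float alpha) { return x > 0.0f ? x : alpha * x; }
```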

◆ visit() [15/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::MaxOp & | op | ) | override |

Definition at line 978 of file AclCppOpGenerator.cpp.

◆ visit() [16/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::MaxPool2DOp & | op | ) | override |

Definition at line 258 of file AclCppOpGenerator.cpp.

◆ visit() [17/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::MulOp & | op | ) | override |

Definition at line 980 of file AclCppOpGenerator.cpp.

References mir::Operation::getInput(), mir::Operation::getNumInputs(), and mir::Operation::getOutput().

◆ visit() [18/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::OutputOp & | op | ) | override |

Definition at line 953 of file AclCppOpGenerator.cpp.

◆ visit() [19/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::PadOp & | op | ) | override |

Definition at line 402 of file AclCppOpGenerator.cpp.

References mir::Operation::getInput(), mir::Operation::getNumInputs(), mir::Operation::getOutput(), mir::ops::PadOp::getPaddingAfter(), mir::ops::PadOp::getPaddingBefore(), and mir::ops::PadOp::getPaddingValue().
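The `PadOp` visitor reads `getPaddingBefore()`, `getPaddingAfter()` and `getPaddingValue()`. Each output dimension grows by its before and after padding, and ACL's `arm_compute::PaddingList` (a vector of `(before, after)` pairs of `uint32_t`) is indexed in ACL dimension order. A sketch assuming that order is the reverse of MIR's; `paddedShape` and `toAclPaddingList` are hypothetical helpers.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <utility>
#include <vector>

// Output dimension i = before[i] + input[i] + after[i].
std::vector<int> paddedShape(const std::vector<int> &in, const std::vector<int> &before,
                             const std::vector<int> &after) {
  if (in.size() != before.size() || in.size() != after.size())
    throw std::invalid_argument("padding rank does not match input rank");
  std::vector<int> out(in.size());
  for (std::size_t i = 0; i < in.size(); ++i)
    out[i] = before[i] + in[i] + after[i];
  return out;
}

// Same layout as arm_compute::PaddingList: (before, after) pairs, listed
// innermost dimension first, i.e. MIR order reversed (an assumption).
std::vector<std::pair<std::uint32_t, std::uint32_t>>
toAclPaddingList(const std::vector<int> &before, const std::vector<int> &after) {
  if (before.size() != after.size())
    throw std::invalid_argument("before/after padding ranks differ");
  std::vector<std::pair<std::uint32_t, std::uint32_t>> list;
  for (std::size_t i = before.size(); i-- > 0;)
    list.emplace_back(static_cast<std::uint32_t>(before[i]),
                      static_cast<std::uint32_t>(after[i]));
  return list;
}
```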

◆ visit() [20/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::ReluOp & | op | ) | override |

Definition at line 349 of file AclCppOpGenerator.cpp.

◆ visit() [21/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::ReshapeOp & | op | ) | override |

Definition at line 351 of file AclCppOpGenerator.cpp.

References mir::Shape::dim(), mir::Operation::getInput(), mir::Operation::getNumInputs(), mir::Operation::getOutput(), and mir::Shape::rank().
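The `ReshapeOp` visitor inspects shapes through `Shape::dim()` and `Shape::rank()`. Whatever layout it emits, a reshape only reinterprets existing data, so input and output must hold the same number of elements. A minimal check, with `numElements` and `isValidReshape` as hypothetical helpers:

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <numeric>
#include <vector>

// Total element count of a shape (product of its dimensions), in 64 bits
// to avoid overflow on large tensors.
std::int64_t numElements(const std::vector<std::int32_t> &shape) {
  return std::accumulate(shape.begin(), shape.end(), std::int64_t{1},
                         std::multiplies<std::int64_t>());
}

// A reshape is well-formed only if both shapes describe the same element count.
bool isValidReshape(const std::vector<std::int32_t> &in, const std::vector<std::int32_t> &out) {
  return numElements(in) == numElements(out);
}
```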

◆ visit() [22/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::ResizeOp & | op | ) | override |

Definition at line 877 of file AclCppOpGenerator.cpp.

◆ visit() [23/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::SigmoidOp & | op | ) | override |

Definition at line 946 of file AclCppOpGenerator.cpp.

◆ visit() [24/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::SliceOp & | op | ) | override |

Definition at line 385 of file AclCppOpGenerator.cpp.

◆ visit() [25/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::SoftmaxOp & | op | ) | override |

Definition at line 127 of file AclCppOpGenerator.cpp.

References mir::Shape::dim(), mir::ops::SoftmaxOp::getAxis(), mir::Operation::getInput(), mir::Operation::getNumInputs(), and mir::Operation::getOutput().
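The `SoftmaxOp` visitor reads `getAxis()` together with the input shape. The reference implementation below covers only the case where the axis is the last (innermost) one and can serve to check generated code numerically; it subtracts each row's maximum before exponentiating to avoid overflow. `softmaxLastAxis` is hypothetical and assumes a row-major `[rows x cols]` buffer.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Softmax over the last axis of a row-major [rows x cols] buffer.
std::vector<float> softmaxLastAxis(const std::vector<float> &x, std::size_t cols) {
  if (cols == 0 || x.size() % cols != 0)
    throw std::invalid_argument("buffer size is not a multiple of the last dimension");
  std::vector<float> y(x.size());
  for (std::size_t r = 0; r < x.size() / cols; ++r) {
    const float *row = x.data() + r * cols;
    const float m = *std::max_element(row, row + cols); // row maximum, for stability
    float sum = 0.0f;
    for (std::size_t c = 0; c < cols; ++c) {
      y[r * cols + c] = std::exp(row[c] - m);
      sum += y[r * cols + c];
    }
    for (std::size_t c = 0; c < cols; ++c)
      y[r * cols + c] /= sum;
  }
  return y;
}
```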

◆ visit() [26/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::SqrtOp & | op | ) | override |

Definition at line 834 of file AclCppOpGenerator.cpp.

◆ visit() [27/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::SqueezeOp & | op | ) | override |

Definition at line 829 of file AclCppOpGenerator.cpp.

◆ visit() [28/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::SubOp & | op | ) | override |

Definition at line 998 of file AclCppOpGenerator.cpp.

◆ visit() [29/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::TanhOp & | op | ) | override |

Definition at line 390 of file AclCppOpGenerator.cpp.

◆ visit() [30/30]

| void nnc::AclCppOpGenerator::visit | ( | mir::ops::TransposeOp & | op | ) | override |

Definition at line 921 of file AclCppOpGenerator.cpp.

References mir::ops::TransposeOp::getAxisOrder(), mir::Operation::getInput(), mir::Operation::getNumInputs(), and mir::Operation::getOutput().
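The `TransposeOp` visitor reads `getAxisOrder()`. Assuming the NumPy-style convention, where output dimension `i` is input dimension `axisOrder[i]`, the output shape can be computed and the permutation validated as follows; `transposedShape` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Output dim i = input dim axisOrder[i]; axisOrder must be a permutation.
std::vector<int> transposedShape(const std::vector<int> &in,
                                 const std::vector<std::size_t> &axisOrder) {
  if (axisOrder.size() != in.size())
    throw std::invalid_argument("axis order rank does not match input rank");
  std::vector<bool> seen(in.size(), false);
  std::vector<int> out(in.size());
  for (std::size_t i = 0; i < axisOrder.size(); ++i) {
    const std::size_t a = axisOrder[i];
    if (a >= in.size() || seen[a])
      throw std::invalid_argument("axis order is not a permutation");
    seen[a] = true;
    out[i] = in[a];
  }
  return out;
}
```

For example, the order `{0, 3, 1, 2}` turns an NHWC shape into the corresponding NCHW shape.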

◆ visit_fallback()

| void nnc::AclCppOpGenerator::visit_fallback | ( | mir::Operation & | op | ) | override protected virtual |

Reimplemented from mir::Visitor.

Definition at line 1000 of file AclCppOpGenerator.cpp.

The documentation for this class was generated from the following files:

- compiler/nnc/backends/acl_soft_backend/AclCppOpGenerator.h

- compiler/nnc/backends/acl_soft_backend/AclCppOpGenerator.cpp