#include <If.h>

Public Member Functions

| | If (const Tensor *cond, const std::vector< const Tensor * > &inputs, std::vector< Tensor * > outputs, RuntimeGraph *then_graph, RuntimeGraph *else_graph) |

| const Tensor * | cond () const |

| const Tensor * | input (int index) const |

| Tensor * | output (int index) const |

| void | configure () override |

| void | execute () const override |

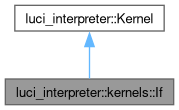

Public Member Functions inherited from luci_interpreter::Kernel

| virtual | ~Kernel ()=default |

| const std::vector< const Tensor * > & | getInputTensors () const |

| const std::vector< Tensor * > & | getOutputTensors () const |

Additional Inherited Members

Protected Member Functions inherited from luci_interpreter::Kernel

| | Kernel (std::vector< const Tensor * > inputs, std::vector< Tensor * > outputs) |

Protected Attributes inherited from luci_interpreter::Kernel

| const std::vector< const Tensor * > | _inputs |

| const std::vector< Tensor * > | _outputs |

Detailed Description

Constructor & Destructor Documentation

◆ If()

luci_interpreter::kernels::If::If (const Tensor *cond, const std::vector< const Tensor * > &inputs, std::vector< Tensor * > outputs, RuntimeGraph *then_graph, RuntimeGraph *else_graph)

Definition at line 35 of file If.cpp.

Member Function Documentation

◆ cond()

Definition at line 34 of file If.h.

References luci_interpreter::Kernel::_inputs.

Referenced by configure(), and execute().

◆ configure()

override, virtual

Implements luci_interpreter::Kernel.

Definition at line 42 of file If.cpp.

References cond(), luci_interpreter::Kernel::getInputTensors(), luci_interpreter::Kernel::getOutputTensors(), LUCI_INTERPRETER_CHECK, luci::must_cast(), and size.
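
The references listed for configure() (tensor lists, `size`, and `LUCI_INTERPRETER_CHECK`) suggest that it validates the kernel's tensors against the two branch graphs. The following is a minimal sketch of that kind of arity check, not the code in If.cpp. `MiniGraph` and `checkBranchArity` are hypothetical stand-ins for illustration, and the specific conditions checked are an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <initializer_list>
#include <stdexcept>

// Hypothetical stand-in for a branch subgraph: only the tensor counts matter here.
struct MiniGraph
{
  std::size_t num_inputs;
  std::size_t num_outputs;
};

// Assumed validation: each branch must accept every data input of the kernel
// (the condition tensor excluded) and produce one result per kernel output.
inline void checkBranchArity(const MiniGraph &then_graph, const MiniGraph &else_graph,
                             std::size_t num_data_inputs, std::size_t num_outputs)
{
  for (const MiniGraph *graph : {&then_graph, &else_graph})
  {
    if (graph->num_inputs != num_data_inputs)
      throw std::runtime_error("branch input count mismatch");
    if (graph->num_outputs != num_outputs)
      throw std::runtime_error("branch output count mismatch");
  }
}
```

Checking both branches up front means a mismatch is reported at configuration time rather than when one particular branch happens to be taken.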

◆ execute()

override, virtual

Implements luci_interpreter::Kernel.

Definition at line 55 of file If.cpp.

References cond(), luci_interpreter::Tensor::data(), luci_interpreter::getDataTypeSize(), luci_interpreter::Kernel::getInputTensors(), luci_interpreter::RuntimeGraph::getInputTensors(), luci_interpreter::Kernel::getOutputTensors(), input(), LUCI_INTERPRETER_CHECK, luci::must_cast(), luci_interpreter::Shape::num_elements(), output(), luci_interpreter::Tensor::resize(), and luci_interpreter::Tensor::shape().
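
Judging by the references above (reading `cond()`, feeding a graph's input tensors, and resizing the kernel outputs), execute() appears to select one of the two graphs and copy data in and out of it. The sketch below illustrates that general conditional-dispatch pattern with plain standard-library types. `Values`, `Branch`, and `runIf` are hypothetical names, and the real kernel operates on `Tensor` and `RuntimeGraph` objects whose exact calls are not shown on this page.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <stdexcept>
#include <vector>

// Hypothetical stand-ins: a tensor is a flat float buffer, a branch is a callable.
using Values = std::vector<float>;
using Branch = std::function<std::vector<Values>(const std::vector<Values> &)>;

// Runs exactly one branch, chosen by the scalar condition, and copies its
// results into the caller-owned outputs. Copy assignment also resizes each
// output buffer to the size of the corresponding branch result.
inline void runIf(bool cond, const std::vector<Values> &inputs, std::vector<Values> &outputs,
                  const Branch &then_branch, const Branch &else_branch)
{
  const Branch &active = cond ? then_branch : else_branch;
  const std::vector<Values> results = active(inputs);
  if (results.size() != outputs.size())
    throw std::runtime_error("branch produced an unexpected number of outputs");
  for (std::size_t i = 0; i < results.size(); ++i)
    outputs[i] = results[i];
}
```

Only the selected branch is evaluated, so the two branches may produce outputs of different sizes, which is why the outputs are resized on every call in this sketch.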

◆ input()

Definition at line 35 of file If.h.

References luci_interpreter::Kernel::_inputs.

Referenced by execute().

◆ output()

inline

Definition at line 36 of file If.h.

References luci_interpreter::Kernel::_outputs.

Referenced by execute().

The documentation for this class was generated from the following files: