Constructs the inference sequence for a given computational graph and gathers the list of variables used in the artifact.

#include <ModelAnalyzer.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| void | analyze (const mir::Graph *g) | Constructs the inference sequence |
| void | visit (mir::ops::AbsOp &) override | |
| void | visit (mir::ops::AddOp &op) override | |
| void | visit (mir::ops::AvgPool2DOp &op) override | |
| void | visit (mir::ops::BroadcastOp &op) override | |
| void | visit (mir::ops::CappedReluOp &op) override | |
| void | visit (mir::ops::ConcatOp &op) override | |
| void | visit (mir::ops::ConstantOp &op) override | |
| void | visit (mir::ops::Conv2DOp &op) override | |
| void | visit (mir::ops::DeConv2DOp &op) override | |
| void | visit (mir::ops::DepthwiseConv2DOp &op) override | |
| void | visit (mir::ops::DivOp &op) override | |
| void | visit (mir::ops::EluOp &op) override | |
| void | visit (mir::ops::FullyConnectedOp &op) override | |
| void | visit (mir::ops::GatherOp &op) override | |
| void | visit (mir::ops::InputOp &op) override | |
| void | visit (mir::ops::LeakyReluOp &op) override | |
| void | visit (mir::ops::MaxOp &op) override | |
| void | visit (mir::ops::MaxPool2DOp &op) override | |
| void | visit (mir::ops::MulOp &op) override | |
| void | visit (mir::ops::OutputOp &op) override | |
| void | visit (mir::ops::PadOp &op) override | |
| void | visit (mir::ops::ReduceMeanOp &op) override | |
| void | visit (mir::ops::ReluOp &op) override | |
| void | visit (mir::ops::ReshapeOp &op) override | |
| void | visit (mir::ops::ResizeOp &op) override | |
| void | visit (mir::ops::SigmoidOp &op) override | |
| void | visit (mir::ops::SliceOp &op) override | |
| void | visit (mir::ops::SoftmaxOp &op) override | |
| void | visit (mir::ops::SqrtOp &op) override | |
| void | visit (mir::ops::SqueezeOp &op) override | |
| void | visit (mir::ops::SubOp &op) override | |
| void | visit (mir::ops::TanhOp &op) override | |
| void | visit (mir::ops::TransposeOp &op) override | |
| const std::vector< size_t > & | getInputs () const | |
| const std::vector< size_t > & | getPersistentTensors () const | |
| const std::vector< size_t > & | getOutputs () const | |
| const std::vector< sir::TensorDescriptor > & | getTensors () const | |
| const std::vector< std::unique_ptr< sir::Action > > & | getInferenceSequence () const | |
| std::vector< std::unique_ptr< sir::Action > > & | getInferenceSequence () | |
| const std::string & | getModelName () const | |
| size_t | getMaxTemporarySize () const | |
| size_t | getTempTID () const | |

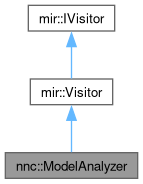

Public Member Functions inherited from mir::IVisitor

| Type | Member |
|---|---|
| virtual | ~IVisitor ()=default |

Protected Member Functions

| Type | Member |
|---|---|
| void | visit_fallback (mir::Operation &op) override |

Detailed Description

Constructs the inference sequence for a given computational graph and gathers the list of variables used in the artifact.

Definition at line 41 of file ModelAnalyzer.h.

Member Function Documentation

◆ analyze()

void nnc::ModelAnalyzer::analyze (const mir::Graph *g)

Constructs the inference sequence.

Parameters
- g: pointer to the graph to linearize

Definition at line 231 of file ModelAnalyzer.cpp.

References mir::Operation::getOutputs().

Referenced by nnc::CPPCodeGenerator::run(), and TEST().
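analyze() linearizes the graph, i.e. it produces an order in which each operation runs only after the operations that produce its inputs. The sketch below shows one standard way to do this: an iterative depth-first search from the source nodes that collects a post-order and then reverses it. It is an illustration under assumptions, not the code at line 231 of ModelAnalyzer.cpp. The Node type and linearize() are hypothetical, and the only link to the real implementation is that analyze() is documented as referencing mir::Operation::getOutputs(), which suggests a traversal along consumer edges.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <stack>
#include <utility>
#include <vector>

// Hypothetical stand-in for a graph operation; this is NOT mir::Operation.
struct Node {
  int id;
  std::vector<Node *> inputs;   // producers of this node's operands
  std::vector<Node *> outputs;  // consumers of this node's results
};

// Orders the nodes so that every node comes after all of its producers.
// Iterative DFS starting at source nodes (no inputs) and following output
// edges; nodes are recorded in post-order, and the reversed post-order of a
// DAG is a valid topological order.
std::vector<Node *> linearize(const std::vector<Node *> &nodes) {
  std::vector<Node *> post_order;
  std::set<Node *> visited;
  // Current DFS path: a node plus the index of the next output edge to follow.
  std::stack<std::pair<Node *, std::size_t>> path;

  for (Node *start : nodes) {
    if (!start->inputs.empty() || visited.count(start) != 0)
      continue;
    visited.insert(start);
    path.push({start, 0});
    while (!path.empty()) {
      Node *node = path.top().first;
      std::size_t &edge = path.top().second;
      if (edge == node->outputs.size()) {
        post_order.push_back(node);  // every consumer has been explored
        path.pop();
        continue;
      }
      Node *next = node->outputs[edge++];
      if (visited.insert(next).second)
        path.push({next, 0});
    }
  }
  return std::vector<Node *>(post_order.rbegin(), post_order.rend());
}
```

Keeping the DFS path on an explicit stack rather than recursing avoids deep call stacks on long chains of operations.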

◆ getInferenceSequence() [1/2]

std::vector< std::unique_ptr< sir::Action > > & nnc::ModelAnalyzer::getInferenceSequence () [inline]

Returns: the inference sequence.

Definition at line 115 of file ModelAnalyzer.h.

◆ getInferenceSequence() [2/2]

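const std::vector< std::unique_ptr< sir::Action > > & nnc::ModelAnalyzer::getInferenceSequence () const [inline]

Returns: the inference sequence.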

Definition at line 107 of file ModelAnalyzer.h.

Referenced by nnc::CPPCodeGenerator::run(), and TEST().
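The two getInferenceSequence() overloads follow the usual C++ const/non-const accessor pattern: calling it on a const object yields a read-only reference to the sequence, while calling it on a non-const object yields a mutable reference that a caller could use to edit the sequence. A minimal sketch with hypothetical Action and Analyzer types (not sir::Action or nnc::ModelAnalyzer):

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical action type; sir::Action in nnc has its own definition.
struct Action {
  explicit Action(std::string name) : name(std::move(name)) {}
  virtual ~Action() = default;
  std::string name;
};

class Analyzer {
public:
  // Const overload: the vector cannot be resized, reordered or reassigned.
  const std::vector<std::unique_ptr<Action>> &getInferenceSequence() const { return _seq; }
  // Non-const overload: callers may append, erase or replace actions.
  std::vector<std::unique_ptr<Action>> &getInferenceSequence() { return _seq; }

private:
  std::vector<std::unique_ptr<Action>> _seq;
};

// Takes a const reference, so the const overload is selected.
std::size_t countActions(const Analyzer &a) { return a.getInferenceSequence().size(); }
```

Note that the const overload protects only the vector: its elements are std::unique_ptr<Action>, and dereferencing them still yields a non-const Action.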

◆ getInputs()

const std::vector< size_t > & nnc::ModelAnalyzer::getInputs () const [inline]

Returns: a vector of the ids of the network input tensors.

Definition at line 87 of file ModelAnalyzer.h.

◆ getMaxTemporarySize()

size_t nnc::ModelAnalyzer::getMaxTemporarySize () const [inline]

Definition at line 122 of file ModelAnalyzer.h.

◆ getModelName()

const std::string & nnc::ModelAnalyzer::getModelName () const [inline]

Returns: the model name, taken from the Model IR.

Definition at line 120 of file ModelAnalyzer.h.

◆ getOutputs()

const std::vector< size_t > & nnc::ModelAnalyzer::getOutputs () const [inline]

Returns: a vector of the ids of the network output tensors.

Definition at line 97 of file ModelAnalyzer.h.

◆ getPersistentTensors()

const std::vector< size_t > & nnc::ModelAnalyzer::getPersistentTensors () const [inline]

Returns: a vector of the ids of tensors with unique names taken from the Model IR.

Definition at line 92 of file ModelAnalyzer.h.

◆ getTempTID()

size_t nnc::ModelAnalyzer::getTempTID () const [inline]

Definition at line 124 of file ModelAnalyzer.h.

◆ getTensors()

const std::vector< sir::TensorDescriptor > & nnc::ModelAnalyzer::getTensors () const [inline]

Returns: a vector of all network tensors.

Definition at line 102 of file ModelAnalyzer.h.
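getInputs(), getOutputs() and getPersistentTensors() return tensor ids, while getTensors() returns the descriptors themselves. The sketch below resolves ids to tensor names, assuming (this reference does not state it) that an id is the position of its descriptor in the getTensors() vector. The TensorDescriptor struct and the namesOf() helper are hypothetical stand-ins, not the real sir::TensorDescriptor definition.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical descriptor; the real sir::TensorDescriptor may differ.
struct TensorDescriptor {
  std::size_t id;
  std::string name;
};

// Maps tensor ids to names, ASSUMING an id is the index of its descriptor
// in the tensor vector.
std::vector<std::string> namesOf(const std::vector<std::size_t> &ids,
                                 const std::vector<TensorDescriptor> &tensors) {
  std::vector<std::string> names;
  names.reserve(ids.size());
  for (std::size_t id : ids) {
    const TensorDescriptor &td = tensors.at(id);  // throws std::out_of_range on a bad id
    assert(td.id == id);  // checks the index == id assumption in debug builds
    names.push_back(td.name);
  }
  return names;
}
```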

◆ visit() [1/33]

void nnc::ModelAnalyzer::visit (mir::ops::AbsOp &) [override]

Definition at line 423 of file ModelAnalyzer.cpp.

◆ visit() [2/33]

void nnc::ModelAnalyzer::visit (mir::ops::AddOp &op) [override]

Definition at line 425 of file ModelAnalyzer.cpp.

◆ visit() [3/33]

void nnc::ModelAnalyzer::visit (mir::ops::AvgPool2DOp &op) [override]

Definition at line 320 of file ModelAnalyzer.cpp.

◆ visit() [4/33]

void nnc::ModelAnalyzer::visit (mir::ops::BroadcastOp &op) [override]

Definition at line 329 of file ModelAnalyzer.cpp.

◆ visit() [5/33]

void nnc::ModelAnalyzer::visit (mir::ops::CappedReluOp &op) [override]

Definition at line 331 of file ModelAnalyzer.cpp.

◆ visit() [6/33]

void nnc::ModelAnalyzer::visit (mir::ops::ConcatOp &op) [override]

Definition at line 300 of file ModelAnalyzer.cpp.

◆ visit() [7/33]

void nnc::ModelAnalyzer::visit (mir::ops::ConstantOp &op) [override]

Definition at line 339 of file ModelAnalyzer.cpp.

References mir::Operation::getNumInputs(), and mir::Operation::getOutput().

◆ visit() [8/33]

void nnc::ModelAnalyzer::visit (mir::ops::Conv2DOp &op) [override]

Definition at line 302 of file ModelAnalyzer.cpp.

References mir::Shape::dim(), mir::Operation::getInputShape(), mir::ops::Conv2DOp::getNumGroups(), and mir::Operation::getOutputShape().
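The Conv2DOp visitor reads the kernel group count and the input and output shapes, and the class exposes getMaxTemporarySize(). One plausible pattern behind these, assumed here rather than taken from the source, is that each operation needing scratch space reports a size and the analyzer keeps only the maximum, so a single temporary buffer can serve every operation in turn. The TempSizeTracker class, the Shape4 alias and the im2col-style size formula below are all hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical 4-D shape; mir::Shape is a different class.
using Shape4 = std::array<std::size_t, 4>;

class TempSizeTracker {
public:
  // Records a scratch-buffer request and keeps only the largest one, which
  // suffices if one buffer is reused by each operation in turn (assumption).
  void request(std::size_t elements) { _max = std::max(_max, elements); }
  std::size_t maxTemporarySize() const { return _max; }

private:
  std::size_t _max = 0;
};

// ASSUMED im2col-style formula: one row of kernel_h * kernel_w * in_channels
// values per output position (batch * out_h * out_w). Kernel layout assumed
// to be [out_channels, kernel_h, kernel_w, in_channels]; output assumed NHWC.
std::size_t im2colElements(const Shape4 &kernel, const Shape4 &out) {
  return kernel[1] * kernel[2] * kernel[3] * out[0] * out[1] * out[2];
}
```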

◆ visit() [9/33]

void nnc::ModelAnalyzer::visit (mir::ops::DeConv2DOp &op) [override]

Definition at line 386 of file ModelAnalyzer.cpp.

References mir::Shape::dim(), mir::Operation::getInputShape(), and mir::Operation::getOutputShape().

◆ visit() [10/33]

void nnc::ModelAnalyzer::visit (mir::ops::DepthwiseConv2DOp &op) [override]

Definition at line 313 of file ModelAnalyzer.cpp.

◆ visit() [11/33]

void nnc::ModelAnalyzer::visit (mir::ops::DivOp &op) [override]

Definition at line 430 of file ModelAnalyzer.cpp.

◆ visit() [12/33]

void nnc::ModelAnalyzer::visit (mir::ops::EluOp &op) [override]

Definition at line 384 of file ModelAnalyzer.cpp.

◆ visit() [13/33]

void nnc::ModelAnalyzer::visit (mir::ops::FullyConnectedOp &op) [override]

Definition at line 324 of file ModelAnalyzer.cpp.

◆ visit() [14/33]

void nnc::ModelAnalyzer::visit (mir::ops::GatherOp &op) [override]

Definition at line 412 of file ModelAnalyzer.cpp.

◆ visit() [15/33]

void nnc::ModelAnalyzer::visit (mir::ops::InputOp &op) [override]

Definition at line 333 of file ModelAnalyzer.cpp.

References mir::Operation::getNumInputs().

◆ visit() [16/33]

void nnc::ModelAnalyzer::visit (mir::ops::LeakyReluOp &op) [override]

Definition at line 416 of file ModelAnalyzer.cpp.

◆ visit() [17/33]

void nnc::ModelAnalyzer::visit (mir::ops::MaxOp &op) [override]

Definition at line 435 of file ModelAnalyzer.cpp.

◆ visit() [18/33]

void nnc::ModelAnalyzer::visit (mir::ops::MaxPool2DOp &op) [override]

Definition at line 322 of file ModelAnalyzer.cpp.

◆ visit() [19/33]

void nnc::ModelAnalyzer::visit (mir::ops::MulOp &op) [override]

Definition at line 440 of file ModelAnalyzer.cpp.

◆ visit() [20/33]

void nnc::ModelAnalyzer::visit (mir::ops::OutputOp &op) [override]

Definition at line 421 of file ModelAnalyzer.cpp.

◆ visit() [21/33]

void nnc::ModelAnalyzer::visit (mir::ops::PadOp &op) [override]

Definition at line 400 of file ModelAnalyzer.cpp.

◆ visit() [22/33]

void nnc::ModelAnalyzer::visit (mir::ops::ReduceMeanOp &op) [override]

Definition at line 402 of file ModelAnalyzer.cpp.

◆ visit() [23/33]

void nnc::ModelAnalyzer::visit (mir::ops::ReluOp &op) [override]

Definition at line 352 of file ModelAnalyzer.cpp.

◆ visit() [24/33]

void nnc::ModelAnalyzer::visit (mir::ops::ReshapeOp &op) [override]

Definition at line 354 of file ModelAnalyzer.cpp.

◆ visit() [25/33]

void nnc::ModelAnalyzer::visit (mir::ops::ResizeOp &op) [override]

Definition at line 356 of file ModelAnalyzer.cpp.

References mir::Operation::getInputShape(), mir::ops::ResizeOp::getMode(), mir::Operation::getOutputShape(), and mir::ops::ResizeOp::nearestNeighbor.

◆ visit() [26/33]

|

override |

Definition at line 414 of file ModelAnalyzer.cpp.

◆ visit() [27/33]

|

override |

Definition at line 377 of file ModelAnalyzer.cpp.

◆ visit() [28/33]

|

override |

Definition at line 318 of file ModelAnalyzer.cpp.

◆ visit() [29/33]

|

override |

Definition at line 398 of file ModelAnalyzer.cpp.

◆ visit() [30/33]

|

override |

Definition at line 396 of file ModelAnalyzer.cpp.

◆ visit() [31/33]

|

override |

Definition at line 445 of file ModelAnalyzer.cpp.

◆ visit() [32/33]

|

override |

Definition at line 379 of file ModelAnalyzer.cpp.

◆ visit() [33/33]

|

override |

Definition at line 407 of file ModelAnalyzer.cpp.

◆ visit_fallback()

void nnc::ModelAnalyzer::visit_fallback (mir::Operation &op) [override, protected, virtual]

Reimplemented from mir::Visitor.

Definition at line 450 of file ModelAnalyzer.cpp.
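ModelAnalyzer overrides visit() for 33 operation types plus the protected visit_fallback(), which it reimplements from mir::Visitor. The sketch below illustrates the arrangement this suggests, under the assumption that the base visitor's default visit() overloads forward to visit_fallback(): a derived class overrides only the cases it handles, and every other operation type reaches the fallback. IVisitor, Visitor, Operation, the three op types and Analyzer are hypothetical miniatures, and throwing from the fallback is an illustrative choice; this reference does not document what ModelAnalyzer::visit_fallback() actually does.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Hypothetical operation types, forward-declared for the visitor interface.
struct AbsOp;
struct ReluOp;
struct TanhOp;

// Hypothetical visitor interface: one pure virtual visit() per operation type.
struct IVisitor {
  virtual ~IVisitor() = default;
  virtual void visit(AbsOp &) = 0;
  virtual void visit(ReluOp &) = 0;
  virtual void visit(TanhOp &) = 0;
};

// Hypothetical operation base; accept() performs the second dispatch.
struct Operation {
  virtual ~Operation() = default;
  virtual void accept(IVisitor &v) = 0;
  virtual std::string name() const = 0;
};

struct AbsOp : Operation {
  void accept(IVisitor &v) override { v.visit(*this); }
  std::string name() const override { return "Abs"; }
};
struct ReluOp : Operation {
  void accept(IVisitor &v) override { v.visit(*this); }
  std::string name() const override { return "Relu"; }
};
struct TanhOp : Operation {
  void accept(IVisitor &v) override { v.visit(*this); }
  std::string name() const override { return "Tanh"; }
};

// Every default visit() forwards to visit_fallback(), so subclasses override
// only the operation types they support.
struct Visitor : IVisitor {
  void visit(AbsOp &op) override { visit_fallback(op); }
  void visit(ReluOp &op) override { visit_fallback(op); }
  void visit(TanhOp &op) override { visit_fallback(op); }

protected:
  virtual void visit_fallback(Operation &) {}
};

class Analyzer : public Visitor {
public:
  using Visitor::visit;  // keep the unhandled base overloads visible
  void visit(AbsOp &) override { log += "abs;"; }
  void visit(ReluOp &) override { log += "relu;"; }

  std::string log;  // records which handlers ran

protected:
  // Reached for every operation type without a dedicated override (TanhOp here).
  void visit_fallback(Operation &op) override {
    throw std::runtime_error("unsupported operation: " + op.name());
  }
};
```

The `using Visitor::visit;` declaration keeps the base overloads visible, so the derived class's own visit() declarations do not hide them during name lookup.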

The documentation for this class was generated from the following files:

- compiler/nnc/backends/soft_backend/ModelAnalyzer.h

- compiler/nnc/backends/soft_backend/ModelAnalyzer.cpp