Loading...

Searching...

No Matches

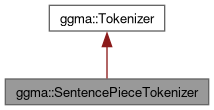

ggma::SentencePieceTokenizer Class Reference

Public Member Functions

| Return type | Member |
| --- | --- |
| `std::string` | `id () const` |
| `size_t` | `tokenize (const char *text, size_t text_len, int32_t *tokens, size_t max_tokens, size_t *n_tokens) const override` |
| `size_t` | `detokenize (const int32_t *tokens, size_t n_tokens, char *text, size_t text_len) const override` |

Static Public Member Functions

| Return type | Member |
| --- | --- |
| `static Tokenizer *` | `create (const std::string &tokenizer_dir)` |

Detailed Description

Definition at line 26 of file TokenizerSentencePiece.cc.

Member Function Documentation

◆ create()

`Tokenizer * ggma::SentencePieceTokenizer::create (const std::string &tokenizer_dir)` [static]

Definition at line 40 of file TokenizerSentencePiece.cc.

    {
      auto tokenizer = std::make_unique<SentencePieceTokenizer>();
      tokenizer->_processor = std::make_unique<::sentencepiece::SentencePieceProcessor>();

      // ... (source lines 45-46, where status is set, are not shown in this listing)

      return status.ok() ? tokenizer.release() : nullptr;
    }
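
Because `create()` hands back a raw pointer obtained from `release()` and returns `nullptr` on failure, the caller becomes responsible for both the null check and the eventual `delete`. The following is a minimal sketch of one way a caller might handle that. The `sketch::Tokenizer` and `sketch::FakeTokenizer` types and the `make_owned` helper are hypothetical stand-ins written for this example, not part of ggma, and the sketch assumes the real `ggma::Tokenizer` base class has a virtual destructor, which this page does not confirm.

```cpp
#include <cassert>
#include <memory>
#include <string>

namespace sketch
{
// Hypothetical stand-in for the ggma::Tokenizer base class.
// Assumption: the real base class declares a virtual destructor.
struct Tokenizer
{
  virtual ~Tokenizer() = default;
  virtual std::string id() const = 0;
};

// Hypothetical tokenizer whose factory follows the same pattern as
// create() above: build with unique_ptr, release on success,
// return nullptr on failure.
struct FakeTokenizer : Tokenizer
{
  std::string id() const override { return "fake"; }

  static Tokenizer *create(bool load_ok)
  {
    auto tokenizer = std::make_unique<FakeTokenizer>();
    // On failure the unique_ptr destroys the object when it goes out of scope.
    return load_ok ? tokenizer.release() : nullptr;
  }
};

// Caller-side helper: re-wrap the raw pointer immediately so that
// ownership is explicit and the object is deleted automatically.
inline std::unique_ptr<Tokenizer> make_owned(bool load_ok)
{
  return std::unique_ptr<Tokenizer>(FakeTokenizer::create(load_ok));
}
} // namespace sketch
```

A caller would then test the returned `std::unique_ptr` against `nullptr` before using it, instead of tracking a raw pointer by hand.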

◆ detokenize()

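`size_t ggma::SentencePieceTokenizer::detokenize (const int32_t *tokens, size_t n_tokens, char *text, size_t text_len) const` [override, virtual]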

Implements ggma::Tokenizer.

Definition at line 96 of file TokenizerSentencePiece.cc.

    {
      if (!tokens || !text || n_tokens == 0 || text_len == 0 || !_processor)
        return 0;

      std::vector<int> piece_ids;
      for (size_t i = 0; i < n_tokens; ++i)
        piece_ids.push_back(tokens[i]);

      std::string decoded_text;
      auto status = _processor->Decode(piece_ids, &decoded_text);
      if (!status.ok())
        return 0;

      size_t copy_len = std::min(decoded_text.length(), text_len - 1);
      memcpy(text, decoded_text.c_str(), copy_len);
      text[copy_len] = '\0';

      return copy_len;
    }
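
The last step of `detokenize()` copies at most `text_len - 1` bytes and always writes a terminating NUL, so output longer than the buffer is silently truncated. The sketch below isolates that step so the behavior can be checked without a SentencePiece model. `copy_bounded` is a hypothetical name introduced for this example, not a ggma function.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <string>

// Hypothetical helper mirroring the bounded copy at the end of detokenize().
// Copies as many bytes of decoded_text as fit in text (leaving room for the
// terminating NUL) and returns the number of bytes copied, excluding the NUL.
size_t copy_bounded(const std::string &decoded_text, char *text, size_t text_len)
{
  if (!text || text_len == 0)
    return 0;

  const size_t copy_len = std::min(decoded_text.length(), text_len - 1);
  std::memcpy(text, decoded_text.data(), copy_len);
  text[copy_len] = '\0';
  return copy_len;
}
```

Since the copy is byte-based, a return value equal to `text_len - 1` may mean the text was cut short, possibly in the middle of a multi-byte UTF-8 sequence. A caller that needs the full string might retry with a larger buffer in that case.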

◆ id()

`std::string ggma::SentencePieceTokenizer::id () const` [inline, virtual]

Implements ggma::Tokenizer.

Definition at line 29 of file TokenizerSentencePiece.cc.

    { return "sentencepiece"; }

◆ tokenize()

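`size_t ggma::SentencePieceTokenizer::tokenize (const char *text, size_t text_len, int32_t *tokens, size_t max_tokens, size_t *n_tokens) const` [override, virtual]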

Implements ggma::Tokenizer.

Definition at line 51 of file TokenizerSentencePiece.cc.

    {
      if (!text || !tokens || !n_tokens || max_tokens == 0 || !_processor)
      {
        if (n_tokens)
          *n_tokens = 0;
        return 0;
      }

      std::string input_text(text, text_len);
      std::vector<int> piece_ids;

      auto status = _processor->Encode(input_text, &piece_ids);
      if (!status.ok())
      {
        if (n_tokens)
          *n_tokens = 0;
        return 0;
      }

      // Initialize tokens array to 0
      std::fill(tokens, tokens + max_tokens, 0);

      // Look up the BOS token ID; it is prepended when the model defines one (bos_id >= 0)
      int bos_id = _processor->bos_id();
      size_t bos_offset = 0;

      // TODO: Make BOS token prepending configurable
      if (bos_id >= 0 && max_tokens > 0)
      {
        tokens[0] = bos_id; // Add BOS token
        bos_offset = 1;     // Start actual tokens from index 1
      }

      size_t available_space = max_tokens - bos_offset;
      size_t token_count = std::min(piece_ids.size(), available_space);

      for (size_t i = 0; i < token_count; ++i)
        tokens[bos_offset + i] = static_cast<int32_t>(piece_ids[i]);

      *n_tokens = bos_offset + token_count;
      return bos_offset + token_count;
    }
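
The buffer-packing part of `tokenize()` can be exercised without a SentencePiece model: it zero-fills the output, writes the BOS id at index 0 when one is defined, and then copies only as many piece ids as still fit. The sketch below reproduces that logic in a free function. `pack_tokens` is a hypothetical name introduced for this example, and the piece ids used with it are arbitrary values, not output from a real model.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper mirroring the packing step of tokenize().
// Writes bos_id at index 0 when bos_id >= 0, then copies as many piece ids
// as fit into the remaining space. Returns the number of entries written.
size_t pack_tokens(const std::vector<int> &piece_ids, int bos_id, int32_t *tokens,
                   size_t max_tokens)
{
  if (!tokens || max_tokens == 0)
    return 0;

  // Unused trailing slots are left as 0, as in the listing above.
  std::fill(tokens, tokens + max_tokens, 0);

  size_t bos_offset = 0;
  if (bos_id >= 0)
  {
    tokens[0] = bos_id;
    bos_offset = 1;
  }

  // Pieces beyond the available space are dropped without an error.
  const size_t token_count = std::min(piece_ids.size(), max_tokens - bos_offset);
  for (size_t i = 0; i < token_count; ++i)
    tokens[bos_offset + i] = static_cast<int32_t>(piece_ids[i]);

  return bos_offset + token_count;
}
```

With five pieces, a BOS id of 1, and a four-slot buffer, this writes the BOS id followed by the first three pieces and returns 4. A return value equal to `max_tokens` therefore does not tell the caller whether the input was truncated.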

The documentation for this class was generated from the following file:

- runtime/ggma/src/tokenize/TokenizerSentencePiece.cc